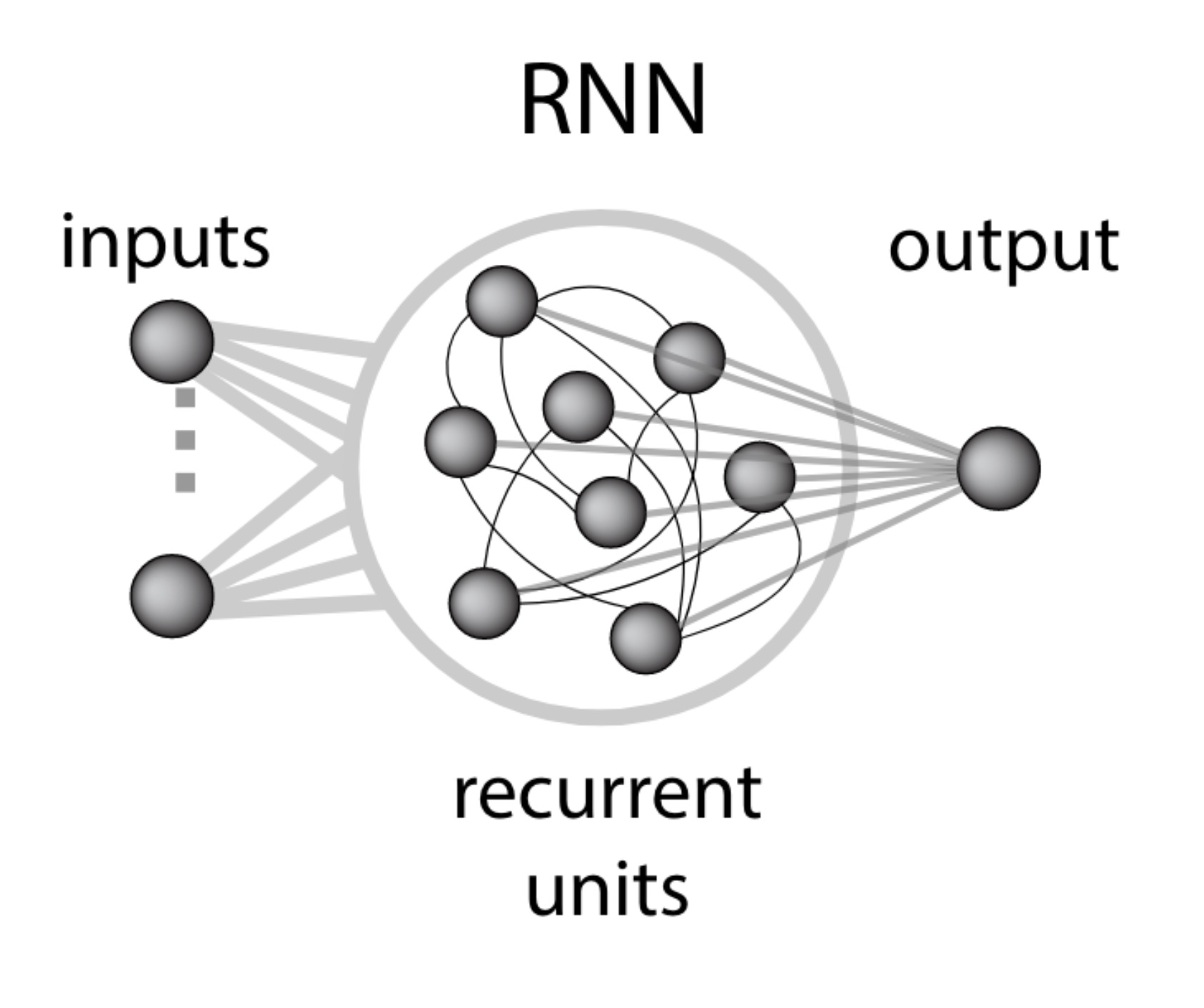

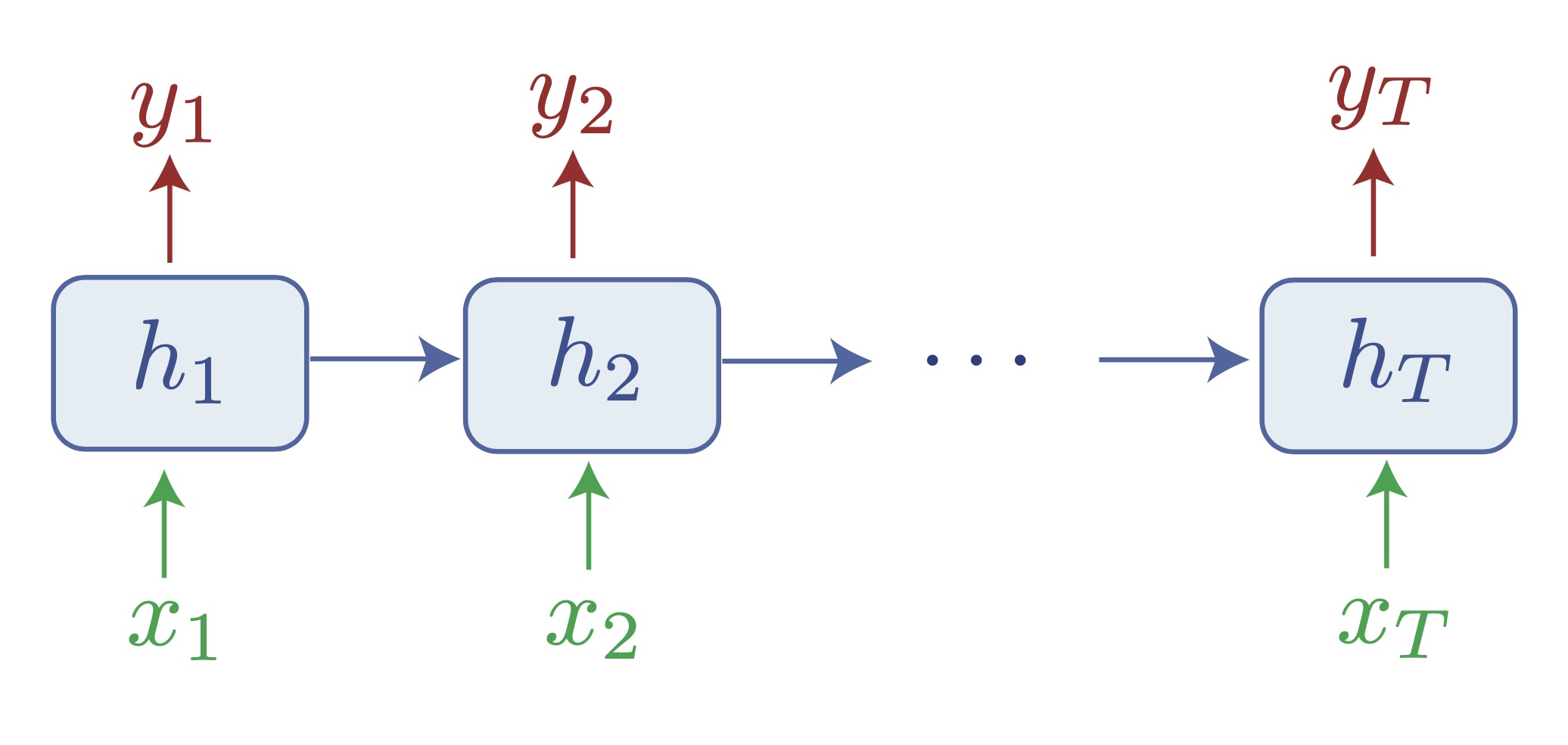

Transformers have completely taken by storm the field of sequence modelling with deep networks, becoming the standard for text processing, video, even images. RNNs that were once a very active engineering field have slowly faded into the void. All of them? No, some RNNs are bravely fighting back to claim state-of-the-art results in sequence tasks. The most suprising part?