Thanks to Hugo Gruson for his helpful comments on this translation! The free software Pandoc by John MacFarlane is a very useful tool: for example, Yanina Bellini Saibene, community manager at rOpenSci, recently asked Maëlle whether she could convert a Google document into a Quarto book. Maëlle answered the request by combining Pandoc (conversion from docx to HTML then to Markdown via pandoc::pandoc_convert()) and

Posts from Rogue Scholar

The Pandoc CLI by John MacFarlane is a really useful tool: for instance, rOpenSci community manager Yanina Bellini Saibene recently asked Maëlle whether she could convert a Google Document into a Quarto book. Maëlle solved the request with a combination of Pandoc (conversion from docx to HTML then to Markdown through pandoc::pandoc_convert()) and XPath. You can find the resulting experimental package quartificate on GitHub. Pandoc is not only
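
As a rough illustration of the two-step conversion described above, here is a minimal Python sketch. It calls the Pandoc CLI directly instead of the R wrapper pandoc::pandoc_convert() used in the post, then applies an XPath query to the intermediate HTML. The helper names (`pandoc_args`, `docx_to_markdown`, `chapter_titles`) are hypothetical. Treating level-1 headings as chapter boundaries is an assumption, and this is not how quartificate is implemented.

```python
import subprocess
import xml.etree.ElementTree as ET


def pandoc_args(src, dst, from_fmt, to_fmt):
    """Build a Pandoc CLI call converting src (from_fmt) into dst (to_fmt)."""
    return ["pandoc", src, f"--from={from_fmt}", f"--to={to_fmt}", f"--output={dst}"]


def docx_to_markdown(docx_path, html_path, md_path):
    """Two-step conversion: docx -> HTML, then HTML -> Pandoc Markdown."""
    # check=True raises CalledProcessError if Pandoc exits with an error.
    subprocess.run(pandoc_args(docx_path, html_path, "docx", "html"), check=True)
    subprocess.run(pandoc_args(html_path, md_path, "html", "markdown"), check=True)


def chapter_titles(html_fragment):
    """Return the text of every <h1>, assumed (hypothetically) to start a chapter."""
    # Without --standalone, Pandoc emits a fragment with no single root element,
    # so wrap it in a <div>; this assumes the fragment is well-formed XML
    # (no HTML-only entities such as &nbsp;).
    root = ET.fromstring(f"<div>{html_fragment}</div>")
    # ElementTree supports a subset of XPath: ".//h1" finds all level-1 headings.
    return ["".join(h.itertext()) for h in root.findall(".//h1")]
```

A real Google Docs export will probably need a full HTML parser such as lxml for the XPath step. The standard-library parser is used here only to keep the sketch dependency-free.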

How to keep up with rOpenSci? We agree that we’re doing so much good work that keeping up is hard.

Our website is based on Markdown content rendered with Hugo. Markdown content is in some cases knit from R Markdown, but with less functionality than if one rendered R Markdown to HTML as in the blogdown default. In particular, we cannot use the usual BibTeX + CSL + pandoc-citeproc dance to handle a bibliography. Thankfully, using the rOpenSci package RefManageR, we can still make our own bibliography from a BibTeX file without formatting

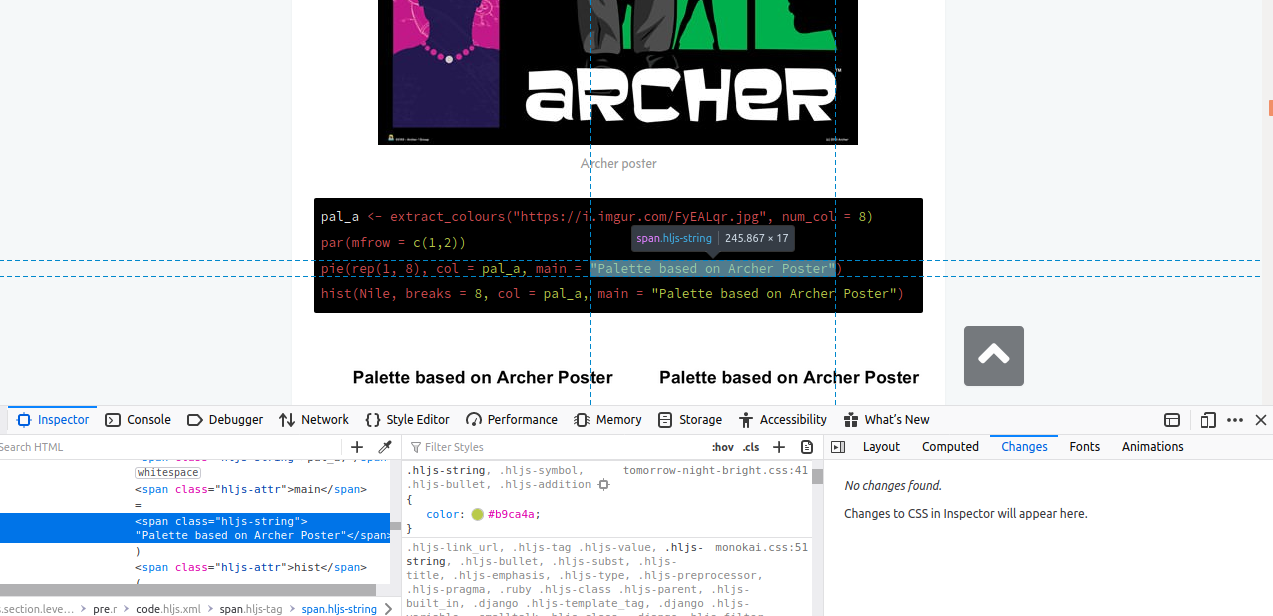

Thanks to a quite overdue update of Hugo on our build system¹, our website can now harness the full power of Hugo code highlighting for Markdown-based content. What’s code highlighting, apart from the reason behind a tongue-twister in this post title? In this post we shall explain how Hugo’s code highlighter, Chroma, helps you prettify your code (i.e. syntax highlighting), and accentuate parts of your code (i.e. line
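
Hugo lets you request line highlighting per code block through fence attributes such as `{hl_lines=[2,"4-5"]}`. To make the semantics of such a spec concrete, here is a small Python sketch that expands it into line numbers and marks the matching lines in plain text. It is only an illustration, assuming 1-based line numbers counted from the top of the block. It is not Chroma's implementation, and the helper names are hypothetical.

```python
def expand_hl_lines(spec):
    """Turn a Hugo-style hl_lines spec, e.g. [2, "4-5"], into a set of line numbers."""
    lines = set()
    for item in spec:
        if isinstance(item, int):
            lines.add(item)
        else:
            # "4-5" is an inclusive range; a bare "3" is a single line.
            start, _, end = str(item).partition("-")
            lines.update(range(int(start), int(end or start) + 1))
    return lines


def mark_lines(code, spec, marker=">> "):
    """Prefix highlighted lines with a marker and the others with matching padding."""
    highlighted = expand_hl_lines(spec)
    pad = " " * len(marker)
    return "\n".join(
        (marker if number in highlighted else pad) + line
        for number, line in enumerate(code.splitlines(), start=1)
    )
```

For example, `mark_lines("a\nb\nc", [2])` returns the three lines with only `b` prefixed by `>> `.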

Last year we reported on the joy of using commonmark and xml2 to parse Markdown content, like the source of this website built with Hugo, in particular to extract links, at the time merely to count them. How about we go a bit further and use the same approach to find links to be fixed? In this tech note we shall report our experience using R to find broken/suboptimal links and fix them. What is a bad URL?
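
The post parses Markdown with commonmark and queries the resulting XML with xml2. As a much cruder stand-in, the Python sketch below does three things:

- It pulls inline links out of Markdown with a regular expression.
- It flags plain `http://` links as candidates for an upgrade.
- It offers a helper that queries a URL's HTTP status.

The regex only handles inline `[text](url "title")` links (no reference-style links, autolinks, or URLs containing parentheses). Treating `http://` as suboptimal is an assumption about what counts as a bad URL, and all names are hypothetical.

```python
import re
import urllib.error
import urllib.request

# Inline links only: [text](url) or [text](url "title"); images (![...]) are skipped.
LINK_RE = re.compile(r'(?<!!)\[([^\]]*)\]\(([^)\s]+)(?:\s+"[^"]*")?\)')


def extract_links(markdown):
    """Return (text, url) pairs for inline Markdown links."""
    return LINK_RE.findall(markdown)


def insecure_links(links):
    """Flag links that still use plain HTTP (assumed here to be 'suboptimal')."""
    return [url for _, url in links if url.startswith("http://")]


def http_status(url, timeout=10):
    """Return the status code of a HEAD request, or None if the host is unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        # urlopen follows redirects, so this reports the final status.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 for a broken link
    except OSError:
        return None  # DNS failures, refused connections, timeouts
```

Some servers answer HEAD requests differently from GET, so a real link checker would likely retry with GET before declaring a link broken.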