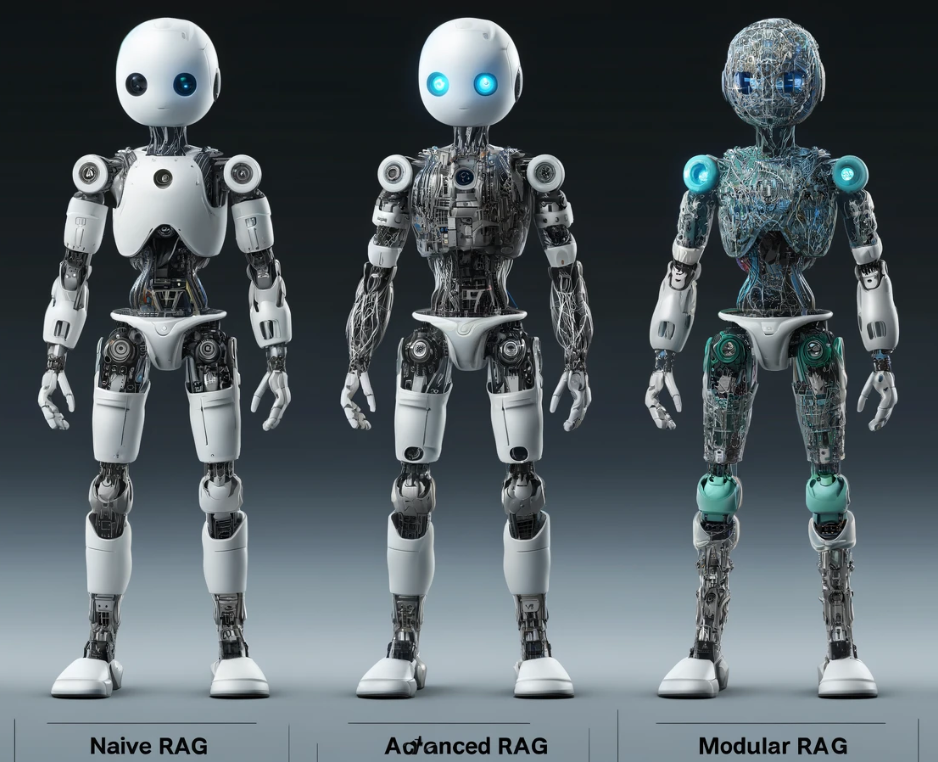

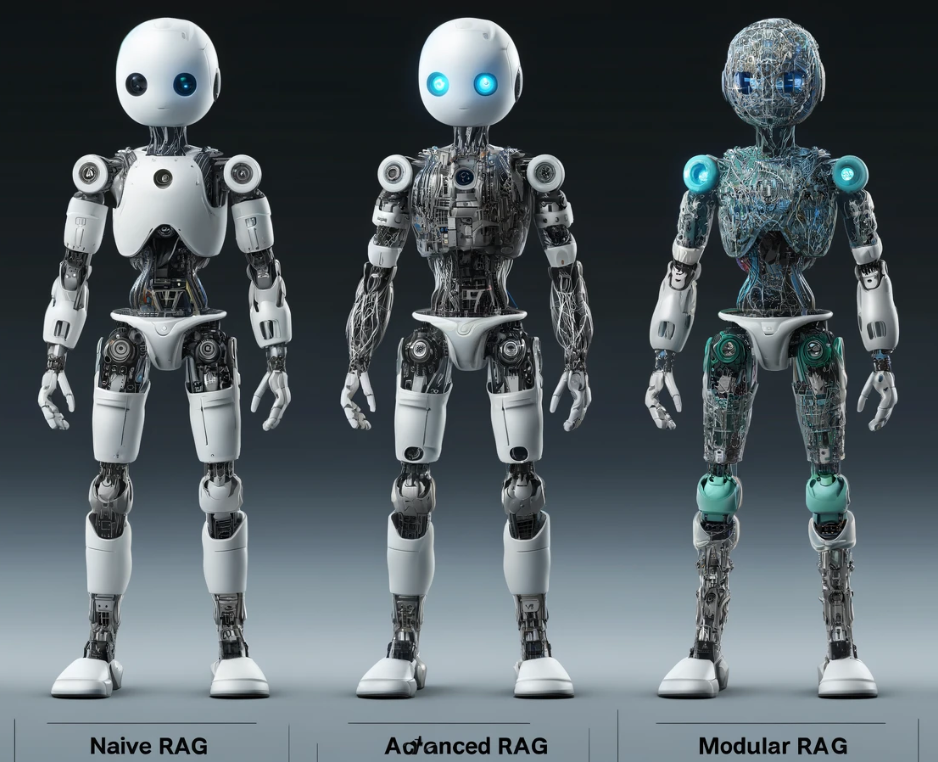

From Naive to Modular: Tracing the Evolution of Retrieval-Augmented Generation. Author: Vaibhav Khobragade (ORCID: 0009-0009-8807-5982). Introduction: Large Language Models (LLMs) have achieved remarkable success.

Supervised Fine-tuning, Reinforcement Learning from Human Feedback and the latest SteerLM. Author: Xuzeng He (ORCID: 0009-0005-7317-7426). Introduction: Large Language Models (LLMs), usually trained with extensive text data, can demonstrate remarkable capabilities in handling various tasks with state-of-the-art performance. However, people nowadays typically want something more personalised instead of a general solution.

Attention mechanism not getting enough attention. Author: Dhruv Gupta (ORCID: 0009-0004-7109-5403). Introduction: As discussed in this article, RNNs were incapable of learning long-term dependencies. To solve this issue, both LSTMs and GRUs were introduced. However, even though LSTMs and GRUs did a fairly decent job for textual data, they did not perform well.

Large Language Models for Fake News Generation and Detection. Author: Amanda Kau (ORCID: 0009-0004-4949-9284). Introduction: In recent years, fake news has become an increasing concern for many, and for good reason. Newspapers, which we once trusted to deliver credible news through accountable journalists, are vanishing en masse along with their writers.

The pews of the Internet Archive back in 2018. tl;dr: Posts on this blog are now automatically archived, indexed and full-text searchable through The Rogue Scholar. The jury might still be out on whether the small or indie web will make a comeback, but I’ve personally enjoyed posting more on my blog here in recent months.

I’ve been learning recently about how to approximate functions by low-degree polynomials. This is useful in fully homomorphic encryption (FHE) in the context of “arithmetic FHE” (see my FHE overview article), where the computational model makes low-degree polynomials cheap to evaluate and non-polynomial functions expensive or impossible. In browsing the state of the art I came across two interesting things.

By Alicia Salmerón. In 1892 the Memorias of General Porfirio Díaz were published. The work was the first volume of what was surely conceived as a larger work. It had been edited by Matías Romero, the skilled diplomat who was, at the time, don Porfirio's Secretary of Finance (secretario de Hacienda).

As iconic as Brachiosaurus altithorax is, it’s known from surprisingly little material.

Businesses have emerged that sell authorship to aspiring doctors to make their residency applications more competitive.

About two years ago, I switched teams at Google to focus on fully homomorphic encryption (abbreviated FHE, or sometimes HE). Since then I’ve got to work on a lot of interesting projects, learning along the way about post-quantum cryptography, compiler design, and the ins and outs of fully homomorphic encryption.