GPT4+Code interpreter - Playing Electronic Resources Librarian and Research Data Management Librarian roles

I've been a subscriber of OpenAI's ChatGPT Plus for a while, though until recently I struggled to justify it to myself.

The main advantages of ChatGPT Plus are as follows:

Access to the latest GPT4 model as opposed to GPT3.5 in the free version

Access to ChatGPT Plugins, including the Web browsing plugin

Access to the Code interpreter

Of these various reasons, surprisingly the main reason to subscribe to ChatGPT Plus is for access to the Code interpreter.

I discuss why the other features are not good reasons to subscribe and then end by showing you the power of the Code interpreter by letting it play two roles:

a) Electronic Resource Serials Librarian - deciding which journals to subscribe to and which to cancel through data analysis of Unsub data

b) Research Data Librarian - giving advice on the data quality of research data deposits

It is still early days for these tools of course, but they are showing great potential, and I won't be surprised if, years down the road, tools based on these capabilities become standard once the models are small enough to run locally. For example, automated research data quality checks might become standard, either as separate local tools that researchers use prior to data submission (preferred) or as functionality integrated into data repository systems that checks the data as it is deposited.

Another unexpected implication is that it pays dividends if you know a little bit of Python, particularly common packages used for data analysis such as pandas. While it is true that GPT4 can now help you code by trying out the code directly in the code sandbox, it is still very helpful to be able to peek under the hood to see what the code is actually doing.

In the example below, I show a case where GPT4 does the data analysis in a way that isn't strictly wrong but might surprise you. Only by looking at the Python code did I realize it was analysing the data in a different way than I had initially interpreted the response to be doing!

Should I subscribe to ChatGPT Plus just for GPT4?

On the face of it, given the much better capabilities of GPT4 over GPT3.5 this seems the obvious reason for subscribing.

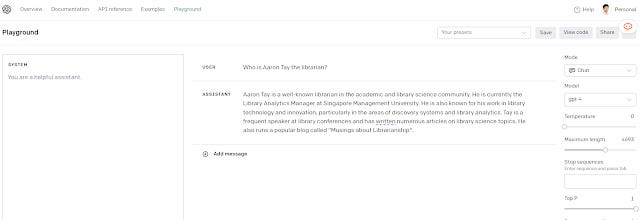

However, there is a much cheaper option. If all you want is the GPT4 model, you can just register to access GPT4 via OpenAI APIs.

Worried that you have no coding capabilities? You don't need any. Just use the "Playground".

Use GPT4 via APIs on OpenAI Playground (no code method)

It is important to note that there are reasonable suspicions the GPT4 models you get via API are not exactly the same as what is used in ChatGPT plus. Some of the differences noted could be down to differences in system messages or what is being passed from prompt to prompt. The default GPT4 models available via API also have a context length of 8k tokens, but it seems the ChatGPT plus version may not allow prompts of that length. But these are still relatively small differences. More importantly, there is a controversy right now on whether OpenAI is dumbing down GPT4 and whether this affects the API version less than the ChatGPT one. Some suspect the one you get via ChatGPT as opposed to the API is dumbed down more.

Why use this over ChatGPT Plus? Mainly for cost reasons. With APIs, you are charged per use. As of the time of writing you are charged about 0.03 USD per 1,000 tokens. In comparison, ChatGPT Plus costs 20 USD per month. If you do the math, unless you are a very heavy user, it's almost always cheaper to get access via the API.
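
To make "do the math" concrete, here is a rough sketch of the break-even point. It assumes the figures quoted above (about 0.03 USD per 1,000 tokens and 20 USD per month) and a single flat rate for all tokens, which is a simplification. The function names are made up for illustration.

```python
# Rough break-even sketch using the figures quoted in the text.
# Assumption: one flat rate per 1,000 tokens, whatever the token type.
API_USD_PER_1K_TOKENS = 0.03
PLUS_USD_PER_MONTH = 20.0


def api_cost_usd(tokens, usd_per_1k=API_USD_PER_1K_TOKENS):
    """Estimated API cost in USD for a given number of tokens."""
    return tokens / 1000 * usd_per_1k


def break_even_tokens(monthly_fee=PLUS_USD_PER_MONTH,
                      usd_per_1k=API_USD_PER_1K_TOKENS):
    """Monthly token count at which the API costs as much as the subscription."""
    return round(monthly_fee / usd_per_1k * 1000)


print(break_even_tokens())
print(api_cost_usd(100_000))
```

At the quoted rate, the API only becomes more expensive than the subscription above roughly 667,000 tokens a month.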

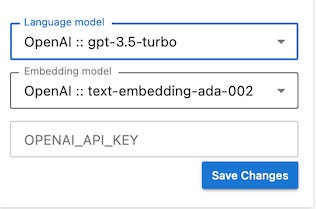

Secondly with access via API, you can use various webapps and projects such as AutoGPT that leverage OpenAI capabilities, allowing you to run them just by entering your API key. For example, I recently ran into an announcement about Jupyter AI (which is an official subproject of Project Jupyter) that

brings generative artificial intelligence to Jupyter notebooks, giving users the power to explain and generate code, fix errors, summarize content, ask questions about their local files, and generate entire notebooks from a natural language prompt.

It goes on to say the generative AI support is model neutral and you can use Large Language Models (LLMs) from OpenAI, Cohere, Anthropic etc. Simply enter your API key from OpenAI and you are set!

Enter your OpenAI API Key

Access to ChatGPT Plugins

Access to ChatGPT Plugins seems far more exciting, and as I write this there are just over 800 plugins, including many scholarly ones that search scholarly sources. Some are decent, like the official Wikipedia ChatGPT plugin, which searches Wikipedia for relevant content and uses it to ground answers, or scholarly ones like ScholarAI, which do the same but search over scholarly metadata such as Semantic Scholar/OpenAlex. Plenty, however, are unreliable or even plain broken.

But at the end of the day, many of these plugins, particularly the scholarly ones, don't really work much better than just using Bing Chat or a specialized academic search tool that uses LLMs, such as Elicit.org, Scispace or Scite assistant.

Even quite flexible ones like Zapier, which allows you to set up all sorts of workflows, I end up not using because I am not in the ChatGPT interface all the time, though this may change if it morphs into my personal assistant. I am not the only one who thinks ChatGPT plugins aren't worth paying for.

ChatGPT browsing plugin is a mess

Talking about search, ChatGPT plus also gives you OpenAI's Web browsing plugin.

This is another disappointment. When I first gained access to it, I heard it was using an implementation similar to WebGPT and it was pretty cool to watch it search, click on specific results, go back, search again etc.

But the reality is it was terribly slow and unstable, and it would often just error out.

Got access to web browse plugin in Chatgpt. First impression is slow, much slower than Bing Chat, Perplexity, maybe the results are better? I also notice the citations seem to be provided per paragraph, rather than per sentence. (1) pic.twitter.com/NIN3Vq7qQ0

— Aaron Tay (@aarontay) May 19, 2023

They later switched it to an implementation closer to the faster and less computationally expensive Bing Chat, but even that ran into a snag. As I write this, the plugin is disabled because people found out that they could use it to bypass paywalls (imagine if this could be done for academic papers!).

In any case, you might also wonder why you would use this when you can use Bing Chat for free, which uses GPT4 anyway. The answer is that Bing Chat doesn't always use GPT-4 and may use a lesser (presumably cheaper) model for queries it thinks are "easy". Some, like Ethan Mollick, suggest turning on creative or precise mode rather than balanced mode to make sure you always get GPT4.

The best reason to pay for ChatGPT plus - Code interpreter

Currently though I think the best reason to pay for ChatGPT plus is for the code-interpreter.

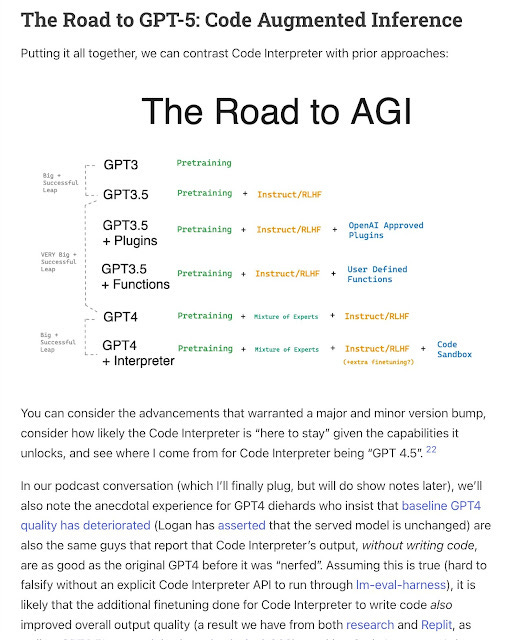

In fact, some think code-interpreter brings such significant capabilities that it should be called GPT4.5 just as ChatGPT is GPT3.5!

🆕 Code Interpreter == GPT 4.5 https://t.co/IlNRULoT7C

(or, making GPT4 1,000x better with One Weird Trick)

17,000 people tuned in to our emergency @latentspacepod with @simonw and @altryne!

I explain why Code Interpreter is a massive leap forward toward GPT5, why… pic.twitter.com/qI6t6iWXG6— swyx (@swyx) July 10, 2023

Is Code-interpreter - GPT4.5?

https://twitter.com/swyx/status/1678512823457165312/photo/2

The author of the post above, who happens to be a fellow Singaporean and seems to be deep in the LLM space, argues that GPT4+interpreter is as big a jump from GPT4 as GPT3.5 (which is actually GPT3+Instruct/RLHF) is from GPT3.

So, what does code interpreter do?

As the above image suggests it adds a "code-sandbox".

OpenAI describes it as

We provide our models with a working Python interpreter in a sandboxed, firewalled execution environment, along with some ephemeral disk space. Code run by our interpreter plugin is evaluated in a persistent session that is alive for the duration of a chat conversation (with an upper-bound timeout) and subsequent calls can build on top of each other. We support uploading files to the current conversation workspace and downloading the results of your work.

If you are a non-coder, let me explain.

GPT4, like most language models, can help with coding. However, like any human coder, it provides code based on its own "understanding" and "knowledge", so you would still need to run the code and see if it works. More advanced models like GPT4 can even correct their own mistakes, but to get there you need to run the code yourself and, if it errors out, copy and paste the error message into GPT4 so that it can try to fix the code, and then retry the newly suggested code.

Adding the code-sandbox means you the human can skip this step! GPT4 will automatically try out the code in a Python environment to see if the code works and keep trying different approaches until it works!

Note that this applies only to Python. If you ask it to code in R, or give it R code, it will not be able to do this.

As GPT4 tries different code over and over in response to the output from the sandbox to accomplish the objective given in the prompt, it reminds me of the AutoGPT type apps that were all the rage a few months ago. With this feature GPT4 is still not fully autonomous but seems to be a step closer, as it responds to the environment (code sandbox output) while it tries to achieve its goals.

For example, with the code interpreter you can upload, say, a csv file (or even multiple files in the latest update) and ask GPT4 to analyze the data, and it will do so using Python. You can even examine the Python code used to do the calculations and produce plots and diagrams. Finally, you can download the generated figures, plots or even data.

WARNING: DO NOT USE CHATGPT WITH ANY SENSITIVE DATA.

This is good advice even if you are just typing prompts with ChatGPT free, but with ChatGPT Plus and the code interpreter capabilities you should be even more careful about uploading datasets.

GPT4 -Code-Interpreter plays Electronic Resource Serials Librarian

I am going to use a dataset from the UnSub extender project to test GPT4. If you are not familiar with UnSub, it is basically an analytical service provided by OurResearch that helps libraries decide what to unsubscribe from based on a variety of factors including cost per use, % of OA, ILL cost and more.

I can share the chatgpt chat here but you may not be able to see the images generated.

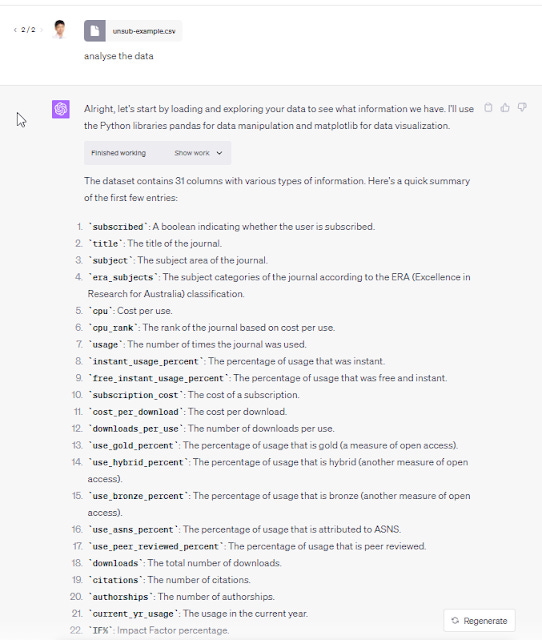

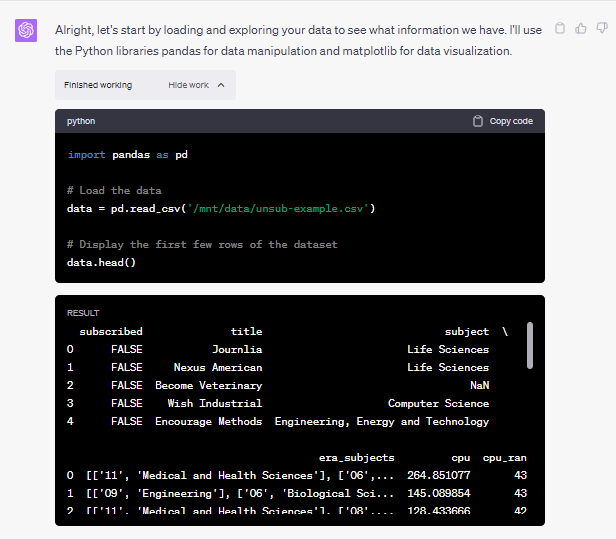

First, I upload the file unsub-example.csv and prompt GPT-4 with the generic "analyse the data" prompt, and it nicely analyses the csv file, listing the main columns and what each column contains.

This is a bit more impressive than it seems because I only uploaded the data file in csv and did not include a data dictionary. Nor did the csv file include descriptions of the columns, so it is looking at each column and interpreting it as a human would, and it clearly seems to "understand" what each column means.

This characteristic of GPT4+code interpreter to "understand" is extremely impressive and has led to worries that entry level analyst jobs will be automated away, though not everyone agrees.

If a human analyst were doing the analysis, they would open the csv file in something like Excel or Tableau. GPT4+code interpreter instead loads the csv into dataframes with Python's pandas library.

If you click on the "show work" you can see exactly what is happening under the hood with the line

data=pd.read_csv(.....)

Once it has loaded the csv into a pandas dataframe, like a good analyst it tries to get a sense of the data by checking for missing values, computing basic descriptive statistics etc.

The missing values situation is not too bad, so let's jump into the analysis. In some past attempts, I have asked it to suggest things to analyse and it was pretty good, but here let's ask it something in a vague way.
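
For readers who have never done this kind of first-pass check, here is a minimal sketch of what it amounts to. This is not the code GPT4 generated: it used pandas, where the usual calls are `DataFrame.isnull().sum()` and `DataFrame.describe()`. The version below uses only the standard library, and the sample data and column names are invented.

```python
import csv
import io
import statistics

# Hypothetical stand-in for the uploaded file; the column names are invented.
SAMPLE_CSV = """title,usage,cpu,subscribed
Journal A,1200,2.5,Yes
Journal B,,14.0,No
Journal C,300,,Maybe
Journal D,50,40.0,No
"""


def profile_csv(text):
    """Count missing values per column and summarise the numeric columns."""
    rows = list(csv.DictReader(io.StringIO(text)))
    report = {}
    for column in rows[0].keys():
        values = [row[column] for row in rows]
        present = [v for v in values if v not in ("", None)]
        summary = {"missing": len(values) - len(present)}
        try:
            numbers = [float(v) for v in present]
        except ValueError:
            numbers = []  # non-numeric column: report missing values only
        if numbers:
            summary["min"] = min(numbers)
            summary["max"] = max(numbers)
            summary["mean"] = statistics.mean(numbers)
        report[column] = summary
    return report


report = profile_csv(SAMPLE_CSV)
for column, summary in report.items():
    print(column, summary)
```

Even a tiny report like this tells you which columns have gaps and whether the numeric ranges look plausible before any real analysis starts.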

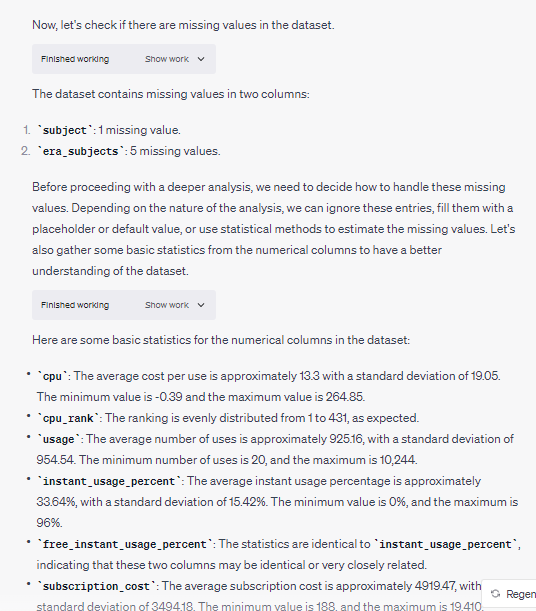

My prompt is the sloppy "compare cpu vs subscribe or not", and it correctly determines what I want and chooses to visualize the result using a box plot (a very reasonable choice). The explanation is reasonable and correct as well.

Let's push this to see if it really understands what it is analysing.

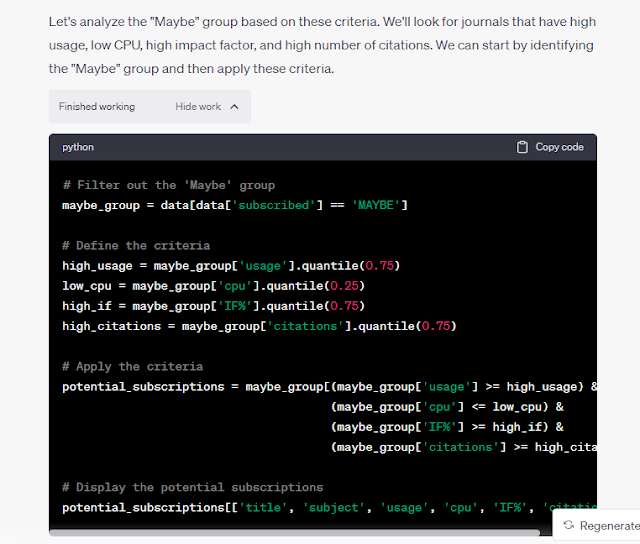

My prompt - "Consider the Maybe group, are there any particular journals you think might be worth subscribing to. Explain why?"

It independently comes up with four reasonable criteria and the reasons for them:

Usage: If a journal is frequently used, it might be worth subscribing to, as this indicates high demand.

Cost Per Use (CPU): If the CPU of a journal is low, it might be more cost-effective to subscribe to it rather than pay per use.

Impact Factor (IF%): If the journal has a high impact factor, it might be considered more valuable as it is likely to contain high-quality, impactful research.

Citations: If a journal is frequently cited, it might be considered more influential and therefore worth subscribing to.

It also gives a nice disclaimer on why these four criteria may not be enough.

Of course, library professionals reading this might consider other factors, particularly the amount of Open Access, but if you query GPT4 it clearly "understands" that those can be used too, and it will happily use them if you ask.

That said, I might quibble with the need to have both "Usage" and "Cost per Use" and perhaps use only cost per use. But I guess that if usage is high enough, you may still want to subscribe even if the cost per use is high.

GPT4 tries to find journals that match all four criteria, that is, journals in the "Maybe" category that have "high usage", "low cost per use", "high impact factor" and "high citations".

But what does "high" or "low" mean? Sometimes GPT4 might explain, but in this case it does not. You can ask of course, but how do you know it isn't simply making up an account of what it is doing? That's why you can, and should, peek at the "working" in the code...

Looking at the actual code, you can see exactly what the criteria are, e.g. "high" is the 75th percentile. But is the code doing what it should be? The fact that it executes doesn't mean it isn't doing it "wrong" (or at least not the way you expected)! I'll leave this as an exercise for the reader.... :)

For example, based on my meager python skills it looks like the analysis is calculating quartiles only using data from the "maybe" group. Does this make sense? Or should you calculate percentiles based on all journals? Either way looking at the code helps clarify what is happening.
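
To see why this choice matters, here is a small sketch with made-up numbers (not the UnSub data, and not GPT4's code) that computes the 75th percentile of usage twice: once within the "Maybe" group only, and once across all journals. It uses `statistics.quantiles` with `method="inclusive"`, which interpolates linearly between data points; as far as I know this matches what pandas does by default, but treat that as an assumption.

```python
import statistics

# Hypothetical usage counts; "group" mimics a subscribe/maybe/cancel label.
journals = [
    {"title": "A", "group": "Yes", "usage": 900},
    {"title": "B", "group": "Yes", "usage": 700},
    {"title": "C", "group": "Maybe", "usage": 300},
    {"title": "D", "group": "Maybe", "usage": 200},
    {"title": "E", "group": "Maybe", "usage": 100},
    {"title": "F", "group": "No", "usage": 20},
]


def upper_quartile(values):
    """75th percentile with linear interpolation between data points."""
    return statistics.quantiles(values, n=4, method="inclusive")[2]


def high_usage(rows, threshold):
    """Titles whose usage is at or above the threshold."""
    return [row["title"] for row in rows if row["usage"] >= threshold]


maybe = [row for row in journals if row["group"] == "Maybe"]

# Threshold computed from the Maybe group only.
q3_maybe_only = upper_quartile([row["usage"] for row in maybe])
# Threshold computed from every journal in the file.
q3_all = upper_quartile([row["usage"] for row in journals])

print(q3_maybe_only, high_usage(maybe, q3_maybe_only))
print(q3_all, high_usage(maybe, q3_all))
```

With these invented numbers the within-group threshold is 250 and journal C counts as "high usage", while the all-journals threshold is 600 and nothing in the Maybe group qualifies. Same criterion, very different shortlist.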

It then finds that the criteria are too strict: no journal meets all four criteria of high usage, low cost per use, high impact factor and high citations. It tries loosening this to three out of four, to no avail...

GPT4 -Code-Interpreter plays Research Data Management

Besides playing data analyst, what else can you do with Code-Interpreter? One of the questions we faced at our institution when we implemented a Research Data Repository was how much checking of data quality we would do for data deposits. It was extremely time-consuming and often required domain expertise to evaluate and understand the data deposited. Even for relatively small and straightforward datasets it would take time to look through the different files etc.

Could GPT4-Code Interpreter do some of it? Let's see.

First off, GPT4-Code Interpreter allows you to upload not just csv but also scripts and even zipped files. So what I did was to get a zipped file of a dataset that was already deposited into our data repository and then load it into GPT4.

Interestingly, it initially ran into problems trying to unzip the file, complaining about leading and trailing spaces in the folder names. It managed to fix this, though, which is typical of code-interpreter.
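
I did not save the exact workaround it used, but one plausible way to handle such an archive is to extract files one at a time and strip the whitespace from each folder and file name on the way out. The sketch below is my own illustration under that assumption; `clean_name` and `extract_with_clean_names` are hypothetical helper names, and the archive is built in memory just for the demo.

```python
import io
import tempfile
import zipfile
from pathlib import Path, PurePosixPath


def clean_name(member_name):
    """Strip whitespace from each path component; drop root and '..' parts."""
    parts = (part.strip() for part in PurePosixPath(member_name).parts)
    return "/".join(p for p in parts if p and p not in ("/", ".", ".."))


def extract_with_clean_names(archive, target_dir):
    """Extract files one by one, writing each to its cleaned-up path."""
    written = []
    for info in archive.infolist():
        relative = clean_name(info.filename)
        if info.is_dir() or not relative:
            continue
        destination = target_dir / relative
        destination.parent.mkdir(parents=True, exist_ok=True)
        destination.write_bytes(archive.read(info))
        written.append(destination.relative_to(target_dir).as_posix())
    return written


# Build a small in-memory archive with stray spaces in the folder names.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("data /cleaned.csv", "id,score\n1,5\n")
    archive.writestr(" others/notes.txt", "hypothetical file")

with tempfile.TemporaryDirectory() as tmp, zipfile.ZipFile(buffer) as archive:
    extracted = extract_with_clean_names(archive, Path(tmp))
    print(extracted)
```

Renaming on extraction keeps the original zip untouched, which matters if the deposit itself is the record you are checking.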

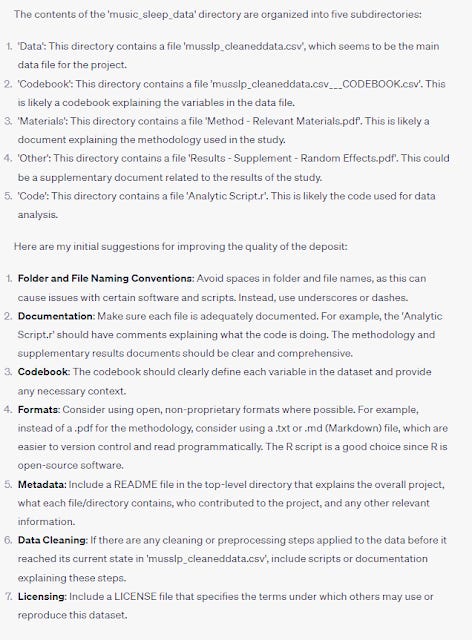

It then analyses all the unzipped files.

I haven't read this study, but it seems to be making reasonable statements, probably helped by the fact it does have access to the codebook.

The advice is what you would expect an RDM librarian to say (I'm sure it has had a ton of pretraining on numerous LibGuides), but I don't think it is specific to the dataset, given that it hasn't yet "looked into each file" (though #1 seems consistent with the problems it had unzipping, I'm not sure that's really the problem).

I particularly like point 6, which complains that neither the raw data nor the cleaning scripts used to produce the final dataset were provided.

In the next part, it tries to do exactly that, looking into each file.

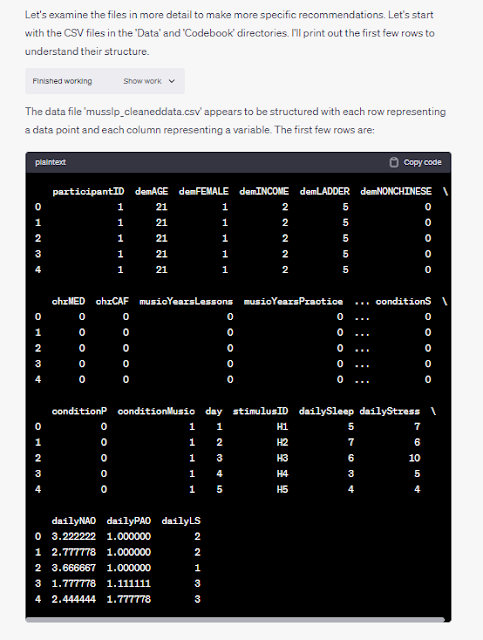

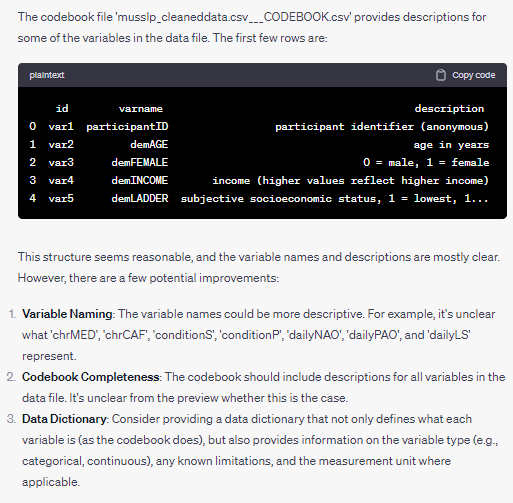

It first starts with the csv files representing the data file (musslp_cleaneddata.csv) and the codebook (musslp_cleaneddata.csv___CODEBOOK.csv).

The complaint on Variable names not being descriptive looks spot on to me. Same for having more information in the Codebook.

Its inability to tell if the Codebook is complete is disappointing. This is because, due to the number of columns, the preview doesn't show all of them, but I would expect GPT4 to be able to just expand the preview and look (it probably can if you ask). In some other, smaller datasets I tried, it could reconcile the columns of the data file with the data dictionary/codebook.
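
Reconciling a data file with its codebook is mechanically simple, which is why I expected it to manage. A minimal sketch, assuming the codebook is a csv with one row per variable and a column holding the variable name (the `variable` field name and the tiny sample files are invented, not taken from the actual deposit):

```python
import csv
import io

# Hypothetical miniature data file and codebook; the real files are much wider.
DATA_CSV = "id,age,q1,q2\n1,34,5,2\n"
CODEBOOK_CSV = (
    "variable,description\n"
    "id,Participant ID\n"
    "age,Age in years\n"
    "q1,Item 1\n"
    "q3,Item 3\n"
)


def compare_columns(data_text, codebook_text, name_field="variable"):
    """Return (columns missing from the codebook, codebook entries with no column)."""
    data_columns = next(csv.reader(io.StringIO(data_text)))
    documented = [row[name_field] for row in csv.DictReader(io.StringIO(codebook_text))]
    undocumented = [c for c in data_columns if c not in documented]
    unused = [v for v in documented if v not in data_columns]
    return undocumented, unused


undocumented, unused = compare_columns(DATA_CSV, CODEBOOK_CSV)
print(undocumented, unused)
```

Because it reads only the header row and the codebook, a check like this does not depend on how many columns a preview happens to show.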

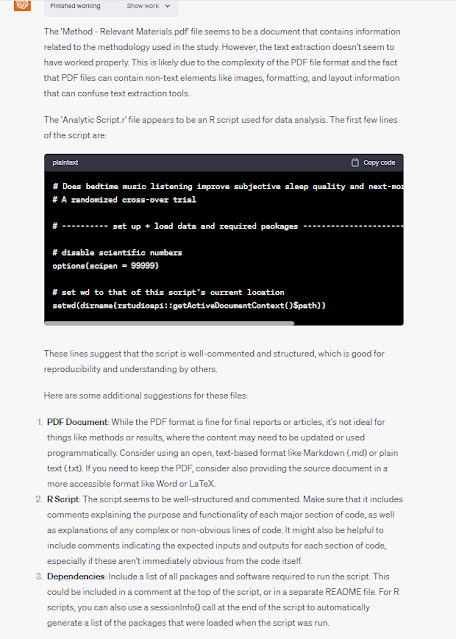

Next, it tries to look at the PDF file (Relevant Materials.pdf) and the R script (Analytic Script.r).

Here it starts to fall down a bit.

It would be nice if the code-interpreter sandbox could run R as well as Python. As it can't, it only gives generic advice on the R script and its dependencies. It does say the R script is "well-structured and commented", but I'll leave it to you, dear reader, to see if this is true.

Sadly, its attempts to extract text from Relevant Materials.pdf seem to have mostly failed.

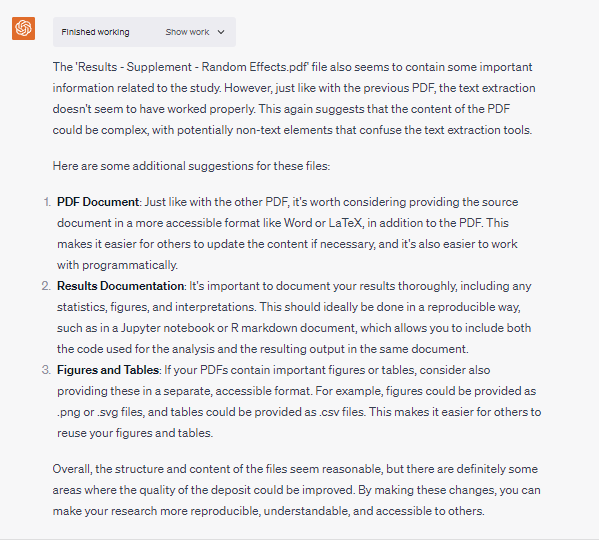

Finally, it tries to look at the last PDF file (Results - Supplement - Random Effects.pdf) in the others folder.

Again, it struggles and mostly fails to extract text from the PDF.

The general advice it gives is fine, though it strikes me as quite demanding, as few researchers ever release documentation and data at that level of openness and reproducibility.

In an ideal world, if a dataset contained all the things it asked for and the scripts were in Python, it might be possible for GPT4 to check for inconsistencies. For example, it could run the preprocessing script on the raw file, followed by the analytical script, and cross-check whether the output lines up with the final data.

It could also compare the figures and tables produced (particularly if available in markdown) to see if they line up with the final data etc.

Another potential use, which for obvious reasons I did not try out here, is to use such tools to anonymize data or at least flag personally identifying information.
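
To give a flavour of what the simplest form of "flagging" could look like, here is a deliberately naive sketch that scans rows for things shaped like email addresses or phone numbers. The patterns and sample rows are my own invention; real personally identifying information is far more varied, so this would only ever be a first pass, not an anonymization tool.

```python
import re

# Deliberately simple patterns; they will miss many cases and raise false alarms.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s-]{6,}\d"),
}


def flag_pii(rows):
    """Yield (row number, column, kind) for every cell matching a pattern."""
    for row_number, row in enumerate(rows, start=1):
        for column, value in row.items():
            for kind, pattern in PII_PATTERNS.items():
                if pattern.search(str(value)):
                    yield row_number, column, kind


# Hypothetical rows, as they might come out of csv.DictReader.
rows = [
    {"id": "1", "comment": "contact me at jane@example.org"},
    {"id": "2", "comment": "no identifying details here"},
    {"id": "3", "comment": "call +65 6123 4567 after 5pm"},
]

flags = list(flag_pii(rows))
print(flags)
```

A check like this only points a human at cells worth a second look; deciding what to redact still needs judgement.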

Limitations of GPT4 and Code Interpreter

As you try it out more you will notice many limitations of this feature.

First and foremost, GPT4 and the code sandbox have no access to the internet. Why is this an issue? The code sandbox given to GPT4 has some preinstalled Python packages, but not all of them, and not even all of the most common ones, and you can't install new packages or update existing ones.

Worse, GPT4 itself is not given knowledge of its own Python environment, nor does it know that it can't access the net.

So, for example, when you ask it to do a task such as text sentiment analysis, it might confidently decide to use spaCy and, quite reasonably, try to pip install it. Normally this would work, but OpenAI has blocked GPT4 from installing additional Python packages from the internet, so it fails.
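
One way to avoid this guessing game is to check which packages are actually importable before choosing an approach, and you can ask GPT4 to run something like this first. A minimal sketch using the standard library's `importlib.util.find_spec`; the candidate list is only an example, and `json` is there purely as a placeholder that always exists.

```python
import importlib.util


def first_available(candidates):
    """Return the first top-level module name that can be imported, or None."""
    for name in candidates:
        if importlib.util.find_spec(name) is not None:
            return name
    return None


# Hypothetical preference order for a sentiment task; "json" is only a
# standard-library placeholder that is always present.
preferred = ["spacy", "textblob", "nltk", "json"]
print(first_available(preferred))
```

What this prints depends entirely on the environment it runs in, which is exactly the information GPT4 is missing when it starts.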

This confuses it, and it will instead try an alternative approach. Sometimes I will see it try multiple approaches before finally hitting on an approach that uses preloaded dependencies and does not need to install additional components from the net! This makes me feel so sorry for it.

Other limitations, as already mentioned, are that it can only run Python and not other languages, and that there's a limit of 100 MB on uploaded files, though you can skirt it a bit by uploading a zip file.

Lastly, any files you upload get purged by the system very quickly. It is too much to expect the data files you upload to persist between sessions but even within the session my experience is that if you leave it idle a bit, it will "forget" all the datasets you uploaded and you need to reload the files again!

Conclusion

Of the two roles played, I suspect the latter, where it helps check research data quality, will be of greater use. Self-checking of research data outputs and improving the quality of open data are things everyone agrees should be done but that are time-consuming to do. This seems like the perfect use of AI.

Using AI alone to make budgeting decisions without any human input strikes me as fragile and vulnerable to being out-strategized by publishers, who have the advantage of superior resources in these areas.

That said, due to the potential confidentiality of research data, this use will become more popular as Large Language Models become more amenable to being run locally on researchers' own machines. Such checks should ideally be done by researchers on their own machines before they submit the research data to repositories, but there might be an additional check at the repository.

As a librarian, I think this gives me even more reason to keep my Python skills sharp, or at least maintain a base level of skill, so that I can understand what GPT4 is doing. If you don't know any Python, now is the time to start!

Further sites and pages to see what others have done

Latent Space podcast - Code Interpreter == GPT 4.5 - very long article and podcast transcript, worth reading to get into the weeds.

Ethan Mollick - as usual ahead of the curve on showing what you can do with code interpreter

Additional details

Identifiers

- UUID: 3709b861-1ba6-49be-9e2c-85bb0332afb1

- GUID: 164998120

- URL: https://aarontay.substack.com/p/gpt4code-interpreter-playing-electronic

Dates

- Issued: 2023-08-06T20:18:00

- Updated: 2023-08-06T20:18:00