Why use of new AI enhanced tools that help with literature review should be discouraged for undergraduates

I have a controversial and perhaps somewhat surprising (to some) view. I believe that new AI-enhanced tools that help by substantially doing the literature review itself should not be used (or encouraged) by undergraduates, particularly freshmen with little or no experience doing literature review the normal way.

While this might sound like gatekeeping, I think there are real and serious reasons to consider this viewpoint.

Try this argument: just as we don't let kids use calculators before they learn arithmetic, we shouldn't let undergrads who have no research experience at all jump to using AI tools that essentially do large parts of the literature review for them.

Even this analogy understates the case. Unlike calculators, which are mature tools that we fully understand and know how to work with, the current "AI tools" used to help with literature review are newly emerging, cutting-edge tools that everyone, even experienced researchers, is still trying to get to grips with.

So a better analogy would be making novices use the very first prototypes of electronic calculators!

We certainly do not want novices to use such tools!

A more nuanced position

As a sidenote, I know that in certain quarters I might be seen as someone who blogs a lot about such tools and promotes hype, a view that I think is not without merit, as I can be overly enthusiastic about the potential of such tools and have invested a lot of energy in testing and understanding them.

I am certainly not in the "Resist gen AI" camp, who totally object to Gen AI applications for reasons such as ethical concerns relating to copyright or environmental impact. While I do share pragmatic concerns about them (e.g. bias, inaccuracy), I do think that experienced researchers who are forewarned can learn to use these tools to great effect. (See also the later discussion on answer engines and whether such technologies should be used in search engines.)

What people might not realize is that I study these tools because I am curious and want to be prepared for the future, and I write up my thoughts and findings in this blog for fellow librarians. In my day job, I only talk about and show these tools to people who have some research experience, such as PhD and research students.

I believe that users who already know how to do research the usual way are better primed to understand the weaknesses of such new tools. They are generally knowledgeable and mature enough not to take such tools at face value. They have enough experience to tell a good literature review from a bad one and, more importantly, typically have enough domain knowledge in at least one area to test the AI tool on...

Without such foundational knowledge it can be hard to appreciate what the issues are....

It is possible that a time will come when educators know how to work with such tools and teach them even to novices, but for now I would say many of us are still figuring them out...

What follows is a more detailed account of the different types of AI tools that assist with literature review and why I think they can be very tricky, even dangerous, to use if you don't already have some background in doing formal literature review.

Main types of "AI" in literature review search

But "AI" is a broad term. In theory it could encompass search engines like Google, Google Scholar and traditional databases. Surely I am not objecting to novice researchers using them, right?

To develop this argument further, we need to talk about the typical ways in which "AI", or more specifically the improved natural language processing and natural language generation capabilities of Transformer-based models, is affecting literature review tools.

1. "Answer engines"

These are essentially search engines that use retrieval augmented generation (RAG) to extract and generate answers with citations directly from the relevant papers/text chunks found. Some have called these "answer engines" instead of search engines because, instead of just showing links to documents or papers that might be relevant, they attempt to extract and paraphrase answers from the top results found.
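To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the three-item corpus and its citation keys are made up, retrieval is plain word overlap rather than a real search index, and `generate_answer` is only a stand-in that stitches the retrieved text together where a real answer engine would call an LLM to paraphrase it.

```python
# Hypothetical mini-corpus of (citation key, text chunk). These are not real papers.
CORPUS = [
    ("Lee2021", "retrieval practice improves long term retention in students"),
    ("Tan2019", "sleep deprivation reduces working memory performance"),
    ("Ng2020", "spaced retrieval practice helps students retain vocabulary"),
]


def tokenize(text):
    """Lowercase whitespace tokenizer (deliberately naive)."""
    return text.lower().split()


def retrieve(query, corpus, k=2):
    """Retrieval step: rank chunks by how many of their words appear in the query."""
    q_terms = set(tokenize(query))
    scored = []
    for key, chunk in corpus:
        overlap = sum(1 for tok in tokenize(chunk) if tok in q_terms)
        if overlap > 0:
            scored.append((overlap, key, chunk))
    # Highest overlap first; ties broken alphabetically by citation key.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(key, chunk) for _, key, chunk in scored[:k]]


def generate_answer(query, retrieved):
    """Generation step (stand-in for an LLM): join retrieved text with citation markers."""
    if not retrieved:
        return "No relevant sources found."
    return "; ".join(f"{chunk} [{key}]" for key, chunk in retrieved)


def answer(query):
    """Full pipeline: retrieve first, then generate only from what was retrieved."""
    return generate_answer(query, retrieve(query, CORPUS))


if __name__ == "__main__":
    print(answer("does retrieval practice help students"))
```

Because the citations come from the retrieval step, they always point to something that exists in the corpus. Nothing in this pipeline checks that the generated sentence is actually supported by the cited chunk, which is where a real LLM paraphrase can go wrong.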

As covered in this blog, they are extremely common now. Many people would be aware of general web search answer engines like Perplexity.ai, Bing Chat, You.com and more. Even OpenAI's ChatGPT now has a search mode called ChatGPT search (initially dubbed SearchGPT).

Below shows an example of ChatGPT with search turned on.

Similarly, on the academic search side, we have academic answer engines from startups such as elicit.com, Consensus.ai, Scite.ai assistant and many more, while established academic search vendors are following suit with Scopus AI, Web of Science Research assistant, Primo Research Assistant and many more. (See my list here)

Above shows a typical academic "answer engine" - Primo Research Assistant. I won't belabour how RAG works, as I have written a lot about this in this blog.

Similarly, I have written quite a bit about how tricky such tools are to use, chiefly that even though RAG ensures the cited paper isn't fictional, it might still misattribute or misinterpret the paper, such that the generated statement is still not properly supported.

I typically like to refer to the preprint - Evaluating Verifiability in Generative Search Engines or the brief report from HKUST - Trust in AI: Evaluating Scite, Elicit, Consensus, and Scopus AI for Generating Literature Reviews to support these points.

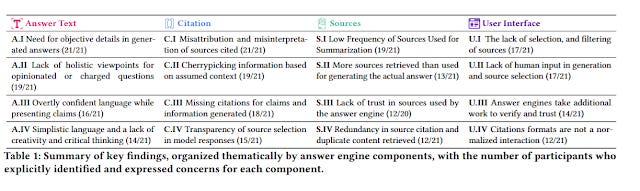

A more recent paper - Search Engines in an AI Era: The False Promise of Factual and Verifiable Source-Cited Responses - provides a formal study of some of the issues of answer engines. The authors had experts in specific domains use answer engines and critique the answers generated, then classified the critiques into different issues relating to answer text, citation, sources and user interface.

Granted, this was done over generalist web search answer engines like Perplexity.ai, Bing Chat/Copilot and you.com, and a few of the issues identified might be less serious with an academic answer engine. For example, "S.III Lack of trust in sources used by the answer engine (12/20)" would definitely be lessened by, say, Scopus AI, which only cites top indexed journals, and/or Scite.ai assistant, whose retrieval is weighted towards highly cited papers. Still, most of them apply and are likely not new to you if you have been paying attention to this class of tools.

Hardcore arguments about use of AI/LLM in Search

Beyond such specific concerns, at a higher level there are concerns that information retrieval as a field is rushing to embed LLMs into search without fully understanding the implications.

The 2021 paper - Rethinking search: making domain experts out of dilettantes - probably started the hype on using LLMs as answer engines (though it suggests moving away from retrieval as a separate step). It received pushback from papers such as 2022's Situating Search (and 2024's Envisioning Information Access Systems: What Makes for Good Tools and a Healthy Web?), which question not just the technical issues but also whether such systems, even if they work, are what searchers want, and which argue for the importance of different types of systems to serve different searcher needs.

A lot of these arguments are made by the camp centred on Emily Bender, who together with others, e.g. Timnit Gebru, is famed for the paper - On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Besides some of the drama around the paper that I won't go into, this paper also popularized the term "Stochastic Parrot".

While this paper popularized the phrase, it spends less than a page discussing why they call it a parrot. I find Bender's earlier paper - "Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data" - a much better articulation of why she thinks LLMs like GPT3 are just parrots with no understanding of meaning (e.g. the octopus test).

But even that paper reads to me as less definite than the way people throw it around. It seems to say only that LLMs trained on the predict-the-next-token task can't actually learn to understand because there is no reference to the real world. But she goes on to suggest that training on grounded data might work, giving examples like "dialogue corpus with success annotations.." or photos with annotations. Some of this is, I believe, very similar to how modern LLMs like GPT3.5 and 4 are trained: they support multiple modalities and use supervised fine-tuning and RLHF, which relies on annotations by humans ranking answers.

In my view, arguments about search+AI fall into the following categories:

1. Arguments that do not assume RAG, stating that these "chatbots" (without RAG) don't give citations to check

2. Arguments that "answer engines" are not the right tools all the time - Agreed, you don't always want a single answer, sometimes you want to explore, learn etc.

3. Arguments about how RAG systems might be misused, e.g. people won't check citations for accuracy, and paradoxically the more accurate the systems are the less we will check

4. Arguments about how LLMs don't really "understand".

5. Arguments about how LLMs are environmentally unfriendly and/or are biased, toxic etc.

Point 1 is of no interest to me as I feel RAG systems are clearly the only ones worth discussing if we want to talk about search and it totally invalidates the "no citation" point.

Point 2 I agree that you don't always want a direct single answer, and there are varying information needs, but why do we jump from this agreement to "therefore we should never use answer engines"? Why can't we learn to use different tools?

Point 3 is not really a fundamental point and is more of an empirical question. Do people check citations from RAG? What is the accuracy rate of these systems really, and is it very different from a human's (do they make the same kinds of misinterpretations)? Could very careful use and careful UI design help mitigate the issues? At this point, it's too early to tell.

Point 4, I feel, is closer to a definition problem and, as noted above, I am not sure that an argument saying LLMs trained on predict-the-next-token or fill-in-the-blank tasks cannot "understand" holds when current LLMs go beyond that by training on more grounded data.

Point 5, I feel, is an interesting one, though the environmental impact is hard to pin down and the cost will likely drop anyway.

2. AI writing tools

Another type of tool that I rarely cover on my blog but that is slowly gaining popularity is the AI writing tool, such as Keenious, SciSpace AI writer, Jenna.ai and even OpenAI's GPT4o with Canvas. As you will see later, in some sense these tools are even trickier.

Typically these tools can generate text and citations based on what you have already typed. More importantly, you can highlight some text you want and ask the AI to help you expand the section, explain more or less, or basically anything you can ask in natural language.

Most interestingly, you can select some text and ask for a suitable citation!

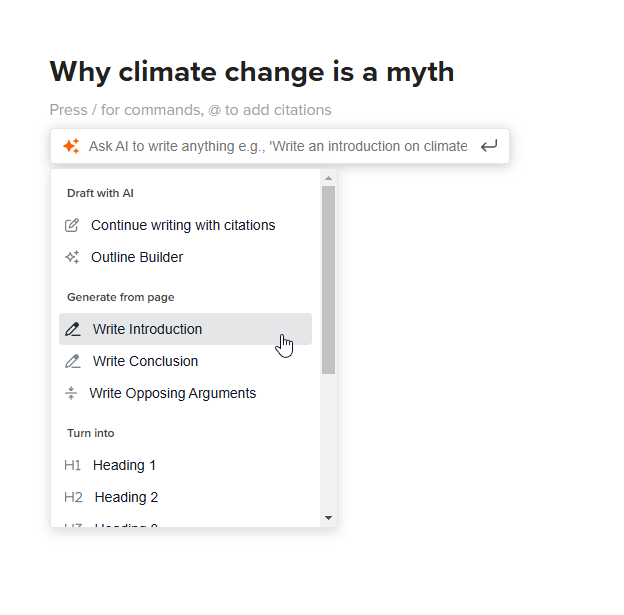

Below is me using SciSpace AI writer (Jenna.ai is somewhat similar), where I typed out a sentence and asked it to write an introduction.

Not happy with anything it has written? You can highlight the parts and use default options to

Here is the draft after generating even more text using the AI writer's "continue writing with citations" feature.

Are the citations generated fictional/fake? It depends on the system. Many use a variant of retrieval augmented generation, so the cited paper is definitely real, but as always the claims in the generated text might be misattributed to an existing paper.

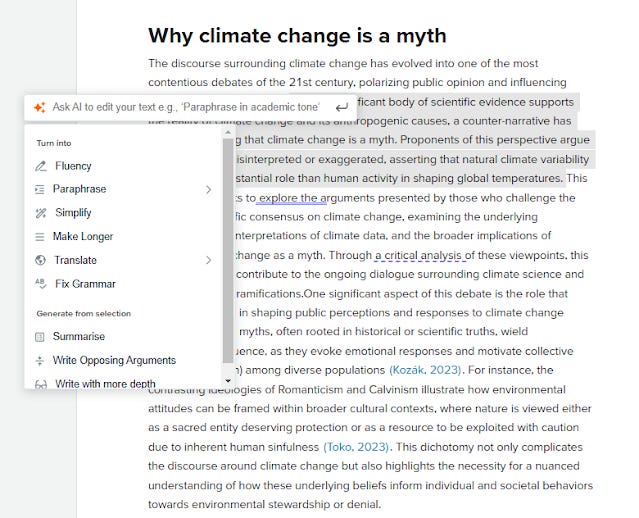

I then highlighted text that I was not satisfied with and used the default options to make it longer. I could also have typed in natural language how I wanted that section to change.

In the example below, I highlighted a sentence and asked for suggested citations from SciSpace

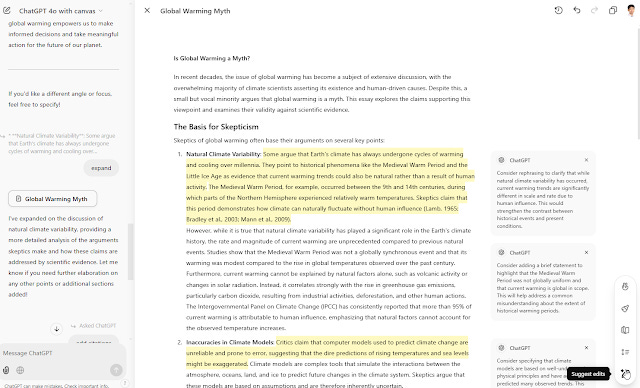

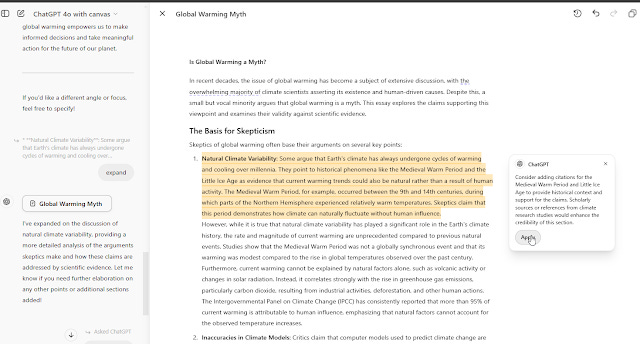

Recently OpenAI introduced a Canvas feature, which can be used in a similar way: you can highlight chunks of generated text and ask ChatGPT to amend them however you want.

More interestingly, you can even ask ChatGPT to "suggest edits". Like a human editor adding comments to text chunks, it suggests things to do, and if you agree you can click on each comment and select "apply" to make the change.

As with SciSpace AI writer, you can of course also ask for suitable citations to be added to selected text.

Why are these tools tricky to use or even bad?

One of the major issues with these tools is that they do automatic citation recommendation. More specifically, they act as what the technical literature calls a "local citation recommender" as opposed to a "global citation recommender".

The former recommends citations based on the citation statement or text in the writing, while the latter recommends citations based on other similar papers. Some of you may recognise that tools like Connected Papers, ResearchRabbit etc. fall into the latter group.
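A toy sketch may help show what "local" means here. The Python below is only an illustration under loud assumptions: the abstracts and their labels are invented, and matching is naive word overlap, whereas real tools use far more sophisticated models. The point it tries to make is that the only input is the sentence you have written.

```python
# Hypothetical abstracts keyed by made-up labels. These are not real papers.
ABSTRACTS = {
    "PaperA": "satellite measurements show the earth is not flat but an oblate spheroid",
    "PaperB": "survey of public belief that the earth is flat",
    "PaperC": "protein folding predicted with deep learning",
}

# Small illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "is", "a", "an", "of", "that", "but", "with"}


def content_terms(text):
    """Lowercased words of the text, minus stopwords."""
    return {word for word in text.lower().split() if word not in STOPWORDS}


def recommend_local(sentence, abstracts, k=2):
    """Local recommender: suggest papers whose abstracts share words with the sentence."""
    terms = content_terms(sentence)
    scored = []
    for key, abstract in abstracts.items():
        score = len(terms & content_terms(abstract))
        if score > 0:
            scored.append((score, key))
    # Highest overlap first; ties broken alphabetically by label.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [key for _, key in scored[:k]]


if __name__ == "__main__":
    print(recommend_local("The earth is flat", ABSTRACTS))
```

In this toy data the top suggestion for "The earth is flat" is PaperA, whose abstract says the opposite. A recommender that matches on the wording of a claim can hand you a citation that looks on-topic without telling you whether the paper actually supports what you wrote.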

"Automated citation recommendation tools encourage questionable citations", a 2022 paper, first sounded the alarm. (See also the blog post - Use with caution! How automated citation recommendation tools may distort science). They write:

the aim of Citation recommendation tools is to automate citing during the writing process. They directly provide suggestions for articles to be referenced at specific points in a paper, based on the manuscript text or parts of it. These suggestions are based on the literature, or the tools' coverage of the literature to be precise, rather than the researcher's catalogue.

They contrast this with "paper recommendation tools", where

based on specific search terms, the tools recommend relevant literature that covers these subjects. These papers are usually collected with the intention to subsequently be read, either in full or at least in part.

I have argued that their definition of "paper recommendation tools" seems to cover search engines only, which is unnecessarily narrow, and that it should be expanded to also cover what the literature calls "global citation recommenders", which can recommend papers based on "seed papers" (or known relevant papers).

I won't summarise the whole paper, but they claim heavy use of citation recommendation tools may encourage questionable citations due to

Perfunctory citation and sloppy argumentation

Affirmation bias

They also worry about a new Matthew-effect due to algorithmic suggestions and the lack of transparency of such algos.

I am less swayed by these last two worries. The current status quo is no better, as the algos of popular tools like Google Scholar are totally unknown, and the worry about a new Matthew-effect bias can be lessened as long as there is a wide variety of recommender tools, none of which dominates, each with a different algo (or a way for users to select different options).

However, I am certain the worries about perfunctory citation, sloppy argumentation and affirmation bias from using such tools are valid. When I was working with undergraduate freshmen on their first papers, they approached their essays by deciding on their argument first and then hunting for references to support it! I remember students telling me that a certain piece of work must exist showing the exact point they wanted to make, even though they had never seen it before.

To be fair, even experienced researchers occasionally do this and put a claim in a sentence before retrospectively looking for a citation, but they typically already know such a paper exists and have just forgotten the exact reference. Also, while you can always search Google Scholar to find a paper that supports the point you want, AI writing tools that recommend citations make it way easier to find what you need.

In fact, I have found that if you are determined enough, such AI tools can even help you find citations that support the statement that the earth is flat, or that climate change is a myth etc.

Even if that was not an issue, trusting the AI's summary of a paper is something that is quite dangerous to do without checking as the AI might be subtly or totally off.

3. AI search tools with improved relevancy or recommend papers based on your seed papers or use semantic search and agent-like search tools

I have talked about what I call citation-based mapping services like Connected Papers, ResearchRabbit and Litmaps, which use citation-based or network-based metrics to suggest papers that are similar or possibly relevant to the "seed papers" you input.

Below is an example of how Connected Papers recommends papers based on a single paper I entered as the seed paper. The algo calculates a similarity metric based on co-citations and bibliographic coupling.
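For readers unfamiliar with the two measures, here is a minimal sketch in Python. The citation data is invented, and combining the two counts by simple addition is my own assumption for illustration; it is not Connected Papers' actual formula, which I have not seen published in that form.

```python
# Hypothetical citation data: paper -> set of papers it cites. Not real papers.
REFERENCES = {
    "Seed": {"R1", "R2", "R3"},
    "P1": {"R1", "R2", "R4"},
    "P2": {"R5"},
    "P3": {"Seed", "P1"},
    "P4": {"Seed", "P1", "P2"},
    "P5": {"Seed", "P2"},
}


def bibliographic_coupling(a, b, refs):
    """Number of references that papers a and b have in common."""
    return len(refs.get(a, set()) & refs.get(b, set()))


def co_citation(a, b, refs):
    """Number of papers whose reference lists contain both a and b."""
    return sum(1 for cited in refs.values() if a in cited and b in cited)


def rank_similar(seed, refs):
    """Rank other papers by coupling + co-citation (simple sum, an illustrative assumption)."""
    candidates = set(refs) | set().union(*refs.values())
    candidates.discard(seed)
    scores = {
        c: bibliographic_coupling(seed, c, refs) + co_citation(seed, c, refs)
        for c in candidates
    }
    ranked = sorted(
        ((score, paper) for paper, score in scores.items() if score > 0),
        key=lambda pair: (-pair[0], pair[1]),
    )
    return [(paper, score) for score, paper in ranked]


if __name__ == "__main__":
    print(rank_similar("Seed", REFERENCES))
```

In this toy data, P1 is surfaced both because it shares references with the seed (coupling) and because later papers cite the two together (co-citation), while P2 is surfaced through co-citation alone. Note that co-citation needs other papers to have cited the candidate, which is one way to see why such tools lean towards already cited work.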

I do not see any concerns if researchers, even novice ones, use such tools as a supplement to keyword searches (except for the bias of such tools towards finding more highly cited papers).

Similarly, engines that use semantic search with dense embeddings (or hybrid search that also uses sparse embeddings), as opposed to lexical keyword searching, are in principle, at least from the user's point of view, no different from standard keyword-based search engines.

While some doubt whether transformer-based models, e.g. BERT-type models, truly "understand" language, I see no practical objection to researchers of all levels using them either (except for concerns about transparency, as they are more of a black box in terms of understanding why a document is matched compared to keyword searches).
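To illustrate the difference between lexical and dense matching, and why the latter is harder to explain, here is a small Python sketch. The three-dimensional word vectors are hand-made for this example and purely hypothetical. Real systems learn embeddings with hundreds of dimensions from a trained model such as a BERT-type encoder, and they do not simply average word vectors as I do here.

```python
import math

# Hand-made toy vectors (an assumption for illustration, not learned embeddings).
WORD_VECTORS = {
    "heart": (1.0, 0.0, 0.0),
    "attack": (0.8, 0.2, 0.0),
    "myocardial": (0.9, 0.1, 0.0),
    "infarction": (0.9, 0.1, 0.0),
    "stock": (0.0, 0.0, 1.0),
    "market": (0.0, 0.1, 0.9),
    "crash": (0.0, 0.3, 0.7),
}
DIM = 3


def lexical_score(query, doc):
    """Keyword matching: number of words the query and document share."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def embed(text):
    """Toy dense embedding: average the vectors of the known words in the text."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return (0.0,) * DIM
    return tuple(sum(v[i] for v in vectors) / len(vectors) for i in range(DIM))


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / norm


def dense_score(query, doc):
    """Semantic matching: similarity between the query and document embeddings."""
    return cosine(embed(query), embed(doc))


if __name__ == "__main__":
    for doc in ("myocardial infarction", "stock market crash"):
        print(doc, lexical_score("heart attack", doc), round(dense_score("heart attack", doc), 3))
```

With keyword matching you can always point to the shared words that caused a hit. With the dense score, the query "heart attack" matches "myocardial infarction" without sharing a single word, and the only explanation available is that two lists of numbers point in a similar direction.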

Of course, all the above assumes you are doing a narrative review. A systematic review would raise more concerns about transparency.

So far, the tools I have mentioned are in principle no different from using a traditional search engine or database like Google Scholar at least from the user point of view (despite the fact that how they work under the hood is different).

Finally, we come to the latest trend of agent-based search.

Agent-based search tools like Undermind and PaperQA2 are different though.

They run multiple iterative searches, including citation searching, adapting to what was found.

This is a computationally expensive process and takes several minutes to run, but it typically gives you a far better quality search result (both in terms of recall and precision).
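The general pattern of searching, judging relevance, following citations and repeating can be sketched in a few lines of Python. To be clear, this is not how Undermind or PaperQA2 work internally. The paper index is invented, and `is_relevant` is a crude keyword check standing in for what would be an LLM judgement in a real tool.

```python
# Hypothetical toy index: paper id -> (title, ids of papers it cites). Not real data.
PAPERS = {
    "A": ("peer tutoring improves reading", ["B", "C"]),
    "B": ("cross-age tutoring and literacy outcomes", ["D"]),
    "C": ("school funding formulas", []),
    "D": ("paired reading with older students", []),
    "E": ("tutoring chatbots in mathematics", []),
}


def keyword_search(query):
    """One-shot keyword search: any paper whose title shares a word with the query."""
    terms = set(query.lower().split())
    return [pid for pid, (title, _) in PAPERS.items() if terms & set(title.split())]


def is_relevant(pid, topic_terms):
    """Stand-in for an LLM relevance judgement: does the title mention the topic?"""
    title, _ = PAPERS[pid]
    return any(term in title for term in topic_terms)


def agent_search(query, topic_terms, max_rounds=3):
    """Iterative search: keep relevant hits, then follow their citations and repeat."""
    found = set()
    frontier = keyword_search(query)
    for _ in range(max_rounds):
        new_hits = [p for p in frontier if p not in found and is_relevant(p, topic_terms)]
        if not new_hits:
            break  # nothing new and relevant, so stop early
        found.update(new_hits)
        # Citation searching: the next round examines what the new hits cite.
        frontier = [cited for p in new_hits for cited in PAPERS[p][1]]
    return sorted(found)


if __name__ == "__main__":
    print("keyword only:", keyword_search("peer tutoring"))
    print("agent search:", agent_search("peer tutoring", ["reading", "literacy"]))
```

In this toy run the plain keyword search returns A, B and E, while the iterative version drops the off-topic E and picks up D, which shares no words with the query and is reached only by following citations. Each extra round means more relevance judgements, which in a real tool are LLM calls, and that helps explain the running time.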

I am in two minds about the use of such agent-based search by novices. The results can be very, very good, and these tools are extremely easy to use because the iterative searching reduces the importance of the keywords used in the input. On the other hand, over-reliance on this type of tool could be dangerous if you never end up learning to do literature review yourself.

Why it is not so easy to walk away

Of course, it is not easy for educators and librarians to refuse to recommend or teach gen AI tools. As librarians we feel the pressure to justify our existence and to add value by guiding users in hot new areas.

Besides, a very dominant viewpoint is that these tools are already out there. Even if we don't recommend academic AI search tools, users are already using Perplexity, Bing Copilot etc. Not to mention that our existing database vendors and academic search engine vendors are determined to push these features out.

In such a world, where everyone has access to these tools, we would be doing our users a disservice if we did not try to train them to use these tools properly.

Of course, telling people that such tools exist is the easy part. Studying them deeply enough to understand them and be able to teach them is a totally different story.

Additional details

Identifiers

- UUID: a673097f-7ff4-4e06-922a-1d9b5290a773

- GUID: 164998085

- URL: https://aarontay.substack.com/p/why-use-of-new-ai-enhanced-tools-that

Dates

- Issued: 2024-11-18T18:34:00

- Updated: 2024-11-18T18:34:00