The rise of the "open" discovery indexes? Lens.org, Semantic Scholar and Scinapse

In 2015, Marshall Breeding, the guru of library technology and discovery, summarized the state of library discovery in a NISO white paper entitled "The Future of Library Discovery".

He described the state of play at the time, in which index-based discovery services were "entirely dominated by commercial providers", listing the usual suspects: Summon (owned by Proquest), Primo (Ex Libris, before its acquisition by Proquest), Ebsco Discovery Service and Worldcat Discovery Service.

In a fascinating section entitled "Open Access Global Discovery Service or Index", he discussed the possibility of "the creation of new index-based discovery services based on open source software and an open access index".

He lamented that while open alternatives for other aspects of library technology (such as open source discovery interfaces, library services platforms and knowledge bases) were starting to emerge, an "open access" central index did not seem likely in the near future.

His analysis of the difficulties, particularly in terms of technical complexity, probably still holds. Yet in the four years since he wrote this, we have seen the rise of many new discovery indexes that are built wholly or partly (e.g. Dimensions) on open data drawn from many sources, chief among them Crossref and Microsoft Academic Graph (MAG).

Breeding may well be right that such indexes are inferior to current commercial offerings (though one of them is trying to argue otherwise). These developments cover only metadata, so they lack the full text (though even that is slowly changing due to rising levels of open access) that the big four commercial indexes have built up over years of partnerships, not to mention the lead they have from years of refinement. Still, these new discovery indexes provide an interesting hint that the prospects for a community-based, open library discovery index aren't as hopeless as they seem today.

In this blog post, I will talk specifically about a very important source of data used by academic search engines, Microsoft Academic Graph (MAG), and briefly review four academic search engines that use MAG among other sources: Microsoft Academic, Lens.org, Semantic Scholar and Scinapse.

Rise of the open article data

In past posts such as "Of open infrastructure and flood of openly licensed data - are they good enough?" (or this updated 2019 talk), I noted the increasing availability of open metadata including

Title/abstract/author (e.g. Crossref, Datacite, Pubmed)

Author information (ORCID)

Subject headings (e.g. Pubmed, MAG)

Affiliation (ROR)

References (e.g. Crossref, OpenCitations, Wikicite)

Relationships between entities such as datasets to articles

Altmetrics (e.g. Crossref events data API)

Full text (e.g. JISC CORE, PMC)

OA status (e.g. Unpaywall etc)

In an earlier blog post, I wrote about the impact this has had on science mapping tools, but the more obvious use of such metadata is to construct discovery indexes. Indeed, circa 2018 an increasing number of indexes that combine these sources started to emerge, including Lens.org, Scilit and ScienceOpen.

Among the various sources, besides the trio of Crossref/DataCite/ORCID and Pubmed/PMC (for life sciences), a major player shaking things up in this space is Microsoft, with the entry of its Microsoft Academic Graph (MAG).

While the search engine Microsoft Academic, Microsoft's answer to Google Scholar, is very interesting in its own right, the most impactful thing about the project is the decision to make all the data (MAG) that its bots have crawled and harvested open (licensed ODC-BY) and free!

To understand the significance of this, remember that researchers have been asking for API access to Google Scholar data for ages to no avail, and Microsoft has gone ahead and released all the data it has collected for free (or for a minimal cost if you use Azure for hosting)!

But all this would be of little interest if MAG were limited in scope, and indeed it is not. While thorough, large-scale comparisons of the scope and completeness of Microsoft Academic data against other sources are still sparse (though see this), there is little doubt that Microsoft Academic is one of the largest and richest sources of open academic article data out there.

Relationship between Microsoft Academic Services (MAS), Microsoft Academic Graph and Microsoft Academic Knowledge Exploration Service (MAKES)

In a recently published article entitled "A Review of Microsoft Academic Services for Science of Science Studies" authored by the Microsoft research team, we get a look into the goals of the Microsoft Academic Services team as well as details of the system they are building.

The article is hard to summarize, but in short, Microsoft Academic Services (MAS) is a Microsoft Research project that aims to support researchers following the GOTO principle (Good and Open data with Transparent and Objective methodology), using state-of-the-art machine learning to extract knowledge from papers at scale.

They explain that their efforts consist of the following parts:

Microsoft Academic Graph (MAG) - The open dataset of Scholarly metadata extracted from the web by Microsoft Crawlers

Microsoft Academic Knowledge Exploration Service (MAKES) - "a freely available inference engine" which, as I understand it, among other things does network semantic reasoning and uses a "reinforcement learning system to learn a probabilistic measure called the saliency", which is used in rankings

Microsoft Academic - The search interface itself that shows the results from combining the first two components.

The paper is very technically dense, and my limited knowledge of machine learning probably means I don't fully understand it. But let me try anyway.

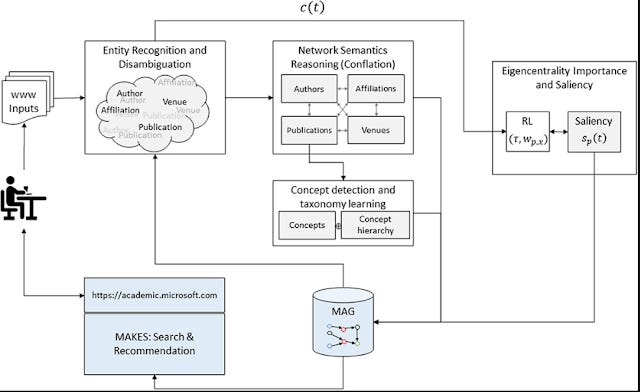

The figure shows a high-level view of the two feedback loops in the MAS system.

The paper goes into depth on how MAS does entity recognition and automatic concept extraction (into a hierarchy called "Field of study"), using "Network Semantics Reasoning" for conflation: "which is to recognize and merge the same factoids while adjudicating any inconsistencies from multiple sources. Conflation therefore requires reasoning over the semantics of network topology.."

The second major component of the system uses reinforcement learning to estimate a measure they call "saliency" (and the related "prestige", which is size-normalized), which seems to be somewhat similar to importance, impact or relevancy.

About Saliency

"In a nutshell, we use the dynamic eigencentrality measure of the heterogeneous MAG to determine the ranking of publications. The framework ensures that a publication will be ranked high if it impacts highly ranked publications, is authored by highly ranked scholars from prestigious institutions, or is published in a highly regarded venue in highly competitive fields. Mathematically speaking, the eigencentrality measure can be viewed as the likelihood that a publication will be mentioned as highly impactful when a survey is posed to the entire scholarly community. For this reason, we call the measure the saliency of the publication. Similarly, one can derive the saliency of an author, an institution, a field, and a publication venue as the sum of all saliecies of their respective publications (provided all authors contribute equally to the publication)."
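To make the last sentence of that quote concrete, here is a minimal Python sketch of rolling publication saliencies up to authors. It assumes each paper's saliency is split equally among its co-authors, which is my reading of "provided all authors contribute equally"; the input format and numbers are invented, and this is not MAS's actual code.

```python
from collections import defaultdict


def author_saliency(papers):
    """Aggregate paper saliencies into author saliencies.

    papers: list of (saliency, [author ids]) pairs (hypothetical format).
    Each paper's saliency is divided equally among its authors, so the
    total saliency is conserved when every paper has at least one author.
    """
    totals = defaultdict(float)
    for saliency, authors in papers:
        if not authors:
            continue  # nobody to credit
        share = saliency / len(authors)
        for author in authors:
            totals[author] += share
    return dict(totals)


papers = [(0.6, ["alice", "bob"]), (0.3, ["alice"]), (0.1, ["carol", "bob"])]
print(author_saliency(papers))
```

The same pattern would apply to institutions, venues or fields; only the list of entities attached to each paper changes.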

Saliencies are also adjusted for the advantage that older papers (and other entities; see later) have, and the system learns how to make this adjustment using reinforcement learning.

"Because older publications have more time to be widely known, eigencentrality measure can have intrinsic bias against new publications. To adjust for this temporal bias, we impose saliency to be an autoregressive stochastic process such that the saliency of a publication will decay over time if it does not receive continuing acknowledgments, or its authors, publication venue and fields are not maintaining their saliencies. To estimate the rate of the decay, we employ a machine learning technique, called reinforcement learning, to adapt the saliency to best predict the future citation behaviors. This is possible because MAG has been observing tens of millions of citations each week, which we take as the feedback from the entire scholarly community on our saliency assessments. These observations turn out to be adequate in quantity for the reinforcement learning to arrive at a stable estimation of the unobserved parameters in the saliency framework. For more technical details, please look out for our scholarly paper on this subject." https://academic.microsoft.com/faq
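A toy illustration of the kind of autoregressive decay the FAQ describes: each period, saliency keeps a fraction `rho` of its old value and mixes in the new "acknowledgment" signal, so a paper that stops being cited fades. In MAS the decay rate is learned by reinforcement learning; here `rho` is simply a made-up constant, and the update rule is an AR(1)-style assumption, not the published model.

```python
def decayed_saliency(signals, rho=0.9, initial=0.0):
    """Toy autoregressive saliency update (an assumption, not MAS's model).

    signals: per-period acknowledgment scores, e.g. new citations.
    rho: persistence in [0, 1); MAS learns its decay, here it is fixed.
    Returns the saliency after each period.
    """
    s = initial
    history = []
    for x in signals:
        # keep part of the old saliency, blend in the new evidence
        s = rho * s + (1 - rho) * x
        history.append(s)
    return history


# Two periods of attention, then silence: saliency rises, then decays.
print(decayed_saliency([10, 10, 0, 0, 0], rho=0.5))
```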

I struggle to understand saliency fully, but I think the intriguing thing is that they calculate it not just for papers but also for other entities such as authors and journals.

"MAS uses a mixture model in which the saliency of a publication is a weighted sum of the saliencies of the entities related to the publication. By considering the heterogeneity of scholarly communications, MAS allows one publication to be connected to another through shared authors, affiliations, publication venues and even concepts, effectively ensuring the well-connectedness requirement is met without introducing a random teleportation mechanism"

Saliency is stated to be an eigenvector centrality measure. Such measures have been tried with journal-level metrics like Eigenfactor and SJR, but according to the paper they are hard to apply at the article level because the citation graph isn't well connected. People usually solve this with a "teleportation mechanism": some fraction of the time, say 15%, the walk jumps to a random node (the equivalent of a reader who decides not to follow a citation trail but uses a search engine to find another paper at random).
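For comparison, this is the classic teleportation approach (PageRank-style) as a short power-iteration sketch in Python. The 0.85 damping factor corresponds to the 15% random jump mentioned above; the tiny citation graph is invented and deliberately split into two disconnected pieces, which teleportation bridges.

```python
def pagerank(links, damping=0.85, iterations=100):
    """PageRank by power iteration, with random teleportation.

    links: dict mapping each node to the list of nodes it cites.
    With probability 1 - damping the walker jumps to a uniformly random
    node; nodes with no outgoing citations spread their score uniformly.
    """
    nodes = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        dangling = sum(rank[v] for v in nodes if not links.get(v))
        # teleportation share plus the redistributed dangling mass
        new = {v: (1 - damping) / n + damping * dangling / n for v in nodes}
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new[t] += share
        rank = new
    return rank


# Two citation "islands" that never link to each other.
print(pagerank({"A": ["B"], "B": [], "C": ["D"], "D": []}))
```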

MAS's saliency instead solves the connectivity problem by using a heterogeneous network, in which the nodes include not just publications but also other entities such as affiliations, authors, publication venues and concepts, making the graph much better connected.
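Here is a sketch of that alternative: eigenvector centrality with no teleportation on a small heterogeneous graph, where papers that never cite each other are still connected through a shared author or venue. The node names are hypothetical, and MAS's real computation (dynamic, learned, at web scale) is far more elaborate.

```python
from collections import defaultdict


def eigencentrality(edges, iterations=200):
    """Eigenvector centrality by power iteration, without teleportation.

    edges: undirected (u, v) pairs linking papers to papers, authors,
    venues, concepts and so on. The graph must be connected for a unique
    answer. Iterating with (A + I) instead of A avoids the oscillation
    plain power iteration can show on bipartite graphs; the leading
    eigenvector is unchanged.
    """
    neighbours = defaultdict(set)
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    score = {node: 1.0 for node in neighbours}
    for _ in range(iterations):
        new = {
            node: score[node] + sum(score[m] for m in neighbours[node])
            for node in neighbours
        }
        total = sum(new.values())
        score = {node: value / total for node, value in new.items()}  # normalise to sum 1
    return score


# Three papers with no citations between them, linked via an author and a venue.
edges = [
    ("paper:P1", "author:lee"),
    ("paper:P2", "author:lee"),
    ("paper:P3", "author:lee"),
    ("paper:P1", "venue:J1"),
]
print(eigencentrality(edges))
```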

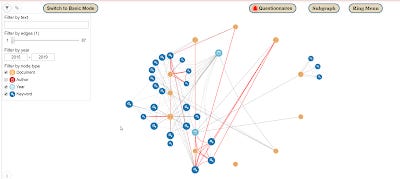

In a sense, this reminds me of the tool I was playing with in my last blog post: a heterogeneous network of not just publications but also authors, subjects etc.

By calculating saliency scores not just for publications but also for the other entities in the graph, MAS can use them for ranking and for query completion as you type in Microsoft Academic.

Also, as mentioned already, they "avoid treating eigencentrality as a static measure" and use the tens of millions of citations observed every two weeks as signals for reinforcement learning, where the "goal of the RL in MAS is to maximize the agreement between the saliencies of today and the citations accumulated NΔt into the future."

But is the saliency of a publication basically the same as its total citations? They note that saliency rankings agree with total citation rankings 80% of the time, with the 20% difference "demonstrating the effects of non-citation factors".
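The exact definition behind "agree 80% of the time" isn't spelled out in what I quoted, so the sketch below uses one plausible reading: the fraction of paper pairs that two scores put in the same order (a Kendall-style concordance). The scores are invented.

```python
from itertools import combinations


def pairwise_agreement(score_a, score_b):
    """Fraction of item pairs that two scores order the same way.

    score_a, score_b: dicts over the same item ids. Pairs tied in either
    score are skipped. Returns nan if no untied pairs remain.
    """
    agree = total = 0
    for x, y in combinations(sorted(score_a), 2):
        da = score_a[x] - score_a[y]
        db = score_b[x] - score_b[y]
        if da == 0 or db == 0:
            continue
        total += 1
        agree += (da > 0) == (db > 0)  # same direction in both rankings
    return agree / total if total else float("nan")


saliency = {"p1": 0.9, "p2": 0.5, "p3": 0.4, "p4": 0.1}
citations = {"p1": 120, "p2": 30, "p3": 45, "p4": 2}
print(pairwise_agreement(saliency, citations))  # 5 of 6 pairs agree
```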

Microsoft Academic Graph - source data for new discovery indexes?

While all this is very interesting, our main focus in this blog post is the open data source dubbed Microsoft Academic Graph (MAG).

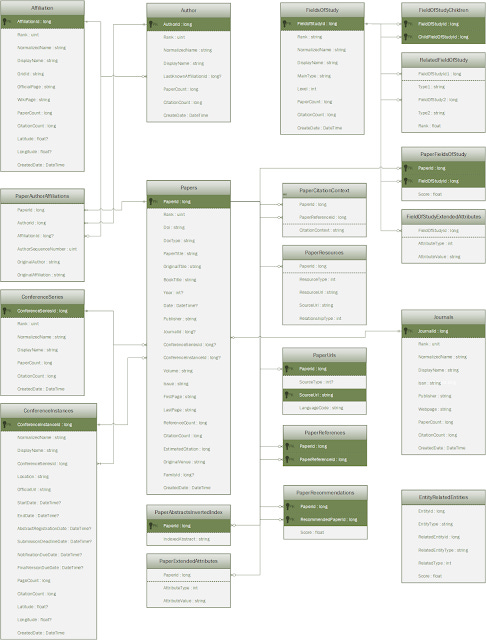

The entity relationship diagram of MAG gives a more complete overview of what is available and how it is linked together, but essentially you have entities such as publications, authors, affiliations, fields of study, publishing venues (journals and conferences) and citations.

https://docs.microsoft.com/en-us/academic-services/graph/reference-data-schema
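As a small example of working with the dump, here is a Python sketch that counts incoming citations from a PaperReferences-style file. I'm assuming a tab-separated layout of citing PaperId then cited PaperId, with no header row; check the schema page above before relying on that column order.

```python
import csv
import io
from collections import Counter


def citation_counts(paper_references_tsv):
    """Count incoming citations per paper.

    paper_references_tsv: a file-like object whose rows are assumed to be
    "<citing PaperId>\t<cited PaperId>" with no header.
    """
    counts = Counter()
    for row in csv.reader(paper_references_tsv, delimiter="\t"):
        if len(row) < 2:
            continue  # skip blank or malformed lines
        counts[int(row[1])] += 1
    return counts


sample = io.StringIO("1\t100\n2\t100\n2\t200\n")
print(citation_counts(sample).most_common(1))  # [(100, 2)]
```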

The site at http://ma-graph.org/ notes that the data is available in a variety of ways, including:

RDF dump files

Linked data format

a publicly accessible SPARQL endpoint

But let's look at why some people are excited about MAG.

As I write this in December 2019, MAG boasts 230 million publications indexed from Bing (though note that "publications" is used loosely here: only 37% are categorized as articles, 2% as conference papers and 1% as book chapters). Similar to Google Scholar, because it uses a crawler approach, it can find papers not just on journal sites but also in repositories and on authors' personal home pages, and it tries to aggregate different versions together.

Besides the size of its index, MAG also has a lot of metadata that is known to be incomplete or unavailable in Crossref.

One famous example is references, which are incomplete in Crossref due to hold-outs on open citations; Microsoft Academic bypasses much of this problem because, like Google, it scrapes the data directly.

"In addition to the references, which are links on the document level, for a fraction of all papers, the MAG also contains the sentences in which the citations occur"

Besides references, abstracts are also quite plentiful in Microsoft Academic. Ludo Waltman cites Bianca Kramer's analysis, which found that only 14.9% of Crossref items have abstracts, while Lens.org (which includes Crossref, Microsoft Academic and Pubmed) has abstracts for 60.7%.
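One practical wrinkle if you use MAG abstracts directly: as far as I know, they are distributed not as plain text but as an inverted index, with each word mapped to its positions. A minimal sketch of turning that back into readable text, assuming the JSON keys `IndexLength` and `InvertedIndex`:

```python
import json


def rebuild_abstract(inverted_index_json):
    """Rebuild plain text from an abstract stored as {word: [positions]}.

    The key names "IndexLength" and "InvertedIndex" are assumptions based
    on my understanding of MAG's abstract files.
    """
    data = json.loads(inverted_index_json)
    words = [""] * data["IndexLength"]
    for word, positions in data["InvertedIndex"].items():
        for position in positions:
            words[position] = word  # put the word back at each position it occupied
    return " ".join(w for w in words if w)


example = '{"IndexLength": 5, "InvertedIndex": {"open": [0], "data": [1, 4], "beats": [2], "closed": [3]}}'
print(rebuild_abstract(example))  # open data beats closed data
```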

Another major area of interest, for comparison purposes, is affiliation data. This isn't well covered in Crossref, but MAG has it (though there are questions about its reliability compared to established sources like Web of Science).

Another major issue with using open metadata is that while you can often find author-supplied keywords in sources such as Crossref, applying a controlled vocabulary across all articles isn't really feasible given the huge number of articles out there.

This is where topics, or what MAG calls "fields of study", come into play. Starting from a top level of 19 fields of study (e.g. Medicine, Chemistry, Business), MAG arranges topics into a hierarchy several layers deep.

Using ML techniques, it generates multiple fields of study for each paper and assigns them automatically. As noted by this blog post, it is able to tag papers about artificial intelligence even if a paper doesn't mention that phrase.

I'm not aware of any analysis on the appropriateness of this auto-assignment, but it seems to pass the first smell test (see later).
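Because a field of study can have more than one parent, the hierarchy is better thought of as a graph than a strict tree. Here is a sketch of rolling a fine-grained field up to its top-level fields; the parent links are invented for illustration, not taken from MAG.

```python
def top_level_fields(field, parents):
    """Return the top-level ancestors of `field`.

    parents: dict mapping a field to its list of parent fields
    (hypothetical example data). Fields with no parents count as top level.
    """
    roots, seen, stack = set(), set(), [field]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already visited via another parent
        seen.add(current)
        ups = parents.get(current, [])
        if not ups:
            roots.add(current)
        stack.extend(ups)
    return roots


parents = {
    "Deep learning": ["Artificial intelligence"],
    "Artificial intelligence": ["Computer science"],
    "Bioinformatics": ["Computer science", "Biology"],
}
print(top_level_fields("Bioinformatics", parents))
```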

Discovery indexes that use Microsoft Academic

Clearly, next to Crossref, MAG is a very important source of open scholarly data, and I am increasingly hearing about it being integrated into various scholarly communication systems and tools because of its usefulness.

Take the recently announced Unpaywall Journals, a tool that helps libraries predict how much they can save by cancelling journals and relying on open access and ILL/DDS. It allows you to weight downloads by institutional citations and authorship. Would it surprise you to know that both of these data come from MAG?

Microsoft itself claims over 180 projects that use MAG, though most are one-off research projects.

In this blog post, though, I'm going to focus on just one specific use of MAG: as a source for academic search engines.

Microsoft Academic, Lens.org, Scinapse, Semantic Scholar

Of course, the main and perhaps most up-to-date academic search engine using MAG is Microsoft Academic itself, which I have reviewed here twice: once when it was in beta and again when it had a fairly big interface update.

You may not be surprised to learn that at least three other search engines also use MAG data as a source for their search index: Lens.org, Semantic Scholar and Scinapse.

But why use them rather than the original Microsoft Academic? After all, the other academic search engines are likely to be updated more slowly than Microsoft Academic itself.

Differences in sources covered

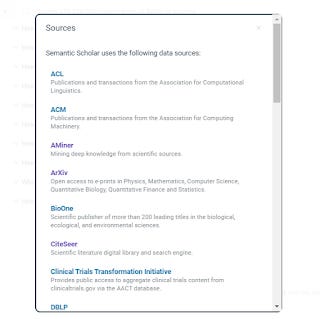

Well, the first reason is that other academic search engines can mix and match sources.

Sources making up Lens.org, Scinapse and Semantic Scholar

For example, even in terms of publication data alone, Lens.org draws not just on MAG but also on Crossref and Pubmed, while Scinapse draws on Springer Nature's SciGraph, Pubmed and apparently Semantic Scholar.

Semantic Scholar itself announced earlier this year that it had started using MAG as well, on top of the other sources and partners it already had.

Some Semantic Scholar partners

Differences in interface - precision searching vs AI

Astute readers will probably point out that MAG, with over 200 million publications, is about as comprehensive as they come, so adding more sources probably won't help much.

The other major difference between these search engines lies in the user interfaces they offer.

No single interface can be everything to every type of user, so you might choose a different search engine, even if the data is similar, depending on what you are looking for.

While search engines may have unique features, the basics generally involve typing in keywords and filtering to get at the results.

But even in this area, some use cases require features that support precise advanced searching, and few use cases require this more than systematic reviews.

I'm not a medical librarian, so my understanding of systematic reviews is limited, but essentially you need search engines with powerful filtering so you can search exhaustively and rule out papers that do not meet your specific criteria for inclusion in the systematic review.

Ideally, you want to search a big index so you don't have to do multiple searches, but the bigger the index, the more results you are going to get back, so this has to be backed up by very powerful and precise search functions that let you efficiently scope and drill down to what you need.

Tests of search engine features

Advanced power users, particularly those doing precision searching, tend to need the following features.

For starters, the search should support advanced search syntax, including Boolean operators, truncation and proximity, combined with (nested) parentheses to control the order of operations, and it should have no (or as few as possible) limits on the number of characters in the search statement.

This criterion alone disqualifies Google Scholar.
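To make those operators concrete, here is a toy Boolean search in Python with AND, OR, NOT, nested parentheses and right truncation. It is only a sketch of the semantics a power user expects, not how any of the engines discussed actually parse queries; proximity and phrase searching are left out to keep it short.

```python
import re

# Tokens: a parenthesis, or any run of characters that are not spaces or parentheses.
TOKEN = re.compile(r"\(|\)|[^\s()]+")


def search(query, docs):
    """Toy Boolean search over {doc_id: text}.

    Supports AND, OR, unary NOT, binary "X NOT Y" (read as X AND NOT Y),
    nested parentheses and right truncation ("librar*"). Operators must be
    uppercase and explicit. Unary NOT binds tightest, then AND / binary NOT,
    then OR.
    """
    tokens = TOKEN.findall(query)
    index = {d: set(text.lower().split()) for d, text in docs.items()}
    everything = set(docs)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        tok = peek()
        pos += 1
        return tok

    def term(word):
        word = word.lower()
        if word.endswith("*"):  # truncation: any word starting with the stem
            stem = word[:-1]
            return {d for d, words in index.items() if any(w.startswith(stem) for w in words)}
        return {d for d, words in index.items() if word in words}

    def parse_or():
        result = parse_and()
        while peek() == "OR":
            take()
            result = result | parse_and()
        return result

    def parse_and():
        result = parse_not()
        while peek() in ("AND", "NOT"):
            if take() == "AND":
                result = result & parse_not()
            else:  # binary NOT: set difference
                result = result - parse_not()
        return result

    def parse_not():
        if peek() == "NOT":
            take()
            return everything - parse_not()
        return parse_atom()

    def parse_atom():
        tok = take()
        if tok == "(":
            result = parse_or()
            if take() != ")":
                raise ValueError("missing closing parenthesis")
            return result
        if tok is None or tok == ")":
            raise ValueError("expected a search term")
        return term(tok)

    result = parse_or()
    if peek() is not None:
        raise ValueError(f"unexpected token: {peek()}")
    return result


docs = {
    1: "open access library discovery",
    2: "commercial discovery index",
    3: "library services platform",
    4: "open citations data",
}
print(search("(open OR commercial) AND discovery", docs))
```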

Even if such features are documented as supported, you also want to test that there are no surprises in how the various search operators work.

Many search engines, such as Primo and Summon, in theory support a lot of advanced search functions, but as librarians who have worked with such systems know, they behave oddly once you start doing complicated searches such as chaining Boolean operators.

Mailing lists for such products periodically light up with questions such as why "X NOT Y" does not get fewer results than "X OR Y".
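Set logic makes such surprises easy to test for. The helper below is a sketch in which `run_query` is a hypothetical wrapper you would write around whichever engine you are checking; it compares result sets against what the operators must return. Where an engine only reports hit counts, the first check still applies: "X NOT Y" can never return more than "X OR Y".

```python
def check_boolean_consistency(run_query, x, y):
    """Reasonableness checks on an engine's Boolean operators.

    run_query: hypothetical function returning the set of result ids for
    a query string. Each check must be True for a correct engine.
    """
    a = run_query(x)
    b = run_query(y)
    a_or_b = run_query(f"({x}) OR ({y})")
    a_and_b = run_query(f"({x}) AND ({y})")
    a_not_b = run_query(f"({x}) NOT ({y})")
    return {
        "NOT never exceeds OR": len(a_not_b) <= len(a_or_b),
        "OR is the union": a_or_b == a | b,
        "AND is the intersection": a_and_b == a & b,
        "NOT is the difference": a_not_b == a - b,
    }


# A fake engine backed by canned answers, for demonstration only.
canned = {
    "cats": {1, 2, 3},
    "dogs": {3, 4},
    "(cats) OR (dogs)": {1, 2, 3, 4},
    "(cats) AND (dogs)": {3},
    "(cats) NOT (dogs)": {1, 2},
}
print(check_boolean_consistency(canned.__getitem__, "cats", "dogs"))
```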

Besides having a big index, you also want to be able to use field searching and, on top of that, controlled vocabulary, so you can be sure you are searching for and matching what you want and not missing anything because you entered the wrong keyword.

Looking at all these requirements, one can quickly see that Pubmed/Medline (whether via the NLM interface or via Ebsco/OVID) comes closest to meeting them. Most critically, all Medline articles are tagged with MeSH headings, allowing very precise searches if you know MeSH.

But are there others?

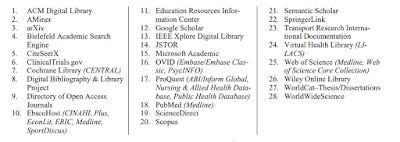

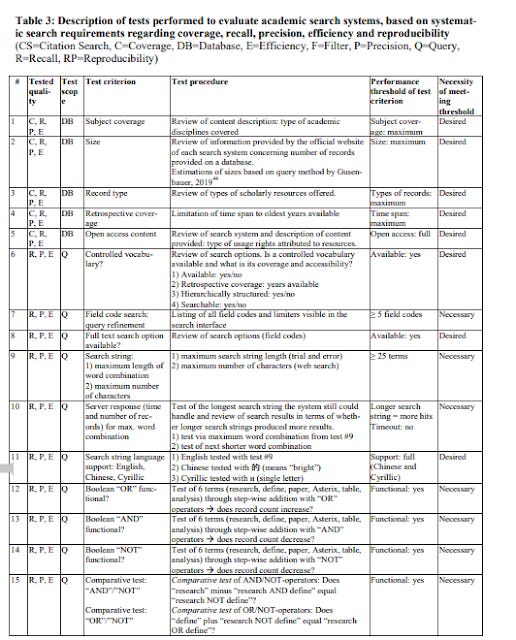

In the amazing paper "Which Academic Search Systems are Suitable for Systematic Reviews or Meta‐Analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed and 26 other Resources", the authors review a lengthy list of features of 28 academic search systems (Google Scholar, PubMed and 26 others) to study this very issue.

They list 27 tests to help determine how well a search system supports systematic reviews. These tests not only check for the availability of features such as truncation, bulk export and post-filtering, but in many cases recommend reasonableness tests to check whether the search results make sense and are reproducible!

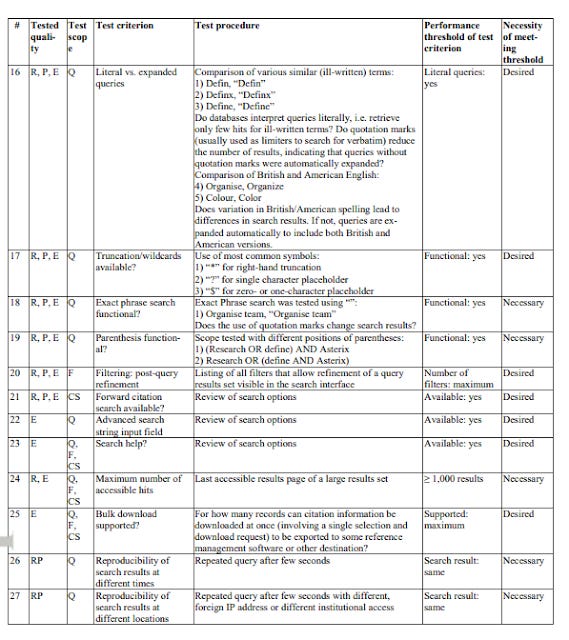

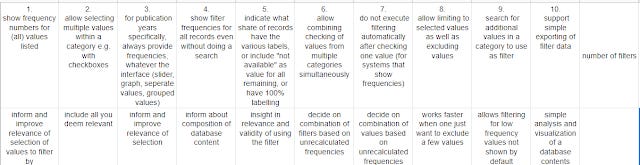

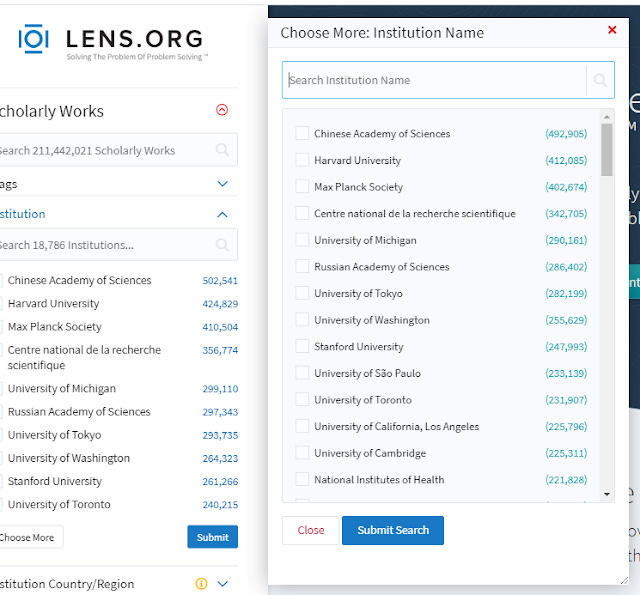

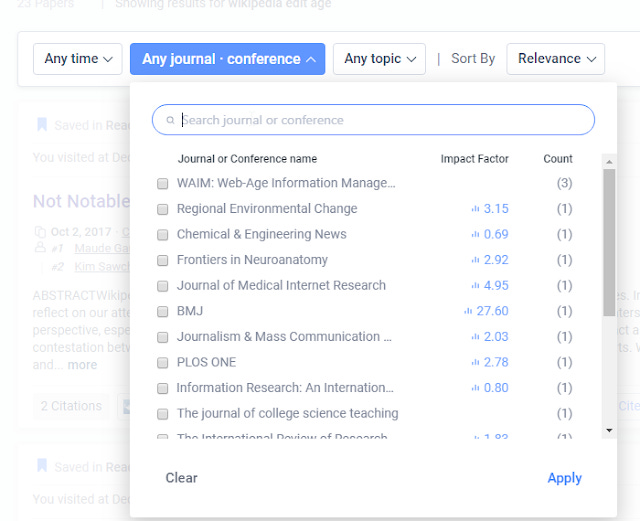

In a similar vein but with a more specific focus, Jeroen Bosman, of Innovations in Scholarly Communication fame, recently released a Google Doc, "Scholarly search engine comparison", that compares scholarly search engines in depth with regard to filtering functionality.

His test has 10 criteria relating to filtering, with an 11th field recording the number of filters.

While some of the features he lists are fairly common, such as "show frequency numbers for (all) values listed" or "allow selecting multiple values within a category e.g. with checkboxes", others are pretty rare but extremely useful if you want to filter big result sets efficiently, e.g. "allow limiting to selected values as well as excluding values" and "search for additional values in a category to use as filter".

For example, say you did a search that gave you 2,000,000 results, and you want to filter down to a certain affiliation. Most search engines would list only the top N affiliations (where N might be as few as 20 or as many as 100).

A better search engine such as Lens.org lets you search for matching affiliation values, so even if the affiliation you are looking for has only one entry, it can be found and filtered.

Search Facet values in Lens.org

Searching is also important because sometimes you are not sure of the exact facet value you are looking for. For example, affiliation names might vary slightly, so you might want to search for one word rather than the whole phrase to see what matches.
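The facet behaviours described above can be sketched in a few lines of Python: listing only the top N values, searching all values for a word, and filtering with both included and excluded values (several included values are OR'd within the facet). The records and field names are invented, and real engines compute facets inside the index rather than in a loop like this.

```python
from collections import Counter


def facet_counts(records, field):
    """How many records carry each value of a (possibly multi-valued) field."""
    return Counter(v for rec in records for v in set(rec.get(field, [])))


def top_values(counts, n=20):
    """What most engines show: only the n most frequent values."""
    return [value for value, _ in counts.most_common(n)]


def search_values(counts, word):
    """Every value containing `word` (case-insensitive), however rare,
    most frequent first, then alphabetical."""
    word = word.lower()
    return sorted((v for v in counts if word in v.lower()), key=lambda v: (-counts[v], v))


def apply_filter(records, field, include=(), exclude=()):
    """Keep records matching any `include` value and none of `exclude`."""
    include, exclude = set(include), set(exclude)
    kept = []
    for rec in records:
        values = set(rec.get(field, []))
        if include and not values & include:
            continue
        if values & exclude:
            continue
        kept.append(rec)
    return kept


records = [
    {"id": 1, "affiliation": ["University of Oxford"]},
    {"id": 2, "affiliation": ["University of Oxford", "Harvard University"]},
    {"id": 3, "affiliation": ["Harvard University"]},
    {"id": 4, "affiliation": ["Singapore Management University"]},
]
counts = facet_counts(records, "affiliation")
print(top_values(counts, n=2))          # the rare affiliation is not listed
print(search_values(counts, "singapore"))  # but it can still be found
```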

Lens.org is the best by far for precision searching

Taken together, a search engine that rates highly on both of these tests would give the searcher very controlled and precise searching.

I was not at all surprised that Lens.org came out on top, with nearly perfect scores in Jeroen's test of filter features.

10 best practices for filters / faceted search implementation in (scholarly) search engines. It seems there is quite some room for improvement, e.g. in allowing selection of multiple values and making sure frequencies shown always add up to the total https://t.co/cgmaAws1zt. 1/3 pic.twitter.com/9QwGXpNRsX

— Jeroen Bosman (@jeroenbosman) December 12, 2019

I have blogged often about how powerful Lens.org's features are, but I was still surprised when I tested it against the criteria for systematic reviews listed in the earlier paper (Lens.org was not included in that study, though Semantic Scholar and Microsoft Academic were): Lens.org passed all the tests too.

It was, though, a touch slow for very big result sets.

Another advantage of Lens.org is that it incorporates two different systems of controlled vocabulary, MeSH (from Pubmed) and Field of Study (from MAG), combined with a very powerful search interface offering advanced field search and post-filters, all wrapped in state-of-the-art visualization features.

Filtering in Lens.org by MeSH, Field of Study and more

Here is a relatively simple search in Lens.org using field searching.

Field searching in Lens.org

Whether you are using Lens.org to find big sets of papers for a literature review or doing bibliometrics for organizations, it is one of the most powerful search engines in the world, with practically any search feature you can think of. And this is without even talking about its patent searching capabilities.

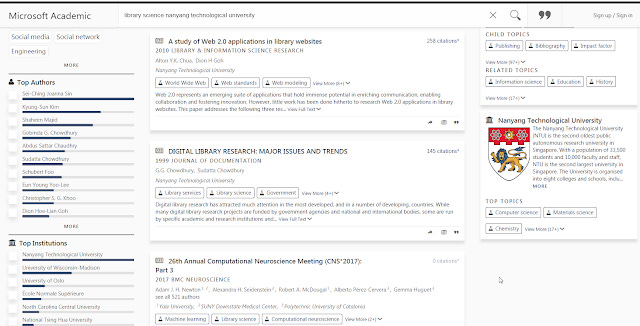

Microsoft Academic - the other extreme - semantic search

That said, many users will find Lens.org intimidating with all its search filters (Jeroen counts 18!), and for many users such a tool might come across as too "librarianish".

At the other extreme, we have Microsoft Academic. While it has a decent set of filters compared to Google Scholar, the amount of filtering you can do within each facet is limited.

Microsoft Academic typical search page

Interestingly, Microsoft Academic responded with a tweet which, if I read it correctly, suggests that unlike most search engines, which list facet values in order of frequency, they rank them by "relevance" (saliency?).

If filters are to "inform and improve relevance", frequencies are not the right thing to show because of weak correlation to relevance and gullibility. Our filters are thus decorated and ranked with relevance measures as described in https://t.co/kL29lMZTRm.

— Microsoft Academic (@MSFTAcademic) December 12, 2019
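To show why the choice matters, this sketch orders facet values either by frequency or by the summed relevance of the results that carry them. Summing relevance is my own assumption about what "ranked with relevance measures" might mean, not Microsoft Academic's documented method, and the data is invented.

```python
from collections import defaultdict


def rank_facets(results, field, by="frequency"):
    """Order facet values by result count or by summed relevance.

    results: list of (relevance, record) pairs, where each record maps a
    field name to a list of values. Ties are broken alphabetically.
    """
    score = defaultdict(float)
    for relevance, record in results:
        for value in set(record.get(field, [])):
            score[value] += 1.0 if by == "frequency" else relevance
    return sorted(score, key=lambda v: (-score[v], v))


# Three weak matches in one venue versus one strong match in another.
results = [(0.1, {"venue": ["Mega Journal"]})] * 3 + [(0.9, {"venue": ["Top Conf"]})]
print(rank_facets(results, "venue"))                  # frequency order
print(rank_facets(results, "venue", by="relevance"))  # relevance order
```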

In any case, the filters available are still better than Google Scholar's and on par with most big academic search engines, but there is a twist here.

Microsoft Academic has no support for even the basic Boolean OR operator

Microsoft Academic has zero support for Boolean operators, and by this I don't just mean advanced ones like proximity or truncation, but even simple ones like OR, which even Google Scholar supports. Add the limited character length allowed for search queries, and you are left wondering what is going on here.

While it is no surprise that Microsoft Academic is not going to be a tool for full-blown advanced searching, the lack of support for the most basic Boolean operator that Google Scholar supports is mind-blowing.

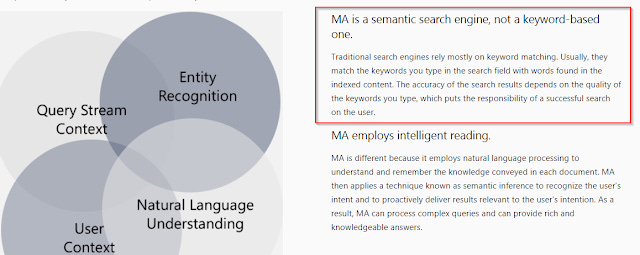

Then again, Microsoft Academic has always been billed as a "semantic search" tool, and this isn't just marketing.

As noted in the FAQ, you shouldn't be entering keywords (though that seems to work); instead you should pay attention to the query suggestions.

Query suggestions appear as you type.

https://academic.microsoft.com/faq

All in all, Microsoft Academic is a very different type of search engine/discovery index, built around semantic search, while Lens.org is a traditional power search tool.

My impression is that the other two discovery indexes lie in between, with Semantic Scholar closer to the Microsoft Academic end of the spectrum, followed by Scinapse.

I'll end by briefly mentioning the unique features of Semantic Scholar and Scinapse.

Unique features of Semantic Scholar

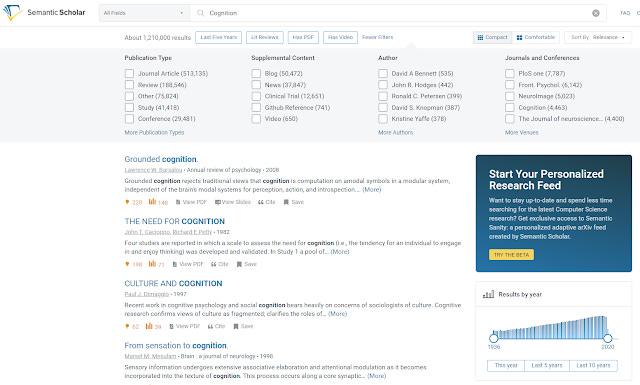

Semantic Scholar is a search engine backed by the Allen Institute for Artificial Intelligence. With the tagline "cut through the clutter", it was launched in 2015 and aims to use AI technology that "analyzes publications and extracts important features using machine learning techniques."

I'll talk more about its unique innovations later, but how is this related to MAG? Up to October 2019, its index covered mostly Computer Science and Life Sciences, using data obtained from various partners. Since then it has announced a partnership with Microsoft, and with the use of Microsoft Academic Graph, the index has exploded to over 170 million records.

The data seems to be available as GORC: A large contextual citation graph of academic papers at https://github.com/allenai/s2-gorc/

I've recently blogged about Semantic Scholar, so I will just mention the highlights.

As the name "semantic" suggests, like Microsoft Academic it aims to use AI/ML to help understand the meaning of the papers it indexes.

In one way it does something very similar to Microsoft Academic (automatic hierarchical subject tagging); in another it does something totally different (citation context).

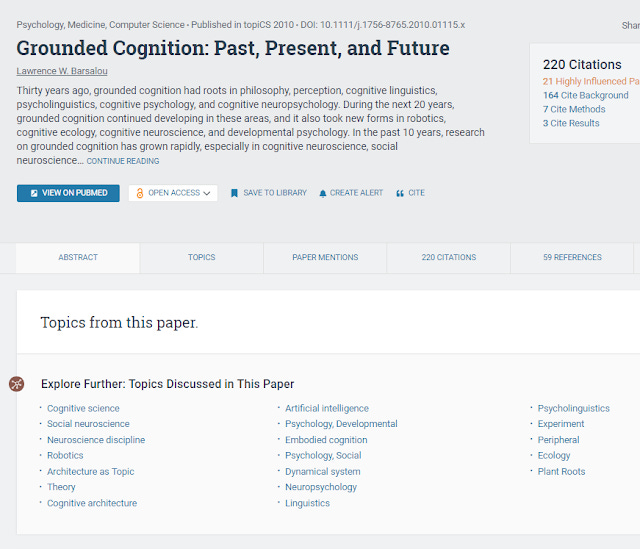

Automatic hierarchical subject tagging - Topics vs Fields

Because Semantic Scholar now uses MAG, it includes filters by Fields (MAG's Fields of Study, using only the top-level 15 fields). It supplements these with its own "topic" tags, which, like Microsoft's Fields of Study, are automatically generated from the full text.

Topics in Semantic Scholar

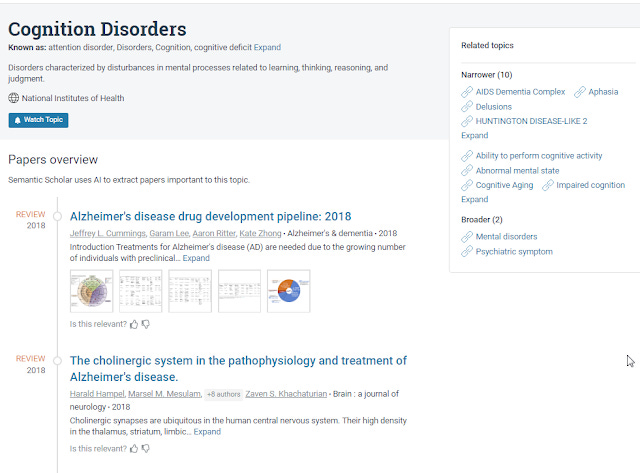

Like Fields of Study, these topics are hierarchical, and you can navigate to narrower or broader levels.

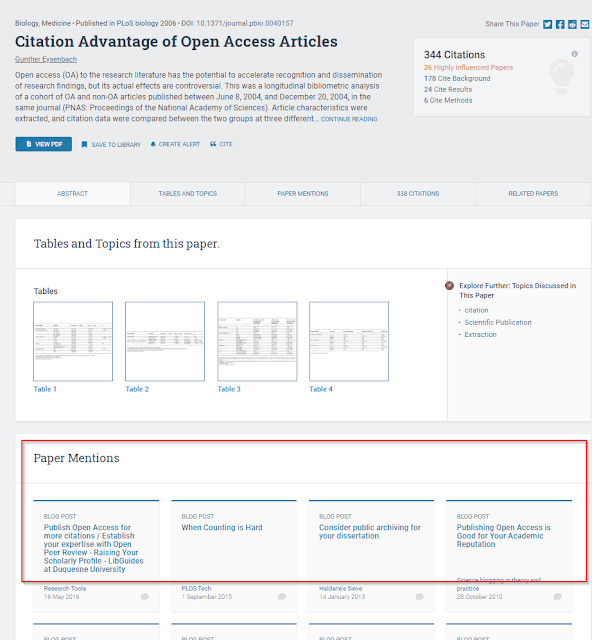

Citation context - background, method and results

Recently there has been a lot of interest in citation context: not just whether a citation occurred, but the context in which it did.

Scite is one of those leading the charge, using machine learning to determine whether a citation is a "mention", "supporting" or "contradicting".

The other way to think about citation context is in terms of the reason for citing. Semantic Scholar tries to determine whether a citation is citing the background, the method or the results.

Clearly this feature can be very useful if a paper has a lot of citations and you want to narrow down to those that are important or impactful, such as citations of its methods or results.

On top of that, they also tag citations from papers that were considered to be "highly influenced" by the cited paper.
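Filtering by citation intent is easy to picture once each citation carries labels. Here is a sketch with a hypothetical `Citation` record whose intent labels mirror the three categories in the text (background, method, result); Semantic Scholar's actual data model and label names may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Citation:
    """One incoming citation with hypothetical intent labels."""
    citing_title: str
    intents: set = field(default_factory=set)  # e.g. {"method", "result"}
    highly_influential: bool = False


def filter_citations(citations, wanted=("method", "result"), influential_only=False):
    """Keep citations whose intents overlap `wanted`; optionally keep only
    those flagged as highly influential."""
    wanted = set(wanted)
    return [
        c for c in citations
        if c.intents & wanted and (c.highly_influential or not influential_only)
    ]


cites = [
    Citation("A", {"background"}),
    Citation("B", {"method"}, highly_influential=True),
    Citation("C", {"result", "background"}),
]
print([c.citing_title for c in filter_citations(cites)])  # ['B', 'C']
```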

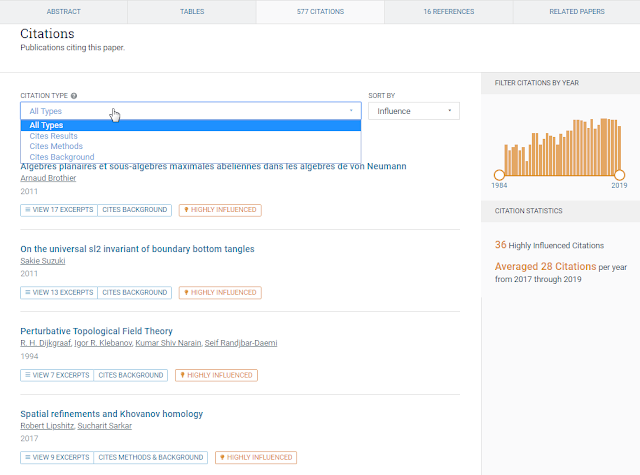

Finding related supplementary material - blog posts, slides etc.

As they draw from sources such as Pubmed and Microsoft Academic, this means a flood of non-publication material, so it's a nice touch that they offer supplemental content (e.g. video, news, clinical trials) as a separate filter rather than lumping it into the publication type filter, signalling that it is treated differently, which it indeed is.

Filters in Semantic Scholar

They try to relate each of these supplementary materials to the traditional publication, so each item detail page has a supplementary material section that lets you look at the slides, clinical trials and so on associated with the paper.

"Mentions" section in Semantic Scholar that finds links to supplemental material - blog posts, news articles, slides etc.

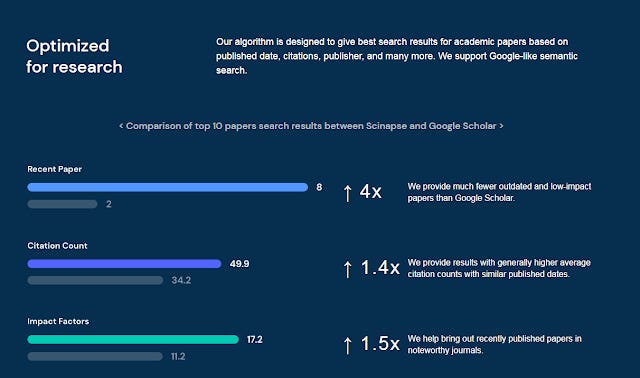

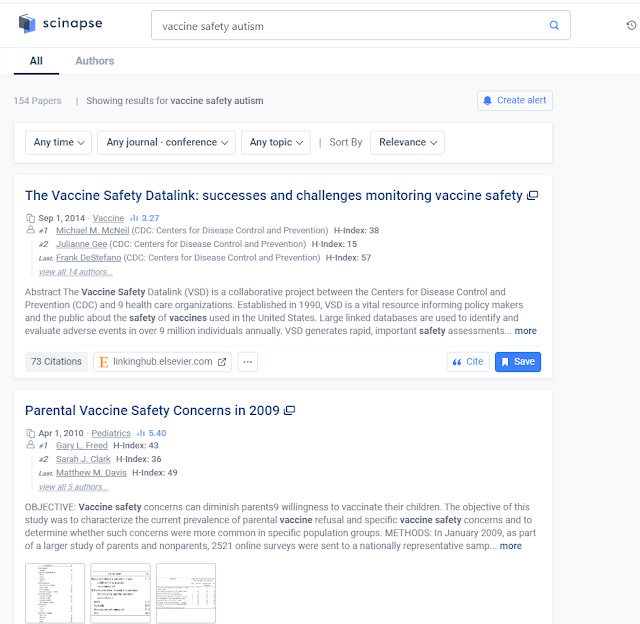

Unique features of Scinapse

I was alerted to the existence of Scinapse, a discovery index, a couple of months ago.

With the statement "We're better than Google Scholar. We mean it.", it naturally drew my interest.

As evidence they produce the following

Pluto Network, based in Seoul, South Korea, is the team behind this non-profit service. I have vaguely heard of Pluto Network in the context of blockchain technology for academic applications, but the Scinapse FAQ notes that it is not built on blockchain.

The FAQ claims 200 million publications with over 300,000 visitors a month.

Scinapse features

Scinapse has pretty typical features for services of this class. You enter keywords and can filter by time, journal title, topic (actually uncontrolled keywords) etc.

Filters in Scinapse

They encourage you to create an account so you can add papers to collections and create alerts, and it will provide recommendations.

The main thing that caught my eye as somewhat unusual is its focus on showing H-index metrics for authors as well as journals.

Typical Scinapse search result

It's unclear to me how useful these metrics are, particularly for authors where the data is unlikely to be that clean.
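The h-index itself is simple to compute, which also makes it easy to see how dirty author data distorts it. In this sketch, two different authors who each have an h-index of 3 produce an h-index of 5 if their papers are wrongly merged into one profile (the citation counts are invented).

```python
def h_index(citation_counts):
    """Largest h such that h items each have at least h citations."""
    counts = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(counts, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts are descending, so no later item can qualify
    return h


author_a = [6, 6, 6]
author_b = [5, 5, 5]
print(h_index(author_a), h_index(author_b))  # 3 3
print(h_index(author_a + author_b))          # 5, if the two profiles are merged
```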

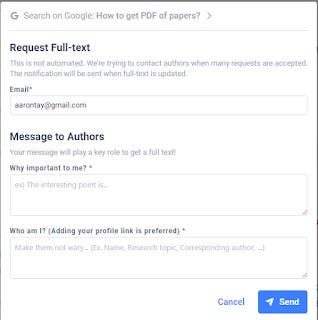

Scinapse is also able to detect the corresponding author, and the Scinapse page suggests that "for papers that need permission to grant access or approval, Scinapse will request paper access directly from the author/s.", yet when I try that option I see this warning.

Scinapse request full-text feature

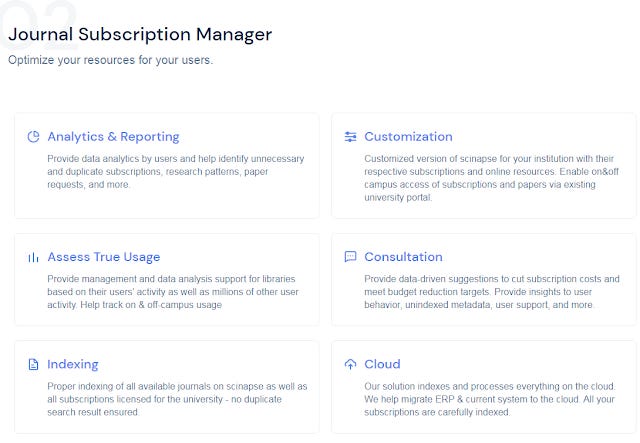

Scinapse for institutions

One of the more interesting things I noticed about Scinapse is that there is an institutional version!

There is even a comparison table against EDS, Primo and Summon, with Scinapse supposedly coming out on top in terms of deduping.
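Deduplication is the hard part of aggregating many sources. A deliberately naive sketch groups records by normalised title and year; real systems (and whatever Scinapse actually does) use far more signals, such as DOIs, author lists and fuzzy matching. The field names and records are invented.

```python
import re
import unicodedata


def normalise_title(title):
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def dedupe(records):
    """Group records sharing a normalised title and year.

    Returns one list of records per presumed work, in first-seen order.
    """
    groups = {}
    for rec in records:
        key = (normalise_title(rec["title"]), rec.get("year"))
        groups.setdefault(key, []).append(rec)
    return list(groups.values())


records = [
    {"title": "Open Discovery Indexes!", "year": 2019, "source": "journal"},
    {"title": "open  discovery indexes", "year": 2019, "source": "repository"},
    {"title": "Open Discovery Indexes", "year": 2020, "source": "preprint"},
]
for group in dedupe(records):
    print([r["source"] for r in group])
```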

Some of the other claimed advantages are kind of true. For example, EDS, Primo and Summon generally don't have affiliation data. Primo and Summon sort of have citation counts, but only if you subscribe to Web of Science or Scopus.

Scinapse, on the other hand, has its own metrics: not just citation counts for articles, but also H-index and impact factors for journals. I'm not sure how important that is.

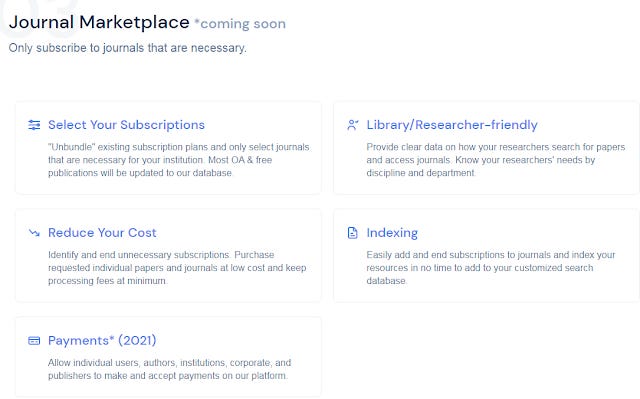

On top of that, Scinapse for institutions also comes with "Journal Subscription Manager" and "Journal Marketplace" services, which look like some sort of knowledge base and analytics system, similar to the e-resources part of Intota.

Conclusion

We live in a time when large (>50 million records) scholarly discovery indexes are no longer as hard to create as in the past, thanks to freely available scholarly article data like Crossref and MAG.

It will be interesting to see how this all shakes out as people figure out how to innovate.

Additional details

Description

In 2015, Marshall Breeding the guru of Library technology and discovery summarized the state of Library Discovery in a NISO White paper entitled "The Future of Library Discovery"

Identifiers

- UUID: 7058b333-15ba-4038-ad49-ec64aca8df74
- GUID: 164998283
- URL: https://aarontay.substack.com/p/the-rise-of-open-discovery-indexes

Dates

- Issued: 2019-12-22T21:08:00
- Updated: 2019-12-22T21:08:00