ParrotGPT: On the Advantages of Large Language Model Tools for Academic Metadata Schema Mapping

Picture, if you will, the labyrinthine world of academic information management, where metadata schema mapping serves as a vital underpinning for the exchange and intermingling of data across diverse platforms and systems. This arena has long been dominated by the venerable metadata schema crosswalk, which, though serviceable, has begun to show its age. The traditional method of creating metadata schema crosswalks is a time-consuming and monotonous task that requires significant coordination. Enter the digital behemoths known as large language models (LLMs), whose unparalleled prowess in understanding and generating the complex tapestry of human language has opened up a new frontier in metadata schema mapping. I think LLMs present a far superior alternative to the traditional crosswalks of yore.

LLMs have improved significantly and can now perform scholarly metadata mapping with minimal input. In a previous blog post, I showed that with a short prompt, the LLM behind ChatGPT can convert metadata from the Crossref schema to the DataCite schema with high accuracy, and can even enrich the metadata along the way. Among the GPT-3 models available, "Davinci" stands out as the most capable at this task: it performs far better at metadata transformation than smaller GPT-3 models such as Ada and Curie. Because "Davinci" was trained on a broad range of data, it can handle mappings between many different metadata schemas. This level of precision is hard to achieve with crosswalks, which require considerable manual effort to create and maintain.
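The prompt from that earlier post is not reproduced here, so the following is only a minimal sketch, assuming a JSON-serializable Crossref record, of how such a short conversion prompt might be assembled before it is sent to a model. The template wording, the `build_mapping_prompt` helper, and the sample record are all hypothetical.

```python
import json

# Hypothetical template: illustrates a "short prompt" for schema conversion,
# not the exact wording used in the earlier blog post.
PROMPT_TEMPLATE = (
    "Convert the following metadata record from the {source} schema "
    "to the {target} schema. Return only the converted record.\n\n"
    "{record}"
)


def build_mapping_prompt(record: dict, source: str, target: str) -> str:
    """Embed a metadata record (serialized as JSON) in a conversion prompt."""
    return PROMPT_TEMPLATE.format(
        source=source,
        target=target,
        record=json.dumps(record, indent=2, ensure_ascii=False),
    )


if __name__ == "__main__":
    # Hypothetical Crossref-style record; the DOI uses the 10.1234 example prefix.
    crossref_record = {
        "DOI": "10.1234/example",
        "title": ["An example article"],
        "type": "journal-article",
    }
    print(build_mapping_prompt(crossref_record, "Crossref", "DataCite"))
```

Sending the resulting string to a model such as Davinci is left to whichever client library you use; keeping the template separate makes it easy to compare prompt variants.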

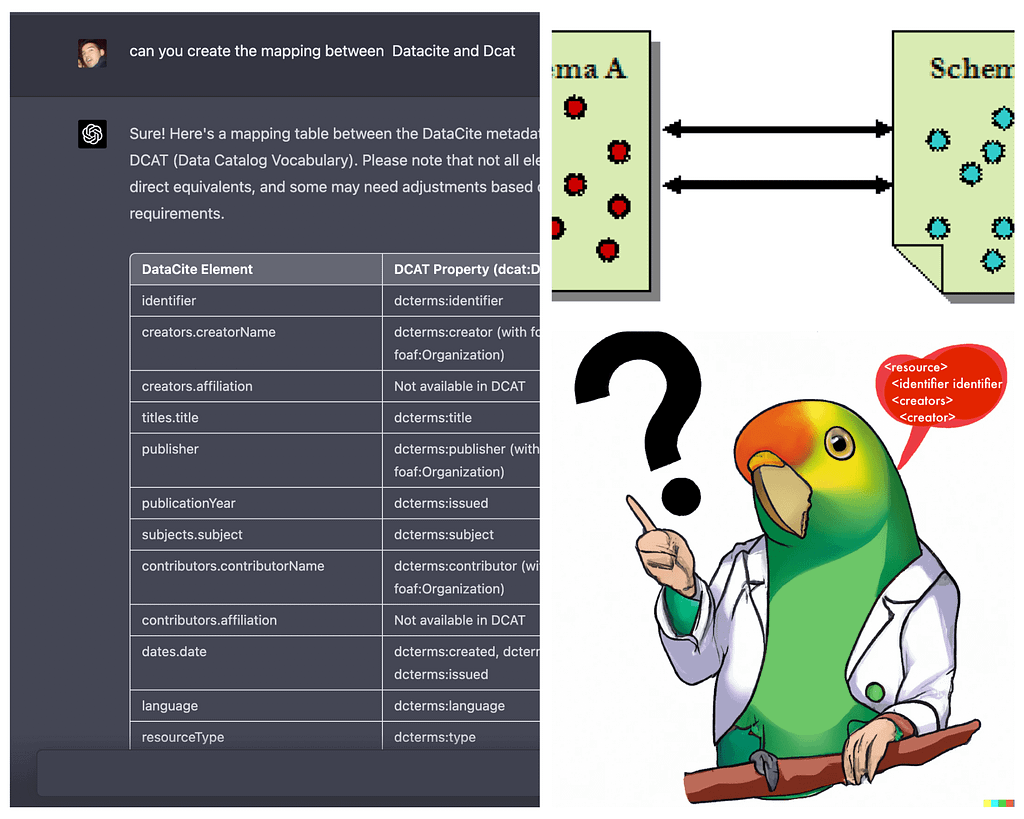

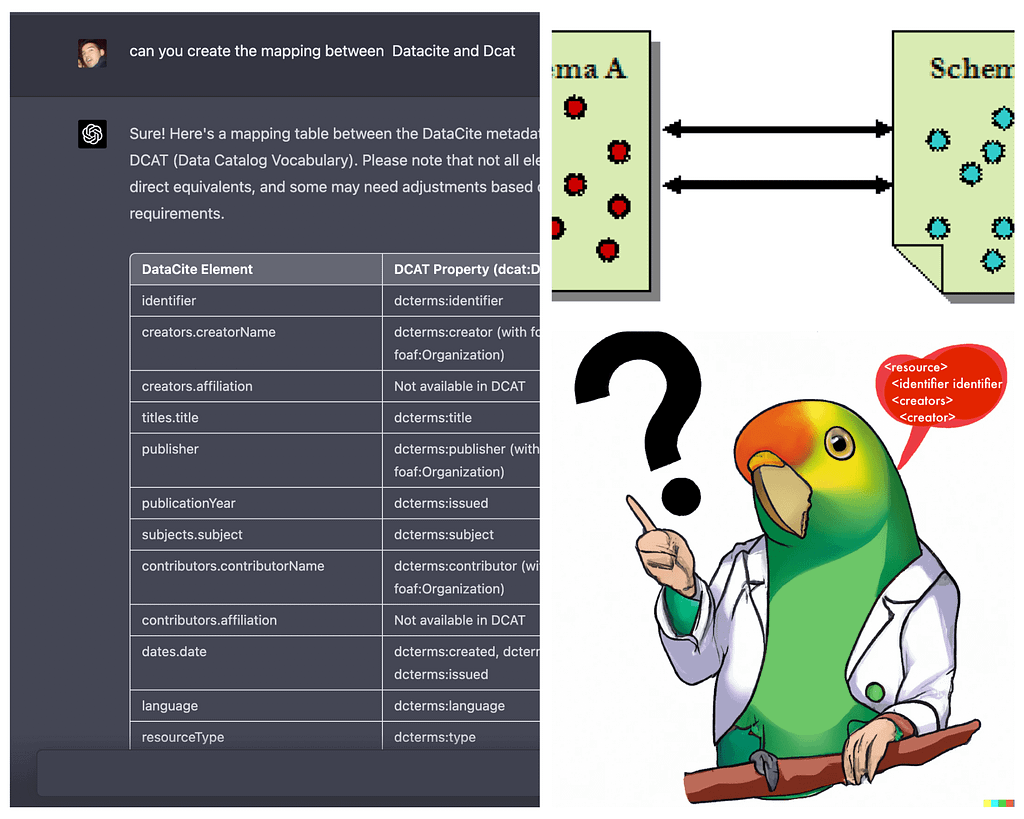

Furthermore, the newly released GPT-4 model is more accurate and can perform tasks that earlier GPT-3-family models decline to attempt. For example, all GPT-family models can map metadata, with varying degrees of accuracy, but GPT-3-family models refuse to generate a crosswalk between two schemas. GPT-4 does perform that task, with good results when compared to crosswalks created by experts. Below you can see how GPT-4 generates a crosswalk between the DataCite Schema and schema.org (type ResearchProject).
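If a generated crosswalk is returned as a two-column Markdown table (an assumption; the output format depends on how the model is prompted), it can be turned into a lookup table for reuse or for comparison with an expert crosswalk. The sketch below does this with a hypothetical `parse_crosswalk_table` helper; the sample rows are illustrative and are not the GPT-4 output discussed here.

```python
def parse_crosswalk_table(text: str) -> dict[str, str]:
    """Parse a two-column Markdown table (source | target) into a dict.

    Assumes the first table row is a header and skips the |---|---| separator.
    """
    rows = [line.strip() for line in text.splitlines() if line.strip().startswith("|")]
    mapping: dict[str, str] = {}
    for row in rows[1:]:  # rows[0] is the header row
        cells = [cell.strip() for cell in row.strip("|").split("|")]
        # Skip the separator row (only dashes, colons, spaces) and short rows.
        if len(cells) < 2 or set("".join(cells)) <= set("-: "):
            continue
        source, target = cells[0], cells[1]
        if source and target:
            mapping[source] = target
    return mapping


if __name__ == "__main__":
    # Illustrative rows only: DataCite properties mapped to schema.org Thing properties.
    sample = """
| DataCite | schema.org |
|---|---|
| titles | name |
| descriptions | description |
| identifier | identifier |
"""
    print(parse_crosswalk_table(sample))
```

Once in dictionary form, a model-generated crosswalk can be diffed against an expert one key by key.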

Tools to Interact with Large Language Models

In an effort to harness the full potential of the GPT models for metadata schema mapping, I have taken it upon myself to develop a Python package, Parrot-GPT. This tool uses the formidable GPT-3 models to translate metadata from one schema to another, obviating the need to manually create and maintain crosswalks, thus bestowing upon its users the gift of saved time and resources. As of this publication, I am working on incorporating the new OpenAI Chat API syntax for GPT-3.5 and GPT-4. Contributions are welcome.
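The Chat API differs from the older completion endpoint in that it takes a list of role-tagged messages rather than a single prompt string. A minimal sketch of how a conversion request might be expressed in that format follows; the system and user wording and the `build_chat_messages` helper are hypothetical, not Parrot-GPT's current code.

```python
def build_chat_messages(record_text: str, source: str, target: str) -> list[dict[str, str]]:
    """Build a Chat API message list asking a model to convert a metadata record."""
    # Hypothetical instructions; adjust the wording to your own schemas and needs.
    system_msg = "You are an expert in scholarly metadata schemas and crosswalks."
    user_msg = (
        f"Convert the following {source} metadata record to {target}. "
        f"Return only the converted record.\n\n{record_text}"
    )
    return [
        {"role": "system", "content": system_msg},
        {"role": "user", "content": user_msg},
    ]


if __name__ == "__main__":
    messages = build_chat_messages("<resource/>", "DataCite XML", "DCAT")
    # The list would be passed as the `messages` argument of a chat completion call,
    # e.g. openai.ChatCompletion.create(model="gpt-4", messages=messages) in
    # openai-python < 1.0, or client.chat.completions.create(...) in later versions.
    for message in messages:
        print(message["role"], "->", message["content"][:60])
```

Separating the standing instructions (system role) from the record itself (user role) is the main structural change compared with a single completion prompt.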

Continuous Adaptation in the LLM's Realm

Consider the crosswalk, which, much like an aging, inflexible cartographer, finds itself unable to evolve with minimal intervention. In stark contrast, the LLM-based mapping is a veritable chameleon, gracefully adapting to new schema properties and maintaining accuracy in its ever-changing environment. Furthermore, Parrot-GPT can even generate metadata for new schema properties, making it a truly versatile tool for metadata management.

To test this, I created a metadata record containing fields that were not part of its source schema and asked Parrot-GPT to translate it into another schema. The goal of the experiment was to demonstrate that the LLM behind Parrot-GPT can correctly map metadata fields between schemas even when the record introduces fields that are absent from the source schema.

To begin the experiment, I created a metadata file with a new field that does not exist in the DataCite Schema but has an analogous field in the DCAT schema. I then asked Parrot-GPT to convert the metadata file from the DataCite Schema to the DCAT schema, with no prior knowledge of the mapping between the two schemas. For the new field, I chose "distribution", a field that does not exist in DataCite Schema 4.4 but will be included in the upcoming version 4.5. I took the example from those DataCite is preparing for the new schema release.
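To see why a fixed crosswalk struggles with such a field, consider a minimal sketch that applies a hand-written DataCite-to-DCAT lookup table to a simplified, flattened record. The crosswalk entries, the `apply_crosswalk` helper, and the toy record are hypothetical; the point is that a crosswalk written before "distribution" existed simply has no row for it, so the field stays unmapped until someone updates the table.

```python
def apply_crosswalk(record: dict, crosswalk: dict[str, str]) -> tuple[dict, list[str]]:
    """Rename record fields using a fixed crosswalk.

    Returns the mapped record and the list of fields the crosswalk does not cover.
    """
    mapped: dict = {}
    unmapped: list[str] = []
    for field, value in record.items():
        target = crosswalk.get(field)
        if target is None:
            unmapped.append(field)  # no row in the crosswalk for this field
        else:
            mapped[target] = value
    return mapped, unmapped


# Hypothetical excerpt of a DataCite -> DCAT crosswalk predating the new field.
DATACITE_TO_DCAT = {
    "titles": "dct:title",
    "descriptions": "dct:description",
    "publisher": "dct:publisher",
}

if __name__ == "__main__":
    # Toy, flattened record; real DataCite XML is nested and namespaced.
    record = {
        "titles": "Example dataset",
        "publisher": "Example Publisher",
        "distribution": {"contentUrl": "https://example.org/data.csv"},
    }
    mapped, unmapped = apply_crosswalk(record, DATACITE_TO_DCAT)
    print(mapped)
    print("Unmapped:", unmapped)
```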

Parrot-GPT can be run directly from the CLI by specifying the file to transform (-mf), the input schema (-i), and the output schema (-t). In the example below, I transformed the DataCite Schema 4.5 metadata file to the DCAT schema. The input and output files can be found on GitHub.

python -m parrot_gpt.cli -mf tests/example-distribution.xml -i datacite_xml -t DCAT > output.rdf
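For batches of records, the same command can be scripted. The following is a minimal sketch that assumes Parrot-GPT is installed in the current Python environment and reuses only the three flags shown above; the helper names and the `records/` directory layout are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def build_parrot_command(metadata_file: str, input_schema: str, target_schema: str) -> list[str]:
    """Assemble the Parrot-GPT CLI call using the current Python interpreter."""
    return [
        sys.executable, "-m", "parrot_gpt.cli",
        "-mf", metadata_file,
        "-i", input_schema,
        "-t", target_schema,
    ]


def transform_directory(src_dir: str, input_schema: str, target_schema: str, suffix: str) -> None:
    """Run the CLI on every XML file in src_dir, writing stdout next to each input."""
    for path in sorted(Path(src_dir).glob("*.xml")):
        cmd = build_parrot_command(str(path), input_schema, target_schema)
        with open(path.with_suffix(suffix), "w", encoding="utf-8") as out:
            # Equivalent to the shell redirect `> output.rdf`; raises if the CLI fails.
            subprocess.run(cmd, stdout=out, check=True)


if __name__ == "__main__":
    transform_directory("records", "datacite_xml", "DCAT", ".rdf")
```

Passing the arguments as a list rather than a shell string avoids quoting problems with file names, and `check=True` stops the batch at the first failed conversion.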

The results were promising, demonstrating that LLM mapping can handle new schema properties with minimal intervention. The new field "distribution" was correctly mapped to the DCAT class Distribution, and all of its sub-properties were also mapped correctly. Interestingly, the LLM chose to map the DataCite contentUrl property to the DCAT accessURL property, which is arguably not the most suitable candidate. Repeated runs of the same experiment showed that the model would sometimes choose the downloadURL property instead. This raises questions about the consistency of the model's decisions. Nevertheless, this kind of issue could likely be addressed through fine-tuning. Overall, this capability makes LLM mapping more flexible and adaptable than crosswalks.
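One simple way to quantify that variability is to record which target property the model picks in each repeated run and measure how often the most common choice appears. The sketch below assumes the choices have already been extracted from each output file; the `summarize_choices` helper is hypothetical, and no results from the experiment above are reproduced in it.

```python
import sys
from collections import Counter


def summarize_choices(choices: list[str]) -> tuple[str, float]:
    """Return the most frequent mapping choice and the share of runs that made it."""
    if not choices:
        raise ValueError("at least one run is required")
    counts = Counter(choices)
    top_choice, top_count = counts.most_common(1)[0]
    return top_choice, top_count / len(choices)


if __name__ == "__main__":
    # One chosen target property per run, passed on the command line.
    runs = sys.argv[1:]
    if runs:
        choice, share = summarize_choices(runs)
        print(f"{choice}: chosen in {share:.0%} of runs")
    else:
        print("usage: python summarize_choices.py PROPERTY [PROPERTY ...]")
```

A share well below 100% across otherwise identical runs signals the kind of inconsistency described above, and the same measure gives a baseline to re-check after any fine-tuning.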

I think the experiment supports the effectiveness of using LLMs for metadata schema mapping, as they can handle new and unexpected metadata fields with minimal additional input or intervention. We need to explore whether further fine-tuning can improve this general model. These capabilities make LLM mapping a highly scalable and efficient solution for managing metadata schemas in academic institutions and other organizations that deal with large volumes of data.

The Diminishing Drawbacks of LLMs

Despite their advantages, LLMs used for metadata schema mapping have some drawbacks. One is their limited accuracy when dealing with complex and nuanced metadata schemas; as the experiment showed, human intervention may still be necessary to ensure accuracy. On the other hand, creating metadata schema crosswalks can be a tedious, manual process that requires continuous updates to remain accurate. Additionally, crosswalks may not scale as well as LLMs in handling the proliferation of new schemas and their fast-paced evolution.

Large language models, particularly state-of-the-art models like GPT-3, require substantial computational resources to run. This may present a barrier for smaller institutions or those with limited budgets. In contrast, traditional crosswalks can be created and maintained with significantly less computational power. However, recent developments have made LLM-based services truly affordable. While I was writing this article, Microsoft announced the Azure OpenAI Service, which provides REST API access to OpenAI's language models, including the GPT-3, Codex, and Embeddings model series, at very affordable prices. This very month, an inference implementation of Facebook's LLaMA model written in pure C/C++ (llama.cpp) was published, allowing anybody to run a model with capabilities similar to GPT-3 directly on a MacBook.

In conclusion, allow me to reiterate that using large language models to map academic metadata schemas represents a superior method compared with creating metadata schema crosswalks. Through the development of the Parrot-GPT Python package, we have unlocked seamless schema mapping, continuous adaptation to new schema properties, and the generation of metadata for novel fields. The results of this experiment serve as a testament to the many advantages of using LLMs for metadata schema mapping, making a compelling case for their adoption in the hallowed halls of academic information management systems.

The dawn of this new metadata mapping era, fueled by large language models, sparks immense enthusiasm. As we explore these innovative technologies more deeply, I'm eager for a future where academic information management is more connected and streamlined.

Originally published at https://kjgarza.github.io.

Files (823.9 kB)

10.59350-hs9k1-wn031.pdf

| Checksum | Size |
|---|---|
| md5:9137343d5cb7d6fadb5e7b8e626675dc | 12.3 kB |
| md5:a0d320a399170c99599f08800f5f2aca | 811.6 kB |

Additional details


Identifiers

- UUID: 89ac43b1-6cfd-4f81-9e43-509a904bba9e
- GUID: https://medium.com/p/434cceabc68b
- URL: https://medium.com/@kj.garza/parrotgpt-on-the-advantages-of-large-language-models-tools-for-academic-metadata-schema-mapping-434cceabc68b

Dates

- Issued: 2023-03-22T08:06:46
- Updated: 2023-03-22T08:06:46