Why open alone is not enough - Microsoft Academic discontinued & Semantic Scholar withdraws hosting of "Open access" papers

In the last month, two interesting developments caused quite a stir in my Twitter feeds (see discussions here and here).

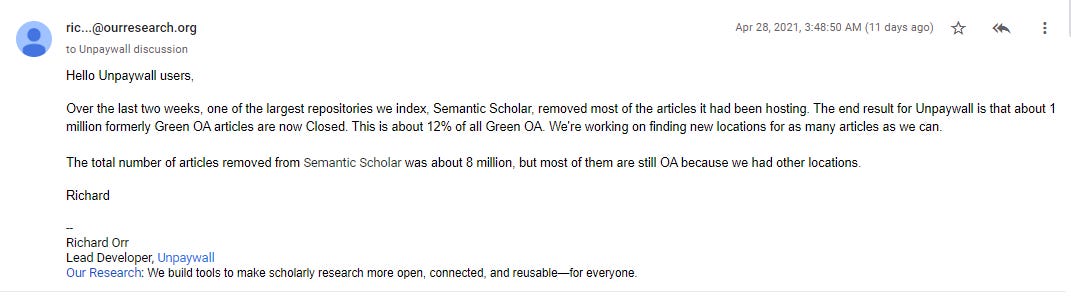

Firstly, there was an interesting announcement on the Unpaywall mailing list that Unpaywall had detected that Semantic Scholar, one of the biggest repository sources they tracked, had removed most of the articles it was hosting.

Unpaywall stated that Semantic Scholar removed over 8 million papers. At the time of the posting, Unpaywall had alternative locations for most of them, but there were still over 1 million papers for which it could not find alternatives, and hence 1 million papers that used to be classified as Green OA in Unpaywall were now closed.

Message by Unpaywall about removal of papers hosted on Semantic Scholar

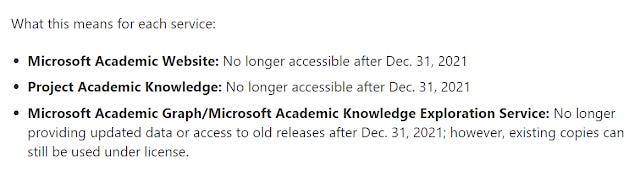

Secondly, this news was followed by an even bigger announcement by Microsoft Research that they were discontinuing Microsoft Academic.

In a somewhat confusingly titled blog post, "Next Steps for Microsoft Academic – Expanding into New Horizons", Microsoft Research announced that

"The automated AI powering MAS will be supported until the end of calendar year 2021, upon which time MAS will be retired."

This includes not just the Microsoft Academic search website but also the data underlying it, dubbed Microsoft Academic Graph (MAG).

While the seriousness of losing 1 million unique Green OA papers is easy to grasp, many might not be aware of the significance of Microsoft's announcement. While Microsoft Academic as a search engine did not have much success in making inroads into the hearts of researchers, the data it released under an open license, MAG, increasingly underlies more and more workflows and systems.

Some examples include:

- Search and citation index systems that blend multiple open sources, e.g. Lens.org, Scinapse and Semantic Scholar
- Miscellaneous systems that use MAG's affiliation data and/or citation counts among other information, e.g. Overton, Unsub, scite and Ex Libris Esploro

On the surface the two developments do not have much in common, beyond the fact that they are blows to the availability of open access papers and open scholarly metadata respectively.

However, one thing strikes me. Both the open access papers that were closed by Semantic Scholar (though, as you will see later, Semantic Scholar had reasons for closing them) and the MAG data that is being discontinued by Microsoft were available under open licenses. Yet this did not mean the lack of continuity did not hurt.

This of course points to the fact that while open licenses are good to have, they are not sufficient, and to the importance of having and investing in sustainable open infrastructure.

And this is why in recent years there has been increasing talk of investing in Open Infrastructure, not just to ensure continuity but also to ensure that such important scholarly infrastructure cannot be easily co-opted by commercial interests.

The day Unpaywall lost 1 million Green OA papers

Interesting announcement in @unpaywall mailing list today. 8 million "green oa" copies removed from Semantic Scholar one of the largest repositories they indexed. So far 1 million formerly Green OA articles are now closed representing 12% of all Green OA https://t.co/9DsqkrCBFn

— Aaron Tay (@aarontay) April 28, 2021

On April 28th, Richard Orr, a developer with Unpaywall, quietly announced on the mailing list that over the past few weeks they had detected Semantic Scholar removing most of the papers it used to host.

While repositories or even journals removing papers is not unheard of, this was not just any repository.

Unpaywall (which regular readers of this blog know is currently the de facto standard used to determine the open access status of papers) stated that Semantic Scholar was one of the biggest repositories of open access papers they were tracking and that this move removed over 8 million OA papers.

Of these 8 million, 7 million were available at alternative locations, which meant an overall loss of 1 million OA papers for which Unpaywall could not find alternative sources. As such, the status of these papers would move from Green OA to closed.

An alternative way of seeing this is to say that among the sources Unpaywall harvests from, including journals and repository sites, Semantic Scholar was hosting over 1 million unique copies!

Given that there are roughly 29 million plus unique OA papers tracked by Unpaywall, 1 million isn't totally insignificant.
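To put those figures side by side, here is a quick back-of-the-envelope calculation. The inputs are the rounded numbers quoted above (the announcement says "over 8 million" and the total is "roughly 29 million plus"), so treat the result as a rough estimate and not an exact Unpaywall statistic. The variable names are my own.

```python
# Rounded figures quoted in the Unpaywall announcement and in the text above.
removed_from_semantic_scholar = 8_000_000  # "over 8 million" copies removed
found_elsewhere = 7_000_000                # copies with an alternative location
total_oa_in_unpaywall = 29_000_000         # "roughly 29 million plus" OA papers

# Papers that flip from Green OA to closed because no other copy is known.
newly_closed = removed_from_semantic_scholar - found_elsewhere

# Share of everything Unpaywall counts as open access.
share_of_all_oa = newly_closed / total_oa_in_unpaywall

print(f"Newly closed papers: {newly_closed:,}")
print(f"Share of all OA papers tracked: {share_of_all_oa:.1%}")
```

With these rounded inputs the loss works out to a little over 3% of all OA papers tracked, while the tweet above puts it at 12% of Green OA specifically, which is why the removal stings more than the headline total suggests.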

Semantic Scholar is an unusual source

Semantic Scholar, by the Allen Institute for Artificial Intelligence, was launched in 2015 and in my experience still remains quite an obscure search engine to most researchers.

That said, a couple of years ago my colleague, who was the institutional repository manager, told me she knew about Semantic Scholar, basically because when she was googling for free copies of papers by our institution's authors she noticed many such copies hosted on Semantic Scholar.

At the time, Semantic Scholar was limited to the computer science discipline (which our institution is strong in), but nevertheless it did suggest to me that it seemed to host quite a bit of unique content.

You might think this is anecdotal, but a year ago I asked an innocent question about how much unique content each subject and institutional repository hosts on average, and a couple of researchers, including Cameron Neylon and Alberto Martín (see Martín's analysis based on somewhat old data scraped from Google Scholar), attempted to answer this question with the data they had at hand.

Cameron Neylon, while analyzing Unpaywall data dumps, found that leaving aside (Eu)PMC or arXiv, a substantial amount of unique content was hosted on Semantic Scholar.

Ok quick and dirty approach gives me this. Of roughly 15M objects found in repositories, roughly half are found in only one repo that is not (Eu)PMC or arxiv. Semantic scholar accounts for a lot of the remainder tho, not sure what to make of that. pic.twitter.com/r3eQWvxLKx

— CⓐmeronNeylon (@CameronNeylon) June 19, 2020
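To make the idea of "unique content" concrete, here is a minimal sketch of the kind of counting involved. This is not Cameron Neylon's actual method. It assumes you have already reduced a data dump to (DOI, repository host) pairs, and the DOIs, hostnames and function name below are all made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (DOI, repository host) pairs, one per hosted copy.
# A real analysis would derive these from an Unpaywall data dump instead.
copies = [
    ("10.1000/a", "semanticscholar.org"),
    ("10.1000/a", "arxiv.org"),
    ("10.1000/b", "semanticscholar.org"),
    ("10.1000/c", "europepmc.org"),
    ("10.1000/d", "repository.example.edu"),
    ("10.1000/d", "repository.example.edu"),  # duplicate copy on the same host
    ("10.1000/e", "semanticscholar.org"),
]


def unique_copies_by_host(copies):
    """Count, per host, the papers found on that host and nowhere else."""
    hosts_per_doi = defaultdict(set)
    for doi, host in copies:
        hosts_per_doi[doi].add(host)

    unique = Counter()
    for hosts in hosts_per_doi.values():
        if len(hosts) == 1:
            unique[next(iter(hosts))] += 1
    return unique


unique = unique_copies_by_host(copies)
for host, count in unique.most_common():
    print(host, count)
```

A paper counted here for a given host is exactly the kind of paper that flips to closed if that host stops serving it.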

This surprised some of the experts in the thread and led to a discussion with Richard Orr on how Semantic Scholar, while classified as a "repository" by Unpaywall, is in actual fact not a good fit for either the repository-hosted or the journal-hosted category.

Why did Semantic Scholar stop hosting papers?

Of course, the question on everyone's mind was why Semantic Scholar suddenly stopped hosting papers. Unpaywall confirmed that they were told enough to know it was not an accident, but didn't know why it was happening. (Privately, I was told this was happening as an aside in a private chat a month ago but didn't think much of it.)

This led to various speculations.

One thing to note is that Cameron Neylon's uniqueness analysis was based mostly on the Unpaywall corpus, and I believe this excludes data from ResearchGate, Academia.edu etc.

So one idea is that perhaps Semantic Scholar was accidentally hosting "Open Access" papers that should not have been made open and they were cleaning things up. For example, might they perhaps be accidentally hosting "Grey OA" papers harvested by/from ResearchGate?

Response from Semantic Scholar

Eventually Semantic Scholar did respond:

We're providing some additional context based on questions from the community. Again, thanks for all of your feedback and input. #OpenAccess pic.twitter.com/mp3CwhxJ9X

— Semantic Scholar (@SemanticScholar) April 30, 2021

Though they did not confirm the speculation about ResearchGate copies, the general idea that they were cleaning house by removing copies of papers they could not confirm had proper open licenses seemed to be sound. There are still unanswered questions, but for me at least the question of whether they were "pushed" to clean house or did it on their own doesn't quite matter.

Ultimately I liked the following comment from Roger Schonfeld on the importance of preservation strategies.

Amazing to imagine that as many as 1 million formerly green articles are no longer available open access. I hope preservationists will be giving thought to this as part of collection strategy. https://t.co/rmFZw8djQv

— Roger C. Schonfeld (@rschon) April 29, 2021

While in this particular case Semantic Scholar was probably justified in removing the content, we can imagine a scenario where a large or not so large source of Green OA or Gold OA papers collapses. Are we ready for this?

And indeed, in the Unpaywall mailing list discussion, the very first response, from Bryan, an Internet Archive staff member, noted that the Internet Archive had this covered.

He also added that they had mirrored corpus releases from big bibliometric data sources including:

- Unpaywall
- Semantic Scholar
- Microsoft Academic Graph
- JISC CORE
- Crossref, DataCite and more!

Bulk Bibliographic Metadata - Internet Archive Web Group

I don't do large scale bibliometric research, but this sure looks like a treasure trove of bibliometric metadata, as many of these organizations only keep the current snapshots of the data they release.

Microsoft Academic and Microsoft Academic Graph to be discontinued at the end of calendar year 2021

While my tweet on Unpaywall losing 1 million OA papers gathered some attention, my tweet on Microsoft Academic ending after December 2021 drew even more.

While some in my tweet chain thought this was inevitable, given how, like Semantic Scholar, Microsoft Academic as a search engine struggled to make an impression on most researchers, who preferred to stick to the excellent and tried and tested Google Scholar, most responding to my tweet lamented the news.

Of course, over the years my Twitter reach has grown organically to encompass bibliometricians, scholarly communication startup founders/consultants, Open Access advocates and yes, academic librarians who are well versed in scholarly communications and the value of metadata. Hardly the average researcher! But most of them got the implications of this news.

Why the loss of Microsoft Academic Graph will be a big blow to the cause of open scholarly metadata

While most academic researchers did not flock to use Microsoft Academic the search engine (though there are some fans), the comprehensiveness of the data (not quite rivalling Google Scholar but as close as anything you can get really, see analysis here and here), coupled with the open license, meant that an ecosystem sprang up around using the data, dubbed Microsoft Academic Graph, in myriad ways.

I've talked in the past few years about the rise of open scholarly metadata (earliest post here (2018), here in 2019 and here again in 2020) but I always had a sneaking feeling a big part of this rise is heavily dependent on Microsoft's unexpected move to make Microsoft Academic data open.

And yes, I have often wondered what happens when Microsoft stops supporting this, a reasonable question to ask since this is from/under Microsoft Research. I remember raising the question of sustainability to someone from Microsoft (education side) many years ago when they came to talk to me about perhaps using MAG data for our research metrics dashboards.

The answer I got doesn't matter anymore (something along the lines of it being a loss leader to get universities into their cloud platform), but it does point to the dangers of commercial control of such systems/workflows. But to be fair to Microsoft Research, they had sustained this for 5 years, and with open licenses no less!

What about other open sources?

Crossref as a source of open scholarly metadata has always been critical of course, and in recent months it has scored unusual successes in getting almost all big publishers, including Elsevier, to release the reference lists for their articles. I've even, somewhat grandiosely, called this the era of open citations in a blog post.

There is no doubt that the I4OC (Initiative for Open Citations) has succeeded far beyond most expectations in pushing publishers to open up reference lists of papers deposited in Crossref.

The OpenCitations organization has used this data to generate COCI, the OpenCitations Index of Crossref open DOI-to-DOI citations, and this, together with data extracted from life sciences sources like the Open Access portion of PMC, creates quite a formidable set of open citations.

Does this mean we don't need Microsoft Academic, for citations at least? Not quite. Firstly, while I4OC has publishers committing to making the references they deposit open, this does not imply that every article they mint DOIs for will have references deposited.

And even if this were the case, Microsoft Academic citations go far beyond just items with DOIs. They track citations of grey literature, in particular preprints. While Crossref has been minting DOIs for preprints, working papers etc. using the "posted content" type for some time now, it is still far from common practice, and Microsoft Academic Graph's citation linkages, like Google Scholar's, are just so much richer than Crossref's will ever be.

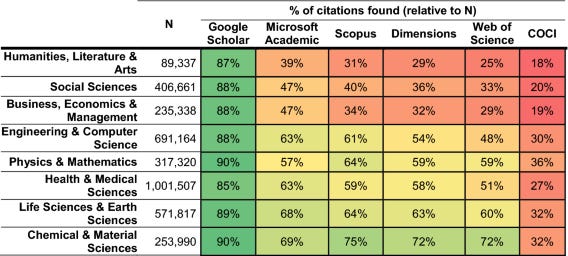

This is particularly the case in non-STEM fields. While the analysis below was done prior to Elsevier consenting to release reference lists, and COCI results will be much better today, it does show you the lead in coverage Google Scholar has over the other indexes in Humanities, Business and Social Sciences, and of the rest, Microsoft Academic fares marginally better.

And more importantly, analysis has shown there is not much of an overlap between MAG and other sources.

And if Crossref's citations pale next to MAG's, things look even worse for other metadata fields.

Sadly, the number of mandatory fields required to be deposited when doing content registration in Crossref is extremely minimal, basically Title, URL, DOI, ISSN (for the journal) etc and it is no surprise most publishers don't deposit more than the minimal requirements.

Let's take affiliations of authors. While Crossref has for a while allowed publishers to add the institutional affiliations of authors, this isn't mandatory and as such is rarely available via Crossref.

The other problem is that even if publishers bothered to deposit author affiliations, they would be just uncontrolled strings and you would need to clean them up.

There is of course the fledgling ROR (Research Organization Registry) that promises to solve this, and Crossref started supporting it in 2021, but this is a long way off from being universal and is unlikely to be retrospective.
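To illustrate what "minimal" metadata looks like in practice, here is a small sketch that checks a single record for the optional fields discussed above. The record is a made-up example shaped like the JSON I understand the Crossref REST API to return for a work (an `author` list whose entries carry an `affiliation` list, and an optional `reference` list). Treat those field names as assumptions to verify against Crossref's own documentation. The function name and sample values are hypothetical.

```python
def metadata_gaps(work):
    """Report which optional metadata a Crossref-style record is missing."""
    authors = work.get("author", [])
    return {
        # True when no reference list was deposited (key absent or empty).
        "no_references": not work.get("reference"),
        # Number of authors with no affiliation entries at all.
        "authors_without_affiliation": sum(
            1 for author in authors if not author.get("affiliation")
        ),
    }


# Made-up record for illustration only; not a real DOI or real people.
sample_work = {
    "DOI": "10.1000/example",
    "title": ["An example article"],
    "author": [
        {
            "given": "Ada",
            "family": "Example",
            # Free-text string: nothing ties it to a controlled identifier.
            "affiliation": [{"name": "Dept. of Computing, Example Univ."}],
        },
        {"given": "Ben", "family": "Sample", "affiliation": []},
    ],
    # No "reference" key: the publisher did not deposit a reference list.
}

gaps = metadata_gaps(sample_work)
print(gaps)
```

Even where an affiliation is present, it is only a string, so matching "Example Univ." to "Example University" across millions of records is still left to whoever consumes the data.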

True, their affiliation/disambiguation data is very valuable. GROBID + CERMINE do a good job of extracting affiliation data from papers and linking to each author in the paper, but linking and disambiguating across millions of papers ... I wouldn't want to do that from scratch!

— Phil Gooch 💙 (@Phil_Gooch) May 6, 2021

Crossref data is perhaps more complete when it comes to authors, but the lack of author disambiguation (ORCID is increasingly a solution but still far from universal) means quality suffers again.

And that's the thing about MAG data: beyond the fact that its crawling of the web gives it a scope and range (basically all research output, even items without DOIs) that Crossref and similar organizations cannot match, it does a ton of preprocessing, entity recognition and disambiguation to make the data clean and useful. While you can be skeptical about the quality that results from purely ML methods compared to long established citation indexes like Scopus or Web of Science (which, to be fair, have the advantage of a ton of human error checking), it definitely is better than nothing.

From using deep learning to disambiguate and rank the importance of authors, venues, institutions and concepts, to creating a universal cross-discipline controlled vocabulary, Field of Study, there is nothing out there that comes close to substituting for Microsoft Academic Graph data.

Take the list of options listed by Microsoft Academic as alternatives. Of them:

- AMiner: does a lot of interesting processing, but the dataset is relatively small and discipline specific?
- Scopus: is proprietary data and doesn't compare in terms of scope
- Lens.org: uses MAG as a major source of data
- Semantic Scholar: has a partnership with MAG. It's unclear to me how much Semantic Scholar benefits from this (it has other publisher data partners too), but when this was announced in late 2018, the number of papers covered by Semantic Scholar surged from 40 million to 175 million items!

As for the rest, we already talked about how OpenCitations relies on a combination of Crossref (COCI) and PMC. Dimensions also relies on OpenCitations, though it has its own publisher partnerships to further supplement its data, but it is proprietary data like Scopus.

Systems and workflows affected by discontinuation of MAG

All this sounds a bit abstract, so let's talk systems.

First off, I've blogged about "The rise of the "open" discovery indexes?" and noted that the easy availability of open scholarly metadata has made it easier to create new citation indexes that blend data from multiple sources.

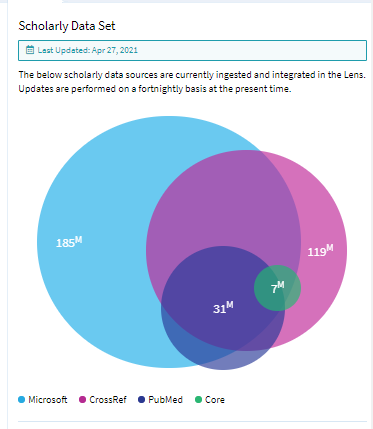

While Crossref data is a significant source, Microsoft Academic Graph is as significant if not more so. For example, it is used in Lens.org, Scinapse and Semantic Scholar.

Sources making up Lens.org , Scinapse and Semantic Scholar

Still, how significant is MAG compared to other sources in such indexes? Here's Lens.org's visualization of the data from its major sources: Crossref, Microsoft, PubMed and CORE.

Lens.org visualization of overlaps between their major sources of Scholarly work

As you can see from the visualization above, Microsoft Academic is by far the biggest source of "Scholarly works" and there is a lot that does not overlap with other sources.
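The overlap picture boils down to set arithmetic, which can be sketched in a few lines. The record identifiers below are toy data I invented to show the calculation. They are not Lens.org's actual counts, and the function name is my own.

```python
# Hypothetical toy data: record identifiers seen in each source.
sources = {
    "Microsoft Academic": {"w1", "w2", "w3", "w4", "w5", "w6"},
    "Crossref": {"w1", "w2", "w7"},
    "PubMed": {"w2", "w3"},
    "CORE": {"w4", "w8"},
}


def unique_to_each(sources):
    """For each source, return the records that no other source contains."""
    result = {}
    for name, records in sources.items():
        # Union of every other source's records.
        others = set().union(*(r for n, r in sources.items() if n != name))
        result[name] = records - others
    return result


only_in = unique_to_each(sources)
for name, records in only_in.items():
    print(f"{name}: {len(records)} of {len(sources[name])} records found nowhere else")
```

Whatever lands in the "found nowhere else" bucket for Microsoft Academic is the part of an index that has no obvious replacement once MAG stops being updated.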

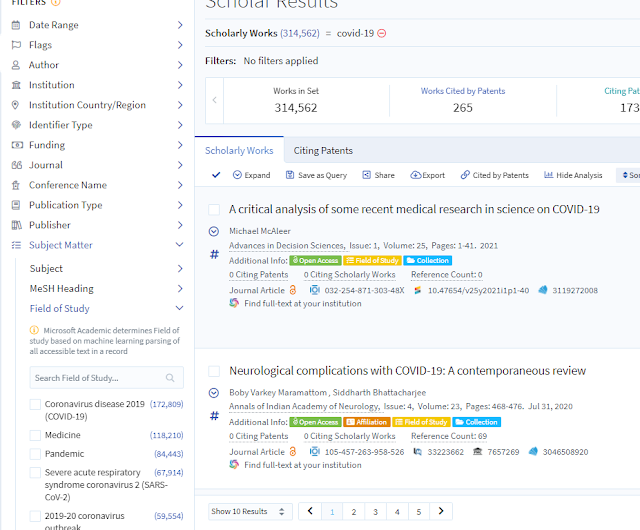

One of the greatest strengths of Lens.org is that it has very advanced and granular filters, coupled with state of the art visualization.

Nice granular filters in Lens.org, how will the loss of MAG affect Lens?

This takes advantage of the fact that Lens.org combines a lot of granular and comprehensive data from various sources, in particular affiliations and subjects.

One of the more common things I use Lens.org for is to analyze output by affiliations (whether via a dashboard or other means), and I suspect this will become much less useful if MAG is not available, as the lack of MAG will make filtering and visualization by affiliations less accurate, if not impossible.

The example dashboard below, for example, uses MAG's Field of Study to classify by subject the papers authored by my institution.

Lens - Dashboard of OA status by "subject" (MAG's Field of study)

Also, one of the techniques I currently use to identify review papers, systematic reviews etc. involves using 2D search templates to search Lens.org. This relies partly on the excellent "Field of Study" from MAG.

Some Literature mapping tools will have to scramble for alternative sources

Another class of tools that has been enabled by the rise of open metadata is what I call Literature mapping tools.

I've written on them in various blog posts:

- 3 new tools to try for Literature mapping — Connected Papers, Inciteful and Litmaps
- Navigating the literature review - metaphors for tasks and tools - How do emerging tools fit in?

All these tools are only possible because of the open Scholarly metadata that is available, in particular open citations.

I'm not the only one to have noticed the explosion of tools and methods due to the easy availability of open scholarly metadata. The LIS-Bibliometrics Conference 2021 had as its theme "New tools and new technologies: bibliometrics in action for a new decade".

Given the news that Microsoft Academic is shutting down, I'm looking to switch data sources. The two main contenders right now are @TheLensOrg and @SemanticScholar does anyone have any experience with either or both? https://t.co/Sz8o60VoGY

— Inciteful (@Inciteful_xyz) May 6, 2021

How will the loss of Microsoft Academic Graph affect such tools? I have a table listing a dozen such tools in my List of Innovative Literature mapping tools, which includes the sources used.

The following listed use Microsoft Academic graph directly

The following listed use the corpus released by Semantic Scholar and will not need to do anything. However, as mentioned, Semantic Scholar has a data partnership with MAG and going forward its coverage might be lessened.

Relatively few of these tools use Lens.org besides Citation Chaser (though it seems Lens has a policy of providing free API usage in free tools so this number might change) but similar to Semantic Scholar, quantity and quality of Lens.org data will probably be diminished once MAG is no longer updated.

Lastly, as I mentioned, besides the many bibliometrics projects that use MAG data (for example, this institutional repository manager was using Microsoft Academic data for citation counts), MAG is amazingly useful for systems that need to tailor their services to institutions.

It gives you a free and open source of metadata that allows you to identify papers by institutional affiliation, which can be used to further refine the data or service you provide. This, coupled with citation counts that are based on one of the largest cross-disciplinary citation indexes that isn't Google Scholar, means it is used far more than most would expect.

For example, the Unsub service, which helps institutions make decisions on which bundles of journal titles to renew or cancel, relies on knowing how much of each title is already open access. But to provide a better projection it needs to take into account other data such as journal information and particular facts about each institution, such as authorships, and Microsoft Academic Graph was by far the best source for this.

Similarly, tools like scite, Overton, Esploro and probably many more also use MAG for similar use cases (either affiliations or citation counts).

Reactions and Reflections

This is actually quite depressing. There is no guarantee any of the similar systems won't just suddenly disappear! How will we respond as a research community? https://t.co/QiNFyqx6WE

— Chun-Kai (Karl) Huang (@CK_Karl_Huang) May 6, 2021

Again, we see an example of a case where data being given an open license isn't sufficient. To be fair, given that MAG has an open license, systems like Lens.org and the various literature mapping tools that rely on it can continue to use the released data even after Dec 2021, and their systems will still work reasonably well, at least at first.

Unfortunate, considering that their data was powering an increasing number of tools. We really need sustainable research metadata! https://t.co/lu5PEBGtDT

— Alberto Martín (@albertomartin) May 6, 2021

However, with no new updates coming from Microsoft, the data will start to slowly degrade and coverage of newer articles will become less complete, even in systems like Lens.org that also use alternative sources like Crossref and PubMed/PMC.

While it is an exaggeration to say that open citations or open scholarly metadata is dead, it cannot be denied, I think, that the loss of MAG is going to be a fairly big blow at first.

But there is a silver lining. Bianca Kramer says it best here.

MAG has demonstrated the value of (largely) openly available metadata - many other organizations could and did build on and (re)use these data.

Pulling the available data together remains challenging. Do we value this enough to support community-governed open infrastructure? https://t.co/BE9uYHOruL— Bianca Kramer (@MsPhelps) May 6, 2021

Now that Microsoft Academic Graph data has shown us the value and potential of such data, perhaps it is time we seriously start to fund this in a sustainable way?

Could more community/government based projects like OpenAIRE or Internet Archive's Fatcat help fill the void?

A new hope?

As I write this, news is just filtering out that OurResearch (formerly Impactstory), famed for running the Unpaywall service, has stated its intention to build a "replacement for Microsoft Academic Graph"!

As they note, their Unsub service uses data from Microsoft Academic Graph, but they had a contingency plan if MAG went down, and in fact it is something they have "been working on for a while now".

They acknowledge the work is only doable by building on the work of others, so this is likely going to be an aggregation of a large amount of open scholarly data + their own crawlers.

This is will only be possible because of the hard work already done by others who make their data open: @CrossrefOrg, @ResearchOrgs, @opencitations, @orcid, so many more... and of course @MSFTAcademic. Building on each other's shoulders again!#OpenScience #OpenData https://t.co/ivhGT9mhw2

— Our Research (@our_research) May 8, 2021

Similar to all their projects such as Unpaywall, the project will be open source, data will be available via APIs and data dumps.

Given the credibility OurResearch has built up in the scholarly communications ecosystem, this looks really promising.

They have an ambitious plan to launch by the end of the year, but warn that it will not be a 'perfect replacement', which is fair, given they are unlikely to have the resources or experience of Microsoft Research, at least at first.

They have asked for feedback about use cases and I highly encourage anyone who has used MAG to some degree to do the survey.

As it stands, this replacement, which at the time of writing has no name yet, is likely going to focus on how MAG is used in Unsub, which is probably citation relationships, authors/affiliations and journal deduplication/normalization.

Additional details

Identifiers

- UUID: 44cadd07-58d7-4a14-8582-54f9925a9a0e
- GUID: 164998207
- URL: https://aarontay.substack.com/p/why-open-alone-is-not-enough-microsoft

Dates

- Issued: 2021-05-09T19:16:00
- Updated: 2021-05-09T19:16:00