My favourite explainers of Transformers, LLMs and related technology

Though my blog focuses on academic discovery and retrieval, these days you can't really understand those topics without grappling with concepts like Transformer models, agents, and reasoning.

Obviously, keeping up at the deepest technical level is impossible—at least for people like me! But I do strive for a general understanding: how these models work, how they're trained, and what the implications are.

I find that diving straight into technical papers on arXiv can be very daunting, especially without any formal background in neural networks or deep learning.

Over time, I've become more comfortable reading preprints from the information retrieval side, but even then, my understanding is far from perfect, and I struggle a bit when it comes to the details of training.

Fortunately, over the years, I've discovered a few fantastic "teachers" who explain these complex topics in a way that's accessible, engaging, and often beautifully visualized. I wanted to share some of my favorites here.

1. Jay Alammar & Maarten Grootendorst

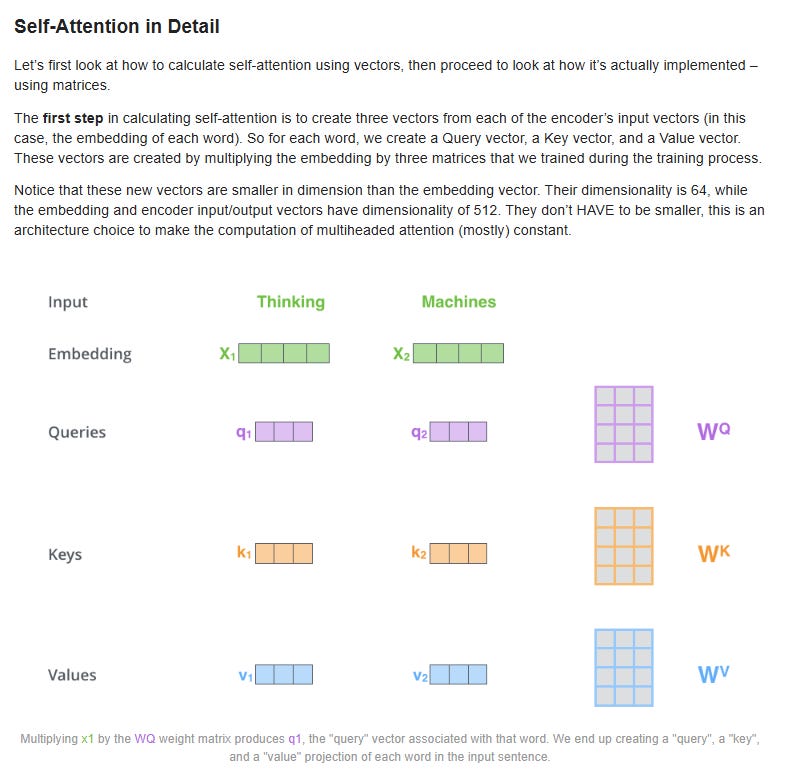

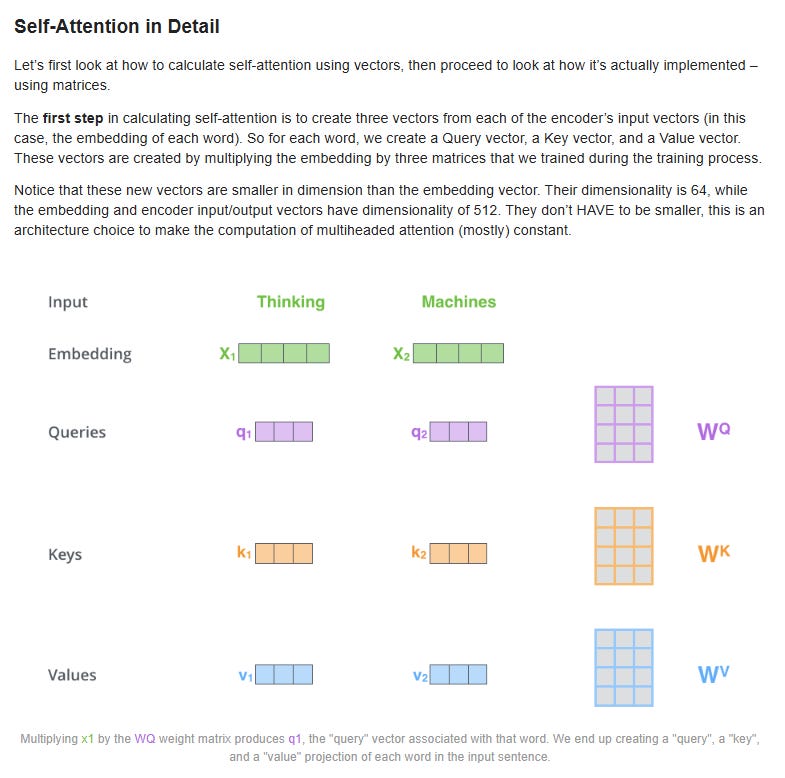

I still remember that back in 2020/2021, when GPT-3 was making waves, I was trying to wrap my head around Transformers (and even more basic neural models like Word2Vec). That's when I stumbled upon Jay Alammar's The Illustrated Transformer.

It's a classic now: an amazing attempt to break down how Transformers work, with striking and intuitive visuals. I won't pretend I understood it all right away (and, to be honest, some details remain hazy even now), but it was a fantastic starting point.

Explaining self-attention in The Illustrated Transformer

Since then, Jay and Maarten Grootendorst have teamed up to release a book: Hands-On Large Language Models.

If you're a librarian looking to really get to grips with the basics of LLMs, I can't recommend it enough. It's not just theory—starting from Chapter 4, they get hands-on with real-world applications (text classification, semantic search, etc.), and later chapters cover advanced use cases like fine-tuning.

Of course, the field keeps evolving, but both Jay and Maarten continue to publish fantastic, visual explainers on their respective Substacks. Topics range from agents to reasoning models, always with that same approachable style.

Jay Alammar: Language Models & Co (and his original blog)

Sample post: The Illustrated DeepSeek-R1

Maarten Grootendorst: Exploring Language Models

Sample post: A Visual Guide to Reasoning LLMs

2. Cameron R. Wolfe

I don't recall exactly when I first came across Cameron Wolfe, but he has become one of my go-to sources for clear, in-depth explainers and roundups.

Currently a Senior ML Scientist at Netflix, Cameron covers a vast range of topics, including Mixture-of-Experts (MoE) models, reasoning models, supervised fine-tuning (SFT) of LLMs, and even fundamentals like decoder architectures. His style is similar to Jay's, but he tends to go deeper, incorporating more formulas and citations from the latest research.

He also dedicates significant time to discussing how to train and deploy LLMs in industrial settings, covering concepts such as RLHF and PPO, as well as the basics of reinforcement learning for LLMs.

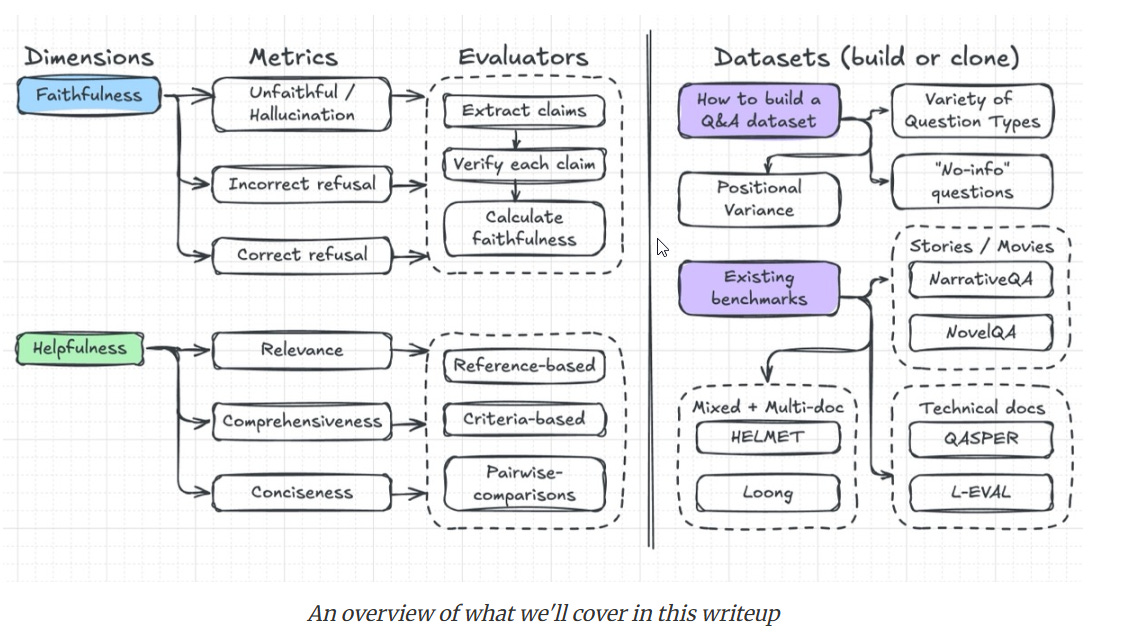

Another distinctive feature is that his articles almost always include a large figure or illustration that explains the concept in some depth. For example, here is the one from Finetuning LLM Judges for Evaluation:

Finetuning LLM Judges for Evaluation

He, too, has a Substack:

Cameron R. Wolfe: Deep Learning Focus

Sample post: Finetuning LLM Judges for Evaluation

3. Eugene Yan

I probably discovered Eugene Yan through Twitter. His work is on par with Cameron's, to the point that I sometimes confuse their posts. His writing is technical and practical, which fits his current role as a Principal Applied Scientist at Amazon.

Evaluating Long-Context Question & Answer Systems

While Eugene doesn't publish as many explainers as some of the others on this list, they are excellent when he does. You can find his writings on his website.

Website: https://eugeneyan.com/

Sample post: Evaluating Long-Context Question & Answer Systems

4. Sebastian Raschka

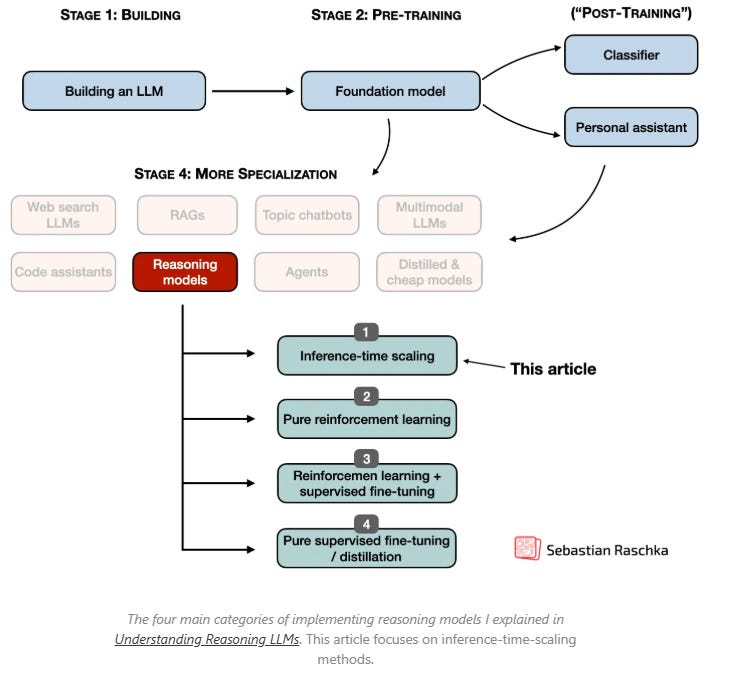

A more recent discovery for me on Substack is Sebastian Raschka, an LLM Research Engineer with over a decade of experience in artificial intelligence.

I was particularly drawn in by his piece, The State of LLM Reasoning Model Inference.

He is also on Substack:

Sebastian Raschka: Ahead of AI

Sample post: The State of LLM Reasoning Model Inference

Conclusion

My understanding of LLMs and Transformer models is admittedly incomplete and likely flawed, but it would be much worse without the opportunity to learn from these five authors.

The great thing is that these authors often cover the same topics, such as agents, reasoning models, and DeepSeek (albeit to different depths), providing an opportunity to revisit subjects from multiple angles. This can lead to a richer understanding, as they may focus on different details or even present contrasting views. After all, these are cutting-edge topics, and even experts can disagree.

This brings up an important question: as a librarian or researcher who just wants to use AI to aid your research, do you need to know this much?

I can't answer that for you, as your goals may differ from mine. Personally, I have a genuine desire to understand things as deeply as I can, even if much of what I learn doesn't have immediate practical applications.

Additional details


Identifiers

- UUID: 3a39274a-a108-4048-9ae2-adc1951205f4
- GUID: 168690345
- URL: https://aarontay.substack.com/p/my-favourite-explainers-of-transformers

Dates

- Issued: 2025-07-21T18:25:51
- Updated: 2025-07-21T18:25:51