NLU vs. NLG: Unveiling the Two Sides of Natural Language Processing

Understanding the Power and Applications of Natural Language Processing

Author

- Dhruv Gupta (ORCID: 0009-0004-7109-5403)

Introduction

We are living in the era of generative AI, an era in which you can ask AI models almost anything and they will almost certainly have an answer. As computational power and the amount of available textual data grow, these models are bound to keep improving. However, large language models (LLMs) are often confused with natural language processing (NLP) as a whole, which is incorrect: LLMs are only one application within the much broader field of NLP. With the growing prevalence of LLMs, it is important to understand what NLP is. In this article, we look at what NLP is and what it is not, along with its various applications and its evolution.

What is Natural Language Processing (NLP)?

Natural language processing (NLP), as the name suggests, is an attempt to make computers understand and manipulate human language. The idea of NLP emerged in the 1950s and has evolved significantly since then. It encompasses a set of algorithms that help machines understand, manipulate, and generate human language, with applications ranging from basic spell-checking software and chatbots to large natural language generation tools.

However, it is not that simple. Every human language is full of ambiguities that make it extremely difficult to write NLP rules for it. Homonyms, homophones, touches of sarcasm and other variations in sentence structure make it difficult for programmers to create natural-language software. In addition, in the early days of NLP, textual data was limited and computational power was negligible compared with what we have today. Nevertheless, programmers could already see the potential of NLP and its vast array of applications. Some of these applications are:

- Speech Recognition: It is the process of converting speech into text. What makes speech recognition challenging is that everyone speaks differently, with their own accent and slang.

- Part-of-Speech (POS) Tagging: It is the process of assigning a grammatical category to each word in a sentence, such as noun, verb or adverb.

- Named Entity Recognition (NER): It is the process of identifying meaningful words in a text and tagging them as entities. For example, words such as India and USA are tagged as locations, and words like Fred are tagged as person names.

- Natural Language Generation: It is the process of producing human-language text from a prompt or other input. All the LLMs used for generative AI fall into this category.
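
The NER example above can be illustrated with the simplest possible approach, a gazetteer (a dictionary of known names). This is a minimal sketch under stated assumptions: the `GAZETTEER` table and the `tag_entities` function are hypothetical, and real NER systems learn from context rather than relying on a fixed list.

```python
import re

# Hypothetical gazetteer: a hand-built lookup table from names to entity types.
GAZETTEER = {
    "India": "LOCATION",
    "USA": "LOCATION",
    "Fred": "PERSON",
}

def tag_entities(text: str) -> list[tuple[str, str]]:
    """Return (token, entity_type) pairs for tokens found in the gazetteer."""
    # Split the text into word tokens, dropping punctuation.
    tokens = re.findall(r"\w+", text)
    return [(tok, GAZETTEER[tok]) for tok in tokens if tok in GAZETTEER]

print(tag_entities("Fred flew from India to the USA."))
# → [('Fred', 'PERSON'), ('India', 'LOCATION'), ('USA', 'LOCATION')]
```

A lookup table like this cannot tag names it has never seen, which is why practical NER relies on models trained on annotated text.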

Although these applications rely on different NLP technologies, they can be broadly classified into two major categories: Natural Language Understanding (NLU) and Natural Language Generation (NLG).

What is Natural Language Understanding (NLU)?

Natural Language Understanding (NLU) is the subset of NLP that focuses on understanding the meaning of a sentence through syntactic and semantic analysis of the text. Syntactic analysis deals with the grammatical structure of the sentence, whereas semantic analysis focuses on the actual meaning of each word.

Human language is complicated. It is full of complex, subtle and ever-changing meanings, which make it hard for computers to grasp. NLU systems make it possible for organisations to create products and tools that understand not only the words but also the meaning behind them. For example, consider these two sentences:

- Arpit loves dogs.

- Arpit loves chicken.

In the second sentence, Arpit most probably loves to eat chicken, whereas in the first, Arpit loves to be around dogs. The meaning of the word "loves" is therefore ambiguous and depends on its context, and it is the job of the NLU system to resolve that ambiguity.
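
A toy, rule-based way to resolve this ambiguity is to choose the sense of "loves" from the semantic category of its object. The sketch below assumes a hand-written lexicon (`NOUN_CATEGORY` and `LOVES_SENSES` are hypothetical) and simply hard-codes "chicken" as food, which is the kind of guess a real NLU system would instead learn statistically from large amounts of text. It illustrates word-sense disambiguation in principle, not how production systems implement it.

```python
# Hypothetical mini-lexicon mapping nouns to a coarse semantic category.
NOUN_CATEGORY = {
    "dogs": "animal",
    "cats": "animal",
    "chicken": "food",  # hard-coded guess; "chicken" could also be an animal
    "pizza": "food",
}

# Hypothetical sense inventory for "loves", keyed by the object's category.
LOVES_SENSES = {
    "animal": "enjoys being around",
    "food": "enjoys eating",
}

def interpret_loves(sentence: str) -> str:
    """Paraphrase 'X loves Y' by picking a sense of 'loves' from Y's category."""
    words = sentence.rstrip(".").split()
    i = words.index("loves")  # assumes the sentence contains the word 'loves'
    subject = " ".join(words[:i])
    obj = words[i + 1]
    category = NOUN_CATEGORY.get(obj.lower())
    # Fall back to the literal verb when the object is not in the lexicon.
    sense = LOVES_SENSES.get(category, "loves")
    return f"{subject} {sense} {obj}"

print(interpret_loves("Arpit loves dogs."))     # Arpit enjoys being around dogs
print(interpret_loves("Arpit loves chicken."))  # Arpit enjoys eating chicken
```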

How does natural language understanding work?

NLU rests on two fundamental concepts: intent recognition and entity recognition. Intent recognition focuses on determining the user's intention, and often their sentiment, which allows the model to understand the purpose of the text. The second concept, entity recognition, focuses on identifying the entities involved in the sentence and the relationships between them. There are broadly two types of entities: named entities (such as people and places) and numeric entities (such as quantities and amounts).
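
To make the two concepts concrete, here is a minimal sketch that pairs a keyword-overlap intent recogniser with a regular-expression extractor for numeric entities. The intent names, keyword sets and both functions are hypothetical, and keyword matching is a deliberately simple stand-in for the trained classifiers that real NLU systems use.

```python
import re

# Hypothetical intents, each described by a few trigger keywords.
INTENT_KEYWORDS = {
    "book_flight": {"book", "flight", "fly"},
    "check_weather": {"weather", "rain", "forecast"},
}

def recognise_intent(text: str) -> str:
    """Pick the intent whose keywords overlap most with the input words."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # Report "unknown" when no keyword matched at all.
    return best if scores[best] > 0 else "unknown"

def extract_numeric_entities(text: str) -> list[float]:
    """Extract numeric entities (integers or decimals) with a regular expression."""
    return [float(m) for m in re.findall(r"\d+(?:\.\d+)?", text)]

query = "Book a flight for 2 people under 450.50 dollars"
print(recognise_intent(query))          # book_flight
print(extract_numeric_entities(query))  # [2.0, 450.5]
```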

In practice, when an NLU system receives input, it first splits it into tokens (typically individual words). These tokens are looked up in a dictionary that identifies their possible parts of speech, and are then analysed for their grammatical structure, including the role each one plays and any possible ambiguities.
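
The tokenise-then-look-up step described above might be sketched as follows. `POS_DICTIONARY` is a hypothetical hand-written lexicon that uses Universal Dependencies-style tag names (PROPN, VERB, NOUN and so on); the sketch stops at listing candidate tags, whereas a real system would go on to use grammatical context to choose between them.

```python
import re

# Hypothetical part-of-speech dictionary; a word may have several possible tags.
POS_DICTIONARY = {
    "arpit": ["PROPN"],
    "loves": ["VERB", "NOUN"],  # "loves" can also be a plural noun
    "dogs": ["NOUN", "VERB"],   # "dogs" can also be a verb ("the problem dogs him")
    "the": ["DET"],
    "quickly": ["ADV"],
}

def tokenise(text: str) -> list[str]:
    """Split text into lowercase word tokens, discarding punctuation."""
    return re.findall(r"[a-z]+", text.lower())

def lookup_pos(tokens: list[str]) -> list[tuple[str, list[str]]]:
    """Attach every candidate tag; "UNK" is a placeholder for unknown words."""
    return [(tok, POS_DICTIONARY.get(tok, ["UNK"])) for tok in tokens]

for token, tags in lookup_pos(tokenise("Arpit loves dogs.")):
    # More than one candidate tag marks an ambiguity for later analysis to resolve.
    status = "ambiguous" if len(tags) > 1 else "unambiguous"
    print(token, tags, status)
```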

Applications of natural language understanding

NLU plays a pivotal role in NLP. It has a vast array of applications in our daily lives. Some of these applications are:

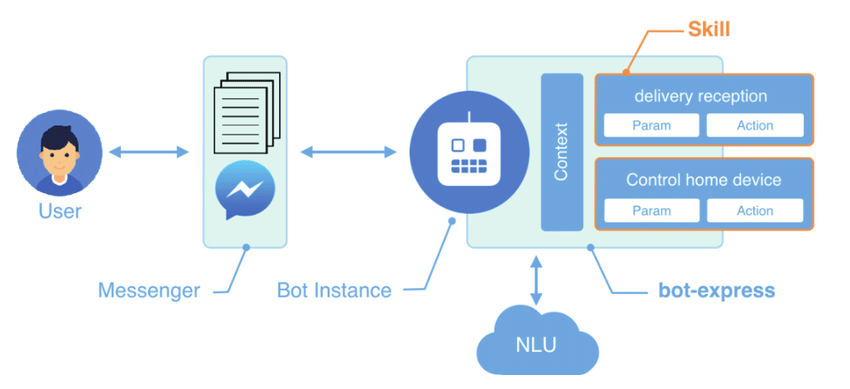

- Chatbots: NLU is the key technology behind chatbots. FAQ chatbots, for example, rely on NLU to match a user's question to the right answer. In the backend, they combine several layers of NLU technology, such as feature extraction and classification, entity linking and knowledge management.

- User Sentiment and Intent: Large organisations use NLU-based technologies to analyse customer sentiment and intent. For example, social media companies use sentiment analysis to analyse the comments on a post, which helps them reduce hateful and trolling comments.

- Email Filters: Email filters are one of the earliest and most basic applications of NLU. They started out as spam filters but have since become far more capable: most email service providers can now sort emails into categories such as primary, social and promotions.
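
Spam filtering is a classic use case for a Naive Bayes classifier, one common (though not the only) technique behind email filters. The sketch below is a minimal multinomial Naive Bayes with add-one smoothing; the `NaiveBayesFilter` class and the four training emails are hypothetical. Because it loops over whatever labels it was trained on, the same code can sort emails into more than two categories, such as primary, social and promotions.

```python
import math
import re
from collections import Counter

def tokenise(text: str) -> list[str]:
    """Lowercase word tokens, punctuation removed."""
    return re.findall(r"[a-z]+", text.lower())

class NaiveBayesFilter:
    """Multinomial Naive Bayes text classifier with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}  # label -> word frequencies
        self.doc_counts: Counter = Counter()       # label -> number of emails
        self.vocab: set[str] = set()

    def train(self, text: str, label: str) -> None:
        words = tokenise(text)
        self.word_counts.setdefault(label, Counter()).update(words)
        self.doc_counts[label] += 1
        self.vocab.update(words)

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # Log prior: fraction of training emails carrying this label.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(counts.values())
            for word in tokenise(text):
                # Add-one smoothing so unseen words do not zero out the probability.
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

nb = NaiveBayesFilter()
# Hypothetical toy training set.
for text, label in [
    ("win a free prize now", "spam"),
    ("claim your free money now", "spam"),
    ("meeting agenda for tomorrow", "primary"),
    ("please review the project report", "primary"),
]:
    nb.train(text, label)

print(nb.predict("free prize money"))          # spam
print(nb.predict("project meeting tomorrow"))  # primary
```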

What is Natural Language Generation (NLG)?

Natural Language Generation (NLG), as the name suggests, enables computer systems to write. It focuses on generating a human-language text response from some input data. Early NLG systems relied on a fixed set of templates: given some input data or a query, they filled in a template, so the generated text always followed a predictable pattern. However, with the growth in computational power and available textual data, and with new deep learning technologies coming to the forefront, NLG models have become very powerful.
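
The template approach can be illustrated with a minimal sketch using Python's standard-library `string.Template`. The weather template, the `generate_report` function and the input fields are hypothetical; the point is that the system only fills in slots.

```python
from string import Template

# Hypothetical fixed template of the kind early NLG systems filled in.
WEATHER_TEMPLATE = Template(
    "The weather in $city today will be $condition, with a high of ${high}°C."
)

def generate_report(data: dict[str, object]) -> str:
    """Fill the template's slots with values from the input data."""
    return WEATHER_TEMPLATE.substitute(data)

print(generate_report({"city": "Delhi", "condition": "sunny", "high": 34}))
print(generate_report({"city": "London", "condition": "rainy", "high": 12}))
```

Every output shares the same sentence skeleton, which is exactly the "typical pattern" limitation of template-based systems.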

How does natural language generation work?

When we ask a generative model to create a piece of text, it can seem like a magic trick. In reality, several natural language techniques are working together to generate the response. These include:

- Language Model: This is the machine learning component of an NLG system. In modern systems, this part of the pipeline is an LLM that has been trained on vast amounts of text and has learnt many nuances of the language, which enables it to generate the required text.

- Natural Language Understanding: The NLU component helps the system comprehend both the input and the output, making sure that the generated text is coherent, accurate and makes sense.

- Natural Language Processing: The NLP component breaks down the input, identifies parts of speech and analyses the syntax and the dependencies between words.
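
The language-model component can be illustrated, at a vastly smaller scale, with a bigram model: it records which word follows which, then generates text by repeatedly sampling the next word. This is a toy stand-in, not how LLMs are built. LLMs are neural networks trained on far more text, although autoregressive LLMs share the same basic loop of predicting the next token from what came before. The three-sentence corpus and both functions are hypothetical.

```python
import random
from collections import defaultdict
from typing import Optional

def train_bigram_model(corpus: list[str]) -> dict[str, list[str]]:
    """Map each word to the list of words observed right after it."""
    model: dict[str, list[str]] = defaultdict(list)
    for sentence in corpus:
        # <s> and </s> mark the start and end of each sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            model[prev].append(nxt)
    return model

def generate(model: dict[str, list[str]], max_words: int = 20,
             seed: Optional[int] = None) -> str:
    """Sample words one at a time until the end marker or max_words."""
    rng = random.Random(seed)
    word, output = "<s>", []
    for _ in range(max_words):
        # Choosing from the follower list samples in proportion to bigram frequency.
        word = rng.choice(model[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

corpus = ["Arpit loves dogs", "Arpit loves chicken", "dogs love Arpit"]
model = train_bigram_model(corpus)
print(generate(model, seed=42))
```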

Applications of natural language generation

With the evolution of generative AI, NLG has found many applications. Some of these applications are as follows:

- Content Generation: NLG models can help with content generation, producing anything from a short paragraph or a social media post to a summary of a long text. Many large companies are releasing new content generation models that have shown impressive performance.

- Data Analysis: Large data science companies are using NLG models to analyse and summarise large datasets. Many of the newer NLG models handle numerical data well, which allows them to produce accurate reports.

- Automated Reporting: NLG can turn raw data into clear, concise and insightful reports, allowing analysts to use the content generation power of NLG models to automate routine report writing.

- Healthcare: With growing volumes of healthcare data and increasing workloads on doctors, NLG models can be used to help draft personalised treatment plans for patients. Such automation could not only reduce the workload of doctors and medical staff but also speed up the process.
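
Automated reporting is often framed as data-to-text generation: the system first computes facts from raw data and then decides how to phrase them. Here is a minimal sketch; the `sales_report` function, the ±5% thresholds and the sales figures are all hypothetical choices for illustration. Unlike a fixed template, the wording here changes with what the data shows.

```python
from statistics import mean

def sales_report(region: str, monthly_sales: list[float]) -> str:
    """Turn raw monthly figures into a short narrative summary."""
    first, last = monthly_sales[0], monthly_sales[-1]
    change = (last - first) / first * 100  # percentage change, first to last month
    # Content determination: choose the phrasing from the data itself.
    if change > 5:
        trend = f"rose by {change:.1f}%"
    elif change < -5:
        trend = f"fell by {abs(change):.1f}%"
    else:
        trend = "remained broadly stable"
    best_month = monthly_sales.index(max(monthly_sales)) + 1  # 1-based month number
    return (
        f"Sales in {region} {trend} over {len(monthly_sales)} months, "
        f"averaging {mean(monthly_sales):.1f} units per month. "
        f"The strongest month was month {best_month}."
    )

print(sales_report("North", [120, 130, 150, 160]))
# → Sales in North rose by 33.3% over 4 months, averaging 140.0 units per month. The strongest month was month 4.
```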

Conclusion

In summary, Natural Language Processing is the field concerned with enabling computers to process human language. NLP technology can be classified into two broad categories: natural language understanding and natural language generation. Natural language understanding focuses on understanding the structure and meaning of text, whereas natural language generation builds on natural language understanding to generate and create content. LLMs are one application of natural language generation, and one that is now in widespread use.

Additional details

Identifiers

- UUID: d462cb01-6e96-47f2-9ae8-03e2eac76d3d

- GUID: https://medium.com/p/f58aeeb908df

- URL: https://medium.com/@researchgraph/natural-language-processing-f58aeeb908df

Dates

- Issued: 2024-04-02T01:42:52

- Updated: 2024-04-02T01:42:52