Google Bard - a first impression

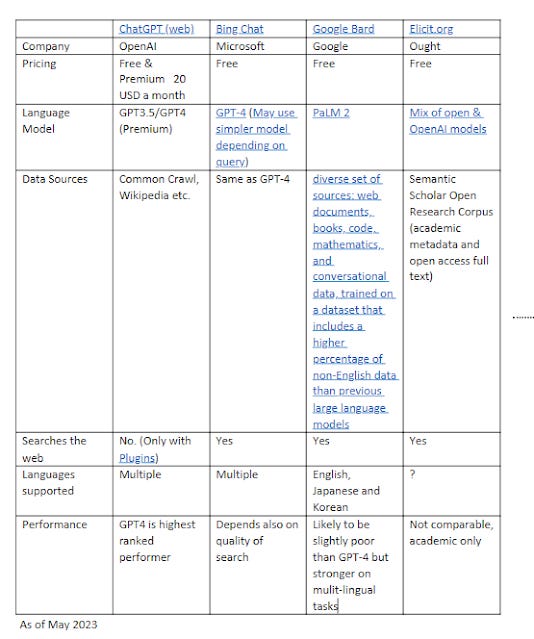

ChatGPT by OpenAI is the most famous and most widely used autoregressive, decoder-only Transformer-based large language model. However, it is not the only one. Besides open models like BLOOM, Google has developed its own, including LaMDA and the Pathways Language Model (PaLM), both in 2022, but had not released any of them to the public until now.

Google Bard was first announced in February 2023 as an "experimental conversational AI service, powered by LaMDA." This was widely seen as a reaction to the popularity of OpenAI's ChatGPT.

Access to Google Bard was initially limited by a waitlist and restricted to the US (in English only), but in May 2023 it was made available globally (in 180 countries) with no waitlist. This version of Bard is now based on PaLM 2 and also works in Japanese and Korean, with more languages to come.

This announcement was made at Google I/O 2023, where a host of other AI-related improvements and products were also announced. Some of them look fascinating, such as the new Search Generative Experience, available as an experimental feature in Search Labs, which blends normal search results with generated content. However, availability is currently restricted to the US.

In this piece, I will give my first impressions of Google Bard, which is now available in Singapore, and briefly compare it to OpenAI's ChatGPT, Bing Chat (which integrates Bing search with GPT-4; see my review) and other similar systems.

Does Google Bard search the web?

There are currently two main ways large language models are being used to query for information. The first is to use the model directly, without search. For example, when you type the prompt "Who won the FIFA World Cup in 2022?" into ChatGPT, the system answers by generating text based on the weights its neural net learned during training.

Because ChatGPT's training data only goes up to 2021, it is of course unable to answer the question.

The solution is to add a search engine that retrieves text to support the answers generated by the AI. For example, OpenAI has released plugins that extend the capabilities of ChatGPT, one of which adds web browsing. However, access to plugins is limited at the time of writing, so most people would encounter such systems through Bing Chat, Perplexity or academic tools like Elicit.org, which are search engines that use AI to generate answers.
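To make the difference between the two approaches concrete, here is a minimal sketch of the retrieve-then-generate pattern in Python. It is not how Bing Chat, Perplexity or Bard actually work: the keyword-overlap ranking is a crude stand-in for a real search engine, the URLs are made up, and the final step of sending the prompt to a language model is left out because it depends on whichever model API you use.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    url: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for a real search index."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Return up to k documents that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc.text)), doc) for doc in corpus]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Paste the retrieved passages into the prompt as numbered sources."""
    sources = "\n".join(
        f"[{i}] {doc.url}: {doc.text}" for i, doc in enumerate(docs, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{sources}\n\nQuestion: {query}\nAnswer:"
    )


# Toy corpus with made-up URLs, standing in for live search results.
corpus = [
    Document("https://example.com/worldcup",
             "Argentina won the 2022 FIFA World Cup final in Qatar."),
    Document("https://example.com/olympics",
             "The 2020 Summer Olympics were held in Tokyo in 2021."),
]

query = "Who won the FIFA World Cup in 2022?"
prompt = build_prompt(query, retrieve(query, corpus, k=1))
print(prompt)  # This string would then be sent to the language model.
```

Because the model is told to answer only from the pasted sources, it has something concrete to point back to. A model answering purely from its weights has no such sources to cite.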

So, does Google Bard search the web?

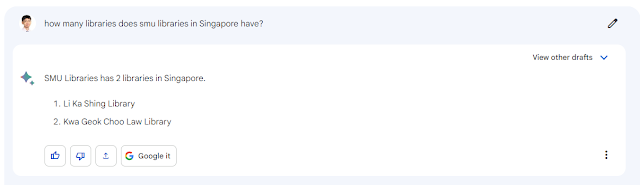

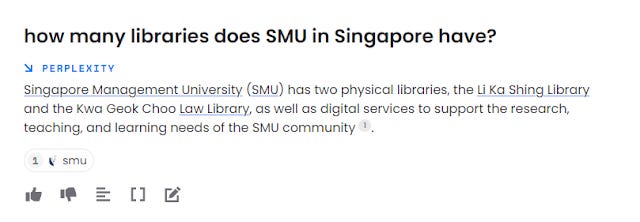

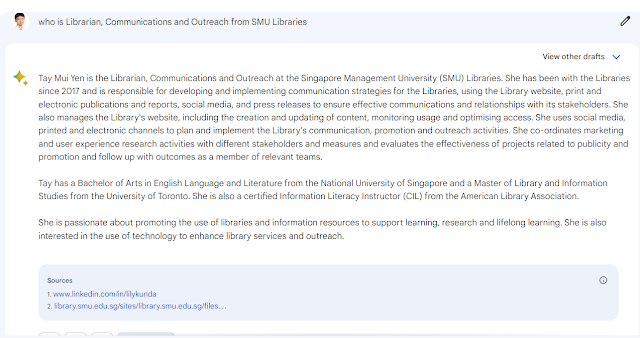

My first thought was that it did not. Look at the output below.

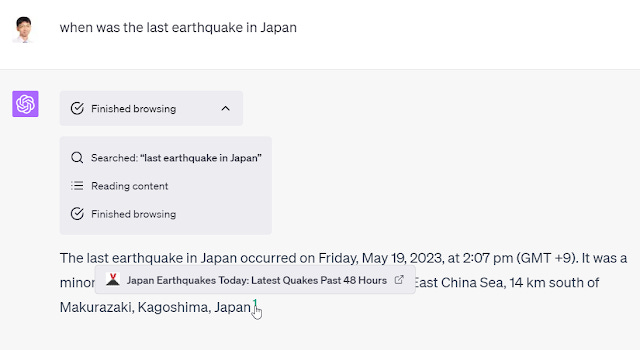

While Bard correctly answered the query, it did not show any sign that it was searching. Compare this to the answer given by Bing Chat below.

In any case, was it really searching the web? Perhaps it was just generating answers from its pretrained data, in which case it would likely have trouble pointing to a source.

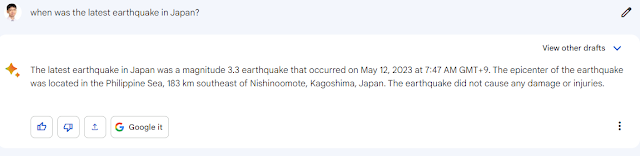

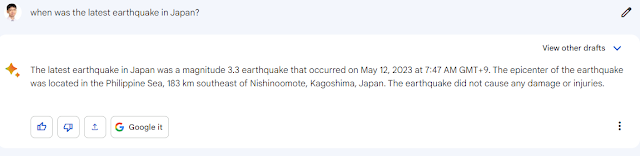

But when I tested it by asking when the last earthquake in Japan was, it got it right, pinpointing information that was only a few hours old at the time of the prompt.

It was clearly using the web to get the latest information, and based on the text I could even guess which webpage it got the answer from. But there was no citation.

Google Bard's citations are inconsistent

Up to now, all the systems I have seen that combine search engines with large language models (so-called "retrieval-augmented language models") have consistently cited the sources of the answers they generate. Besides Bing Chat, there is also Perplexity.ai. While it doesn't show a notice that it is searching, as Bing Chat does, it provides a citation for each sentence it outputs.
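Per-sentence citation of the kind Perplexity.ai shows can be approximated after the fact. The sketch below is a toy illustration under stated assumptions, not a description of how Perplexity actually does it: each generated sentence is attributed to whichever retrieved source shares the largest fraction of its words, and a sentence is left uncited when no source reaches a hypothetical 50% threshold.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def words(text: str) -> set[str]:
    """Lowercase word tokens used for the overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def cite_sentences(answer: str, sources: list[str], min_overlap: float = 0.5) -> str:
    """Append [n] to each sentence that best matches source n.

    min_overlap is an assumed threshold: the fraction of a sentence's words
    that must appear in a source before that source is cited.
    """
    cited = []
    for sentence in split_sentences(answer):
        sent_words = words(sentence)
        best_idx, best_score = None, 0.0
        for i, src in enumerate(sources, start=1):
            score = len(sent_words & words(src)) / max(len(sent_words), 1)
            if score > best_score:
                best_idx, best_score = i, score
        if best_idx is not None and best_score >= min_overlap:
            cited.append(f"{sentence} [{best_idx}]")
        else:
            cited.append(sentence)  # No source supports it: leave uncited.
    return " ".join(cited)


sources = [
    "Argentina won the 2022 FIFA World Cup final in Qatar.",
    "Lionel Messi was named the best player of the 2022 tournament.",
]
answer = ("Argentina won the 2022 FIFA World Cup. "
          "Messi was named best player of the tournament. "
          "The final drew two billion viewers.")
print(cite_sentences(answer, sources))
```

The third sentence comes back without a citation because neither source supports it, which is exactly the kind of signal that makes consistent citation useful for verification.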

Even the new browsing plugin for ChatGPT (see below) cites consistently, though apparently at the paragraph level. We will review it in the next blog post.

Interestingly, Bard often does not cite at all.

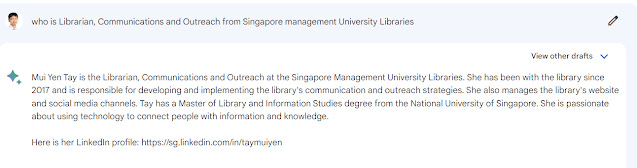

To be fair, for some answers Bard does cite, or at least list, sources.

The second output has a lot of wrong data, basically because I did not spell out "SMU", and there are various other "SMU"s besides the one in Singapore, so it got confused.

But that's exactly why citations are so important: they help you verify.

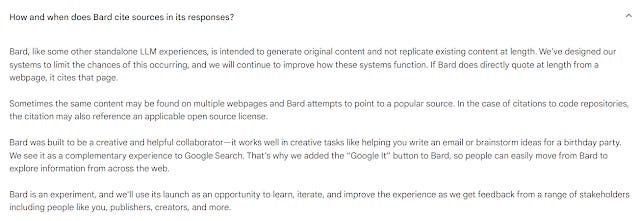

So, what's going on? The Bard FAQ helps (see below).

The FAQ makes three main points about when Bard cites:

- If Bard does "directly quote at length from a webpage".
- "In the case of citations to code repositories, the citation may also reference an applicable open-source license."
- "Sometimes the same content may be found on multiple webpages and Bard attempts to point to a popular source."

While the first two points are reasonable, the third is odd: "sometimes", when there are multiple sources, Bard will cite a popular one. But why only sometimes?

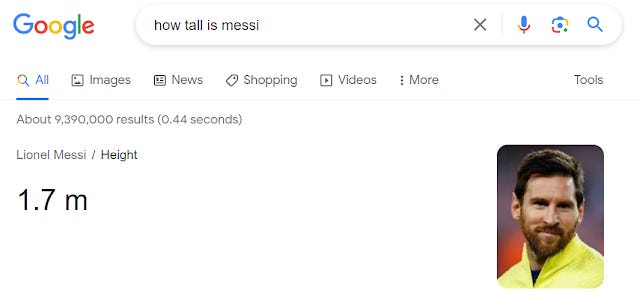

To be fair, the basic Google Search currently gives direct answers in two situations, and it doesn't always cite either. The first is when the answer comes from the Google Knowledge Graph, in which case it doesn't cite at all. See the example below.

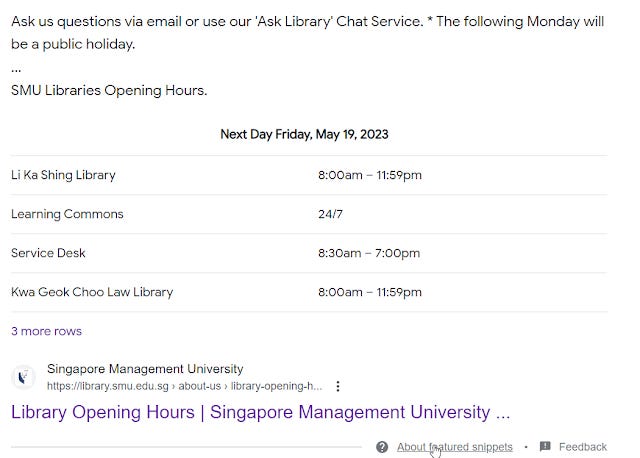

In the second case, Google extracts the answer from a webpage using a feature called featured snippets, and then it does link to the webpage.

See the example below.

One can speculate about why Google Bard doesn't cite as consistently as Bing Chat or Perplexity (I won't), but it certainly makes verifying generated answers a lot more difficult, and I'm disappointed by this.

I wonder if Search Generative Experience, where Google's work on generative AI is meant to blend with its flagship search engine, is where the main effort will go, with Bard just a testbed.

Google Bard vs ChatGPT

I've only tried Bard for one week, but so far I am struggling to find a good use for it.

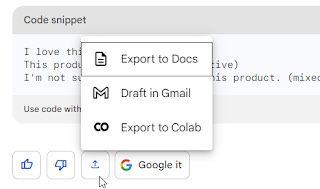

Google Bard has some extra minor features not found in ChatGPT or Bing Chat, such as exporting code to Google Colab, but none seem particularly important.

One advantage Google Bard has over ChatGPT is that it can search the web for the latest information, while ChatGPT cannot unless you pay for ChatGPT Plus and use plugins. Still, you could use Bing Chat or Perplexity.ai for that, both of which leverage the latest GPT-4 models and search the web.

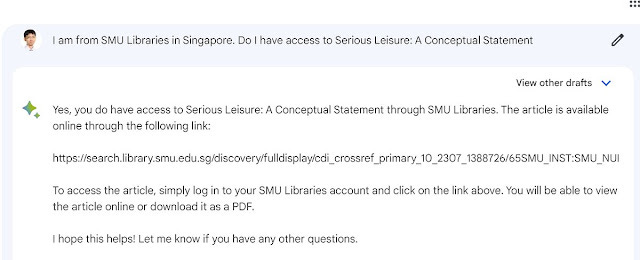

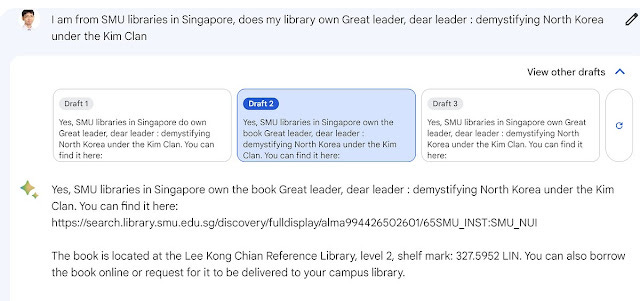

On the other hand, you may prefer the fact that Bard uses Google Search, while Bing Chat and Perplexity use Bing as their search engine. For example, my library's catalog records are indexed in Google but not in Bing, so Bard can answer questions about item availability.

However, its inconsistent citing of sources means I still prefer Bing Chat and Perplexity.ai.

Google Bard is pretty fast, which is nice. You can even view different drafts of an answer by clicking "View other drafts" and see them instantly (as opposed to waiting for ChatGPT to regenerate an answer).

But I guess the question you want me to answer is: are Google Bard's answers at the same level as, or even better than, those of ChatGPT (GPT-3.5) or even GPT-4?

Unfortunately, in my personal experience, the quality of Bard's answers is always worse than GPT-4's and maybe even ChatGPT's (GPT-3.5). It tends to hallucinate a lot more, even when it can access the right webpage.

For example, when I ask it about the availability of an item in my library, it is often able to find the right record and even point to the URL, but it often makes up details about location and available versions! (In the image above, it gives a made-up shelf mark, among other problems.)

This is also consistent with most of the chatter on the web: Bard isn't as good as GPT-4 and possibly not even as good as GPT-3.5. Some suggest it is pretty good at coding, but I haven't noticed much of that.

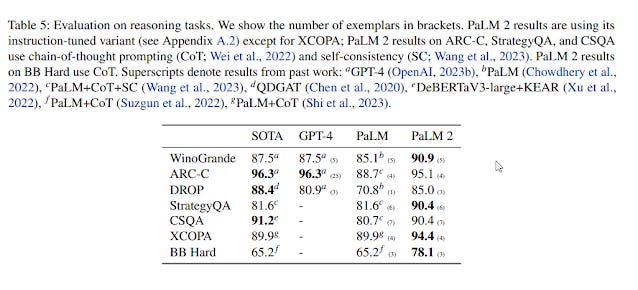

Even if you look at the technical report for PaLM 2, the model Bard uses, one can't help but notice that the results don't quite favour PaLM 2.

For example, Table 5 shows PaLM 2 is competitive with GPT-4 on reasoning tasks, but if you look carefully, PaLM 2 was evaluated with chain-of-thought prompting, which is known to give a substantial boost to results. It is clearly unfair to compare them like this, since GPT-4 was not evaluated the same way.

The tables that follow show a similar pattern, with Google's model tested using chain-of-thought or similar prompting techniques that boost its results.

Finally, it's unclear whether the model behind Bard is the same as, or as large and capable as, the best PaLM 2 model Google has. For example, the paper touts PaLM 2's "significant multilingual language" abilities, yet at the time of writing Bard supports only English and two other languages.

Conclusion

Overall, Google's first public entry into the Generative AI/Large Language Model game isn't groundbreaking.

It is unclear to me what Google's plans are for integrating search with this technology (Search Generative Experience), and perhaps Google is unclear too.

During Google I/O 2023, Google mentioned a new LLM already in training called Gemini. So perhaps this is what we should be waiting for?

Additional details

Description

ChatGPT by OpenAI is the most famous and most widely used autoregressive, decoder-only Transformer-based large language model.

Identifiers

- UUID: a8c4dca9-1e02-4807-8a84-63600846ce80
- GUID: 164998126
- URL: https://aarontay.substack.com/p/google-bard-first-impression

Dates

- Issued: 2023-05-21T20:25:00
- Updated: 2023-05-21T20:25:00