In November 2022, I created a table for a talk comparing GPU programming models and their support on GPUs of the three vendors (AMD, Intel, NVIDIA). The audience liked it, so I beefed it up a little and posted it on this very blog.

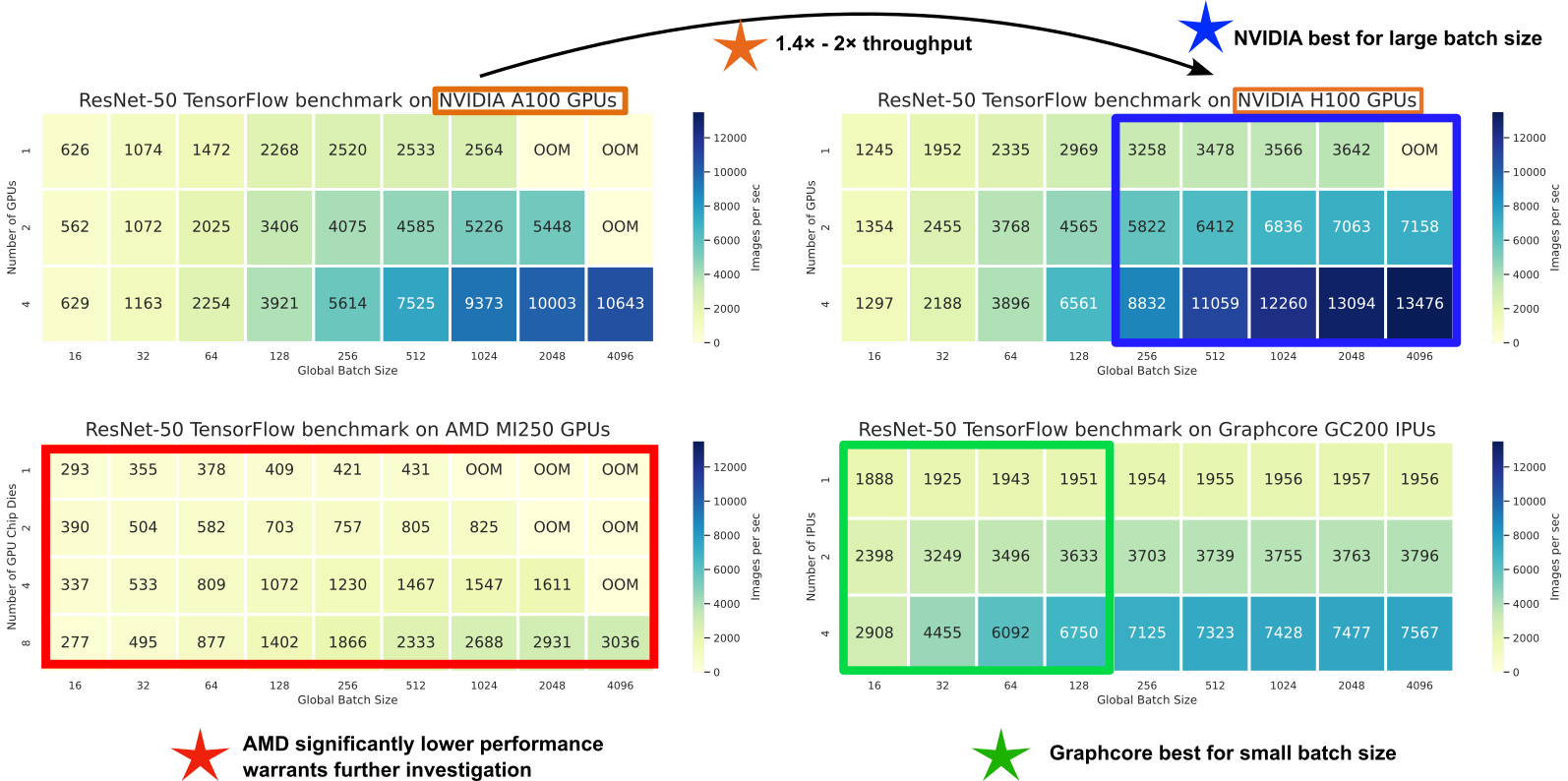

The Supercomputing Conference 2023 took place in Denver, Colorado, from November 12th to 17th. For the Women in HPC workshop, we submitted a paper on benchmarking different accelerators for AI. The paper was accepted, and I was invited to give a lightning talk presenting the work, a spin-off of our OpenGPT-X project.

**Poster in institute repository:** http://dx.doi.org/10.34734/FZJ-2023-04519 At the ISC High Performance Conference 2023 a little while ago, we presented a project poster on the SCALABLE project, embedded at the end of this post. The work was also previously presented as a paper at the Computing Frontiers 2023 conference.

This post showcases how to use the highly optimized, GPU-based collectives of NVIDIA's NCCL library by using it as a UCC backend through MPI.

On May 29, together with project partners from ParTec, we held a workshop about using the Modular Supercomputing Architecture (MSA).

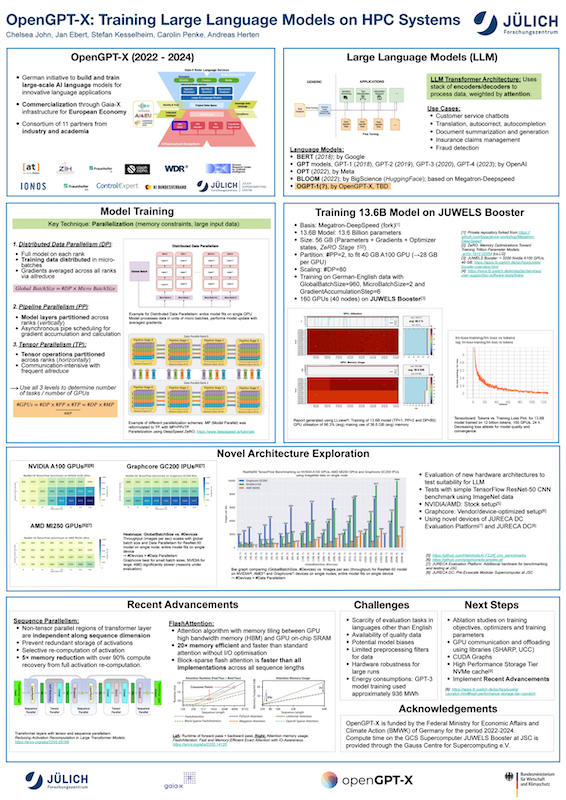

**Poster publication:** http://hdl.handle.net/2128/34532 The ISC High Performance Conference 2023 was held in Hamburg, Germany, from May 21 to May 25. At the conference, we presented a project poster on the OpenGPT-X project, outlining the progress and initial exploration results. The poster was even featured in HPCWire’s May 24 recap of ISC within the AI segment!

For a recent talk at DKRZ in the scope of the natESM project, I created a table summarizing the current state of using a certain programming model on a GPU of a certain vendor, for C++ and Fortran. Since it led to quite a discussion in the session, I made a standalone version of it with some updates and elaborations here and there.

TL;DR: I held an HPC intro talk. Slides are below. In MAELSTROM, we connect three areas of science: 🌍Weather and climate simulation with 🤖Machine Learning methods and workflows using 📈HPC techniques and resources. Halfway into the project, a few days ago, we held a boot camp at JSC to teach this Venn diagram to a group of students. Some were ML experts but had never used an HPC system.