“The new is always a challenge. For us, the organizers, putting this work together was a stimulating challenge that opened the way to new adventures. We moved out of theory and put into practice the organization of a book built by many hands.

Rogue Scholar Posts

Divulga-CI – Revista de Divulgação Científica em Ciência da Informação, Volume 2, Number 5, May 2024. Edited in April 2024; last edited in May 2024; published on May 9, 2024. Available at: https://www.divulgaci.unir.br and https://www.divulgaci.labci.online. Laboratório Aberto Contexto e Informação, Universidade Federal de Rondônia, Universidade Federal do Estado do Rio de Janeiro. The post Expediente first appeared on Divulga-CI.

An improvement architecture superior to the Transformer, proposed by Meta
Author: Qingqin Fang (ORCID: 0009-0003-5348-4264)
Introduction: Recently, researchers from Meta and the University of Southern California introduced a model called Megalodon. They claim that this model can expand the context window of language models to handle millions of tokens without overwhelming your memory.
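The excerpt does not say how memory stays under control. As a hedged illustration only, assume a chunk-wise attention scheme in which tokens attend only to other tokens in the same fixed-size chunk (our understanding is that Megalodon builds on the MEGA line of work, which uses chunking, but this sketch is not its exact design). The arithmetic below counts attention-score entries for one head; the chunk size of 4,096 and all function names are hypothetical choices for illustration.

```python
def full_attention_scores(seq_len: int) -> int:
    """Entries in one head's score matrix when every token attends to every token."""
    return seq_len * seq_len


def chunked_attention_scores(seq_len: int, chunk_size: int) -> int:
    """Entries when tokens attend only within fixed-size chunks.

    The sequence is split into full chunks plus one shorter remainder chunk,
    and each chunk contributes a (chunk length x chunk length) score block.
    """
    full_chunks, remainder = divmod(seq_len, chunk_size)
    return full_chunks * chunk_size * chunk_size + remainder * remainder


if __name__ == "__main__":
    seq_len = 1_000_000   # "millions of tokens"
    chunk_size = 4_096    # hypothetical chunk size chosen for illustration
    full = full_attention_scores(seq_len)
    chunked = chunked_attention_scores(seq_len, chunk_size)
    print(f"full:    {full:,} scores")
    print(f"chunked: {chunked:,} scores")
    print(f"ratio:   {full / chunked:.0f}x fewer")
```

Full attention grows quadratically with sequence length, while the chunked count grows roughly linearly (about `seq_len * chunk_size`). Chunking on its own would cut information flow between chunks; as we understand the paper, Megalodon pairs it with a recurrent moving-average component for that, which this sketch does not model.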

Understanding the Evolutionary Journey of LLMs
Author: Wenyi Pi (ORCID: 0009-0002-2884-2771)
Introduction: When we talk about large language models (LLMs), we are actually referring to a type of advanced software that can communicate in a human-like manner. These models have the amazing ability to understand complex contexts and generate content that is coherent and has a human feel.

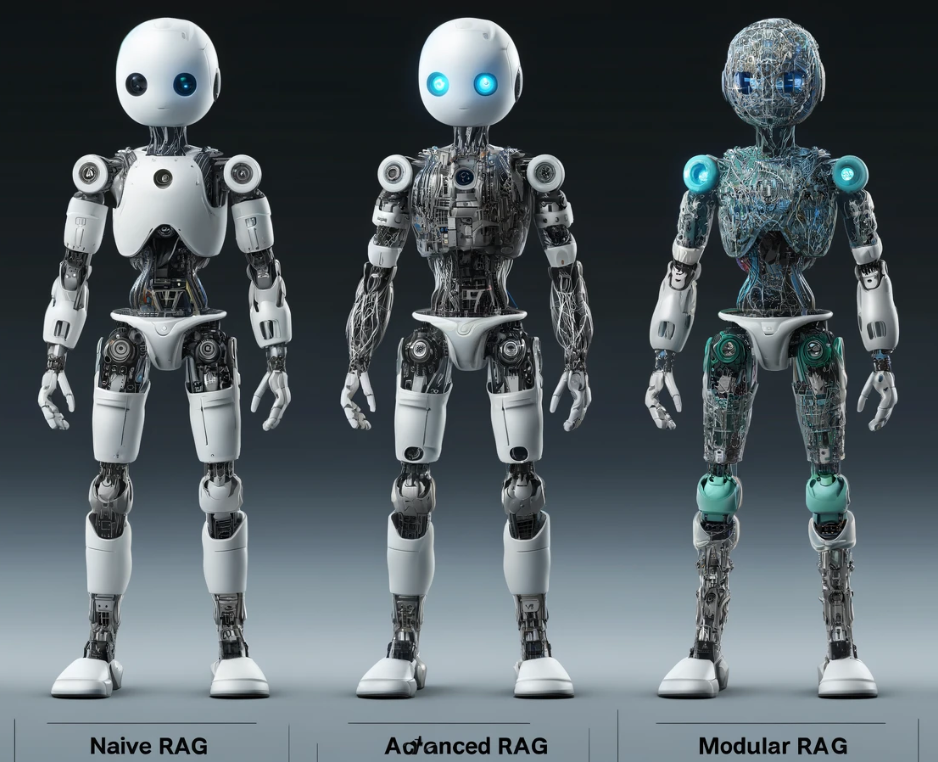

From Naive to Modular: Tracing the Evolution of Retrieval-Augmented Generation
Author: Vaibhav Khobragade (ORCID: 0009-0009-8807-5982)
Introduction: Large Language Models (LLMs) have achieved remarkable success.
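The excerpt only names the topic. As a hedged illustration of the "naive" end of the spectrum in the title, here is a minimal retrieve-then-read sketch: score documents against the question, keep the top k, and paste them into the prompt. The word-overlap scorer, the prompt template, and every function name are hypothetical stand-ins. A real system would use a proper retriever (for example BM25 or dense embeddings) and would send the prompt to an LLM, which is omitted here.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, doc: str) -> int:
    """Count shared tokens (with multiplicity); a toy replacement for BM25 or embeddings."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum((q & d).values())


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k documents with the highest overlap score (ties keep corpus order)."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Naive RAG: paste the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    # Made-up three-document corpus for illustration.
    corpus = [
        "Megalodon extends the context window of language models.",
        "RAG retrieves documents and adds them to the prompt.",
        "LSTMs and GRUs were introduced to handle long-term dependencies.",
    ]
    question = "How does RAG use retrieved documents?"
    print(build_prompt(question, retrieve(question, corpus, k=1)))
```

Each stage is a separate function so that the retriever or the prompt template can be swapped independently.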

Supervised Fine-tuning, Reinforcement Learning from Human Feedback and the latest SteerLM
Author: Xuzeng He (ORCID: 0009-0005-7317-7426)
Introduction: Large Language Models (LLMs), usually trained with extensive text data, can demonstrate remarkable capabilities in handling various tasks with state-of-the-art performance. However, people nowadays typically want something more personalised instead of a general solution.

Attention mechanism not getting enough attention
Author: Dhruv Gupta (ORCID: 0009-0004-7109-5403)
Introduction: As discussed in another article, RNNs struggled to learn long-term dependencies. LSTMs and GRUs were introduced to address this issue. However, even though LSTMs and GRUs did a fairly decent job on textual data, they did not perform well.
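The excerpt stops before describing the mechanism itself. As a hedged sketch, assuming the post refers to the standard scaled dot-product attention, softmax(QK^T / sqrt(d)) V (the excerpt does not say which variant it covers), here is a dependency-free Python version. Each output is a weighted average over all positions, so a distant token is one step away rather than many recurrent steps away, which is the long-term-dependency problem described above. Function names are illustrative.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: list[list[float]],
    keys: list[list[float]],
    values: list[list[float]],
) -> list[list[float]]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries and keys are vectors of dimension d; values holds one vector per key.
    Every query scores every key directly, regardless of how far apart they are.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to each key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one output dimension at a time.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    # Toy sequence of three positions with 2-dimensional keys and values (made-up numbers).
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(attention([[2.0, 0.0]], keys, values))
```

Subtracting the maximum score before exponentiating leaves the softmax result unchanged but avoids overflow for large scores.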

Large Language Models for Fake News Generation and Detection
Author: Amanda Kau (ORCID: 0009-0004-4949-9284)
Introduction: In recent years, fake news has become an increasing concern for many, and for good reason. Newspapers, which we once trusted to deliver credible news through accountable journalists, are vanishing en masse along with their writers.

Reproducibility in Neuroscience – Mats van Es on FieldTrip reproducescript
JOSSCast: Open Source for Researchers, by The Journal of Open Source Software, May 02, 2024
Mats van Es joins Arfon and

The Three Oldest Pillars of NLP
Author: Dhruv Gupta (ORCID: 0009-0004-7109-5403)
Introduction: Natural Language Processing (NLP) has almost become synonymous with Large Language Models (LLMs), Generative AI, and fancy chatbots. With the ever-increasing amount of textual data and exponential growth in computing power, these models are improving every day.