Stevan Harnad’s “Subversive Proposal” came of age last year. I’m now teaching students younger than Stevan’s proposal, and yet, very little has actually changed in these 21 years.

**The Giga-Curation Challenge 2016** Annotation and curation are underappreciated parts of scholarship. They allow someone to provide additional insight into a work, and they are needed for efficient and easy re-use.

I was interested in the analysis by Frontiers on the lack of correlation between the rejection rate of a journal and its “impact” (as measured by the JIF). There’s a nice follow-up to it here at Science Open.

tl;dr: Data from thousands of non-retracted articles indicate that experiments published in higher-ranking journals are less reliable than those reported in ‘lesser’ journals. Vox health reporter Julia Belluz has recently covered the reliability of peer review.

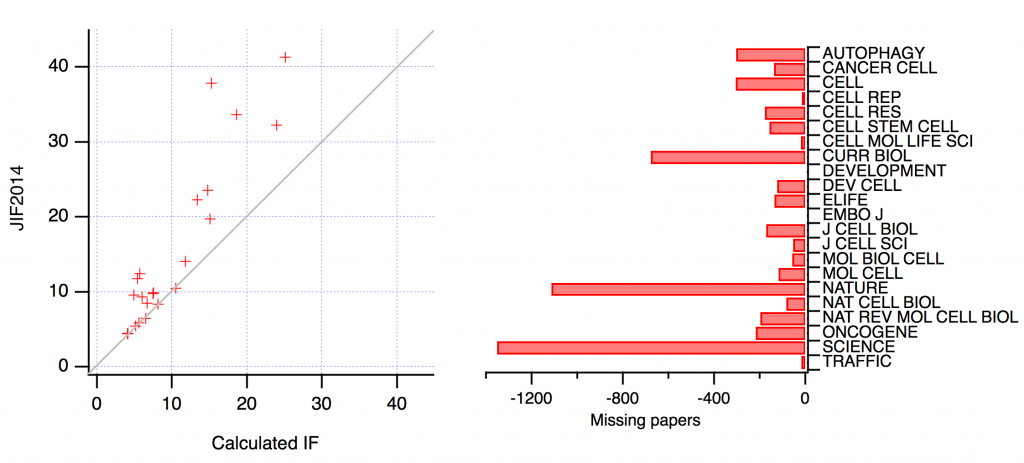

Over the last decade or two, there have been multiple accounts of how publishers have negotiated the impact factors of their journals with the “Institute for Scientific Information” (ISI), both before and after it was bought by Thomson Reuters. This is commonly done by negotiating which articles count as “citable items” in the denominator of the impact factor calculation.
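To see why the denominator matters, here is a minimal sketch of the two-year impact factor: citations received in year Y to items published in Y-1 and Y-2, divided by the number of “citable items” published in those two years. Citations to items classed as non-citable front matter still count in the numerator, so reclassifying items shrinks only the denominator. The journal and its numbers below are hypothetical.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Two-year impact factor: citations in year Y to items published in
    Y-1 and Y-2, divided by the citable items published in Y-1 and Y-2."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical journal: 1,000 citations in year Y to its Y-1/Y-2 content.
citations = 1000

# Counting all 500 published items as citable...
jif_all = impact_factor(citations, 500)

# ...versus having 100 of them reclassified as non-citable front matter.
# The numerator is unchanged, because citations to those items still count.
jif_negotiated = impact_factor(citations, 400)

print(f"{jif_all:.2f} -> {jif_negotiated:.2f}")  # 2.00 -> 2.50
```

Nothing about the journal’s content changes here; only the item classification does, and the number rises by 25%.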

tl;dr: It is a waste to spend more than the equivalent of US$100 in tax funds on a scholarly article. Collectively, the world’s public purse currently spends the equivalent of ~US$10b every year on scholarly journal publishing. Dividing that by the roughly two million articles published annually gives an average cost per scholarly journal article of about US$5,000.
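The arithmetic behind that average, sketched with the rounded figures from the paragraph above (~US$10b per year, ~two million articles, a US$100 benchmark). These are approximations, not precise accounting data.

```python
annual_spend_usd = 10e9          # ~US$10b public spend per year (from the text)
articles_per_year = 2_000_000    # ~two million articles per year (from the text)
target_cost_usd = 100            # the US$100 per-article benchmark (from the text)

# Average cost per article under current spending.
cost_per_article = annual_spend_usd / articles_per_year

# How many times the benchmark that average is.
overspend_ratio = cost_per_article / target_cost_usd

# What the same article output would cost at the benchmark price.
total_at_target = articles_per_year * target_cost_usd

print(f"average cost per article: US${cost_per_article:,.0f}")       # US$5,000
print(f"ratio to US$100 benchmark: {overspend_ratio:.0f}x")           # 50x
print(f"total at US$100/article: US${total_at_target / 1e9:.1f}b")    # US$0.2b
```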

There have been calls for journals to publish the distribution of citations to the papers they publish (1 2 3). The idea is to turn the focus away from just one number – the Journal Impact Factor (JIF) – and to look at all the data. Some journals have responded by publishing the data that underlie the JIF (EMBO J, Peer J, Royal Soc, Nature Chem). It would be great if more journals did this.
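A small sketch of why the full distribution is more informative than one number. The JIF is essentially a mean, and citation counts are typically highly skewed, so a few heavily cited papers can pull the mean far above what most papers receive. The ten citation counts below are hypothetical, not data from any journal.

```python
from statistics import mean, median

# Hypothetical citation counts for ten papers in one two-year window.
citations = [0, 1, 1, 2, 2, 3, 4, 5, 8, 74]

# A JIF-like average (ignoring the citable-items subtleties of the real JIF).
jif_like_mean = mean(citations)

# The median is a better guide to what a "typical" paper receives.
typical = median(citations)

# Share of papers cited less often than the average suggests.
below_mean = sum(1 for c in citations if c < jif_like_mean) / len(citations)

print(f"mean {jif_like_mean:.1f}, median {typical:.1f}, "
      f"{below_mean:.0%} of papers below the mean")
# mean 10.0, median 2.5, 90% of papers below the mean
```

With this made-up distribution, a single paper accounts for most of the citations, which a single average hides entirely.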

A few days ago, Retraction Watch published the top ten most-cited retracted papers. I saw this post with a bar chart to visualise these citations. It didn’t quite capture what effect (if any) a retraction has on citations, so I thought I’d quickly plot this out for the number one article on the list.
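One way to look at a retraction’s effect is to line citations up by year and compare the years before and after the retraction. The sketch below does that with a text-mode bar chart. The yearly counts and retraction year are hypothetical placeholders, not the figures for the paper on the list, and `split_around` is an illustrative helper name.

```python
# Hypothetical yearly citation counts for one retracted paper (not real data).
citations_by_year = {2008: 10, 2009: 20, 2010: 30, 2011: 26,
                     2012: 24, 2013: 18, 2014: 15}
retraction_year = 2011  # hypothetical


def split_around(counts: dict, year: int) -> tuple:
    """Mean yearly citations strictly before and strictly after `year`."""
    before = [n for y, n in counts.items() if y < year]
    after = [n for y, n in counts.items() if y > year]
    mean_before = sum(before) / len(before) if before else 0.0
    mean_after = sum(after) / len(after) if after else 0.0
    return mean_before, mean_after


# Text-mode bar chart: one '#' per two citations, retraction year marked.
for year in sorted(citations_by_year):
    n = citations_by_year[year]
    marker = "  <- retracted" if year == retraction_year else ""
    print(f"{year} {'#' * (n // 2):<16} {n}{marker}")

mean_before, mean_after = split_around(citations_by_year, retraction_year)
print(f"mean before: {mean_before:.1f}, mean after: {mean_after:.1f}")
```

Excluding the retraction year itself keeps the comparison from mixing pre- and post-retraction citations within the same year.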

In a big step forward for allowing proper credit to be given to all of the awesome folks collecting and publishing data, the journal Global Ecology & Biogeography has just announced that it will start supporting an unlimited set of references to datasets used in a paper. These references will be included immediately after the traditional references section in both the HTML and PDF versions of the paper.

Last Christmas we gave you our heart; okay forget George Michael – we gave you beautiful imaging data sets, Virtual Machines, BYO data parties, GigaGitHub, more open peer review plus much more.