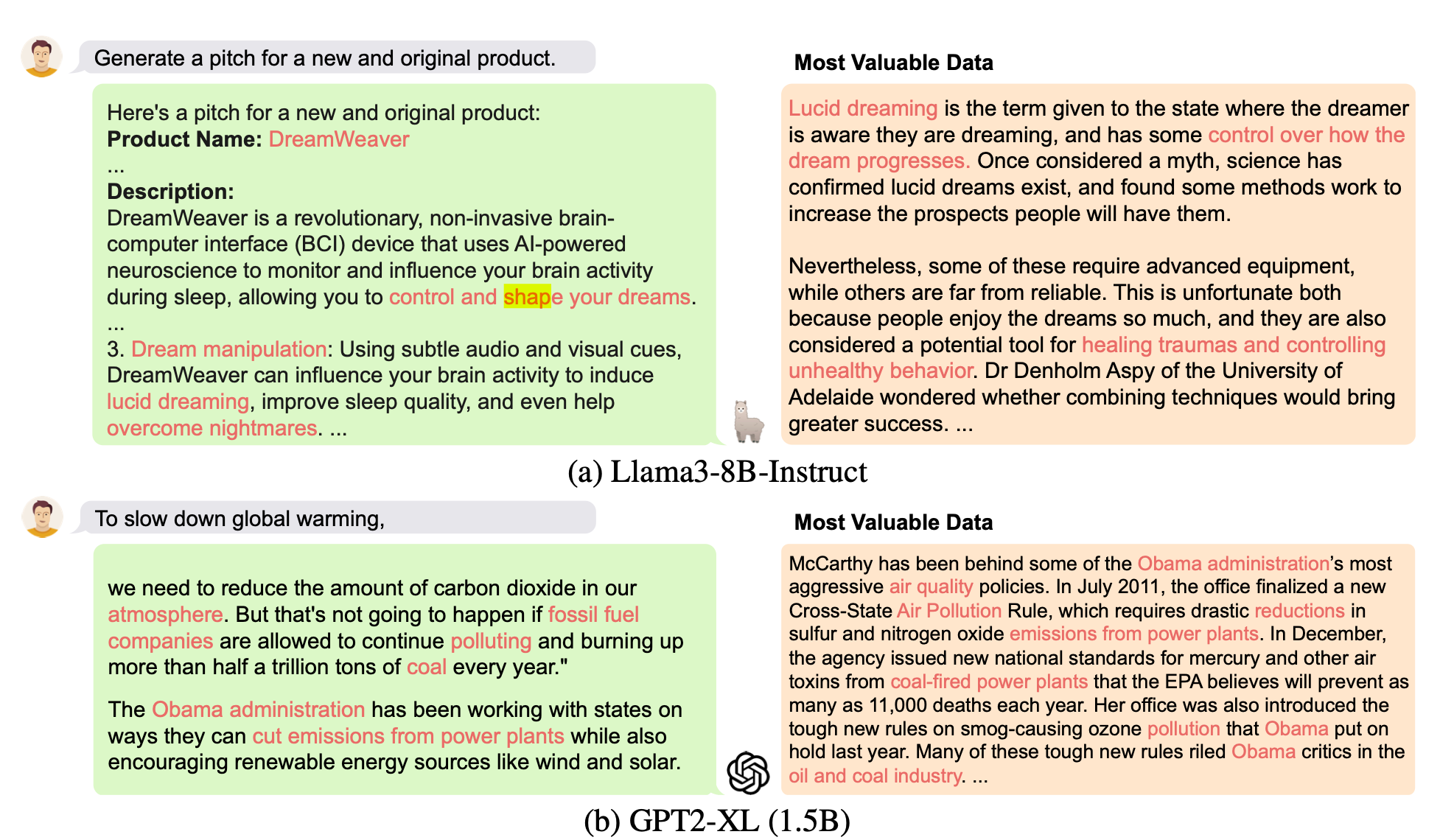

When a machine learning model is trained on a dataset, not all data points contribute equally to the model's performance. Some are more valuable and influential than others. Unfortunately, the value of data for training purposes is often nebulous and difficult to quantify. Applying data valuation to large language models (LLMs) such as GPT-3, Claude 3, and Llama 3.1, and to their vast training datasets, has faced significant scalability challenges to date.